Organizations are increasingly turning to cloud computing for IT agility, resilience and scalability. Amazon Web Services (AWS) stands at the forefront of this digital transformation, offering a robust, flexible and cost-effective platform that helps businesses drive growth and innovation.

However, as organizations migrate to the cloud, they face a complex and growing threat landscape of sophisticated and cloud-conscious threat actors. Organizations with ambitious digital transformation strategies must be prepared to address these security challenges from Day One. The potential threat of compromise underscores the critical need to understand and implement security best practices tailored to the unique challenges of cloud environments.

Central to understanding and navigating these challenges is the AWS shared responsibility model. AWS is responsible for delivering security of the cloud, including the security of underlying infrastructure and services. Customers are responsible for protecting their data, applications and resources running in the cloud. This model highlights the importance of proactive security measures at every phase of cloud migration and operation and helps ensure businesses maintain a strong security posture.

In this blog post, we cover five best practices for securing AWS resources to help you better understand how to protect your cloud environments as you build in the cloud.

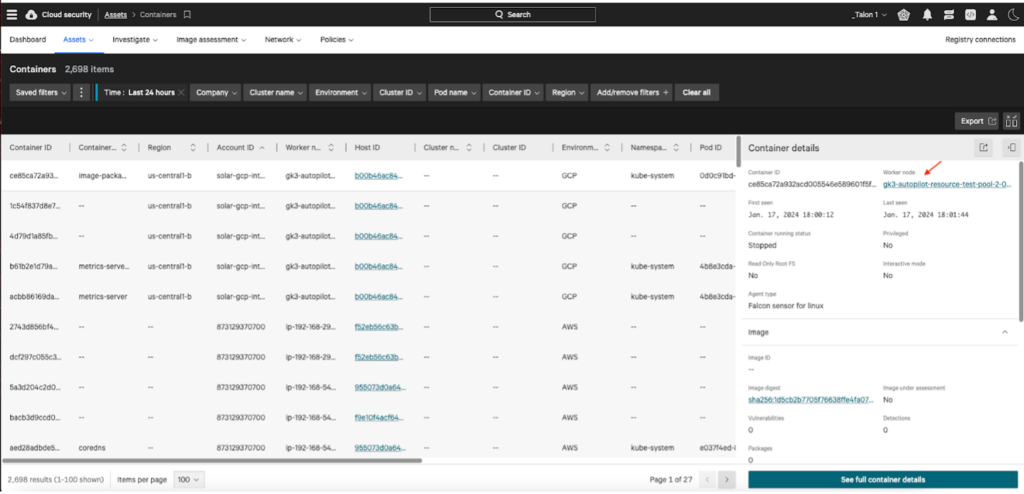

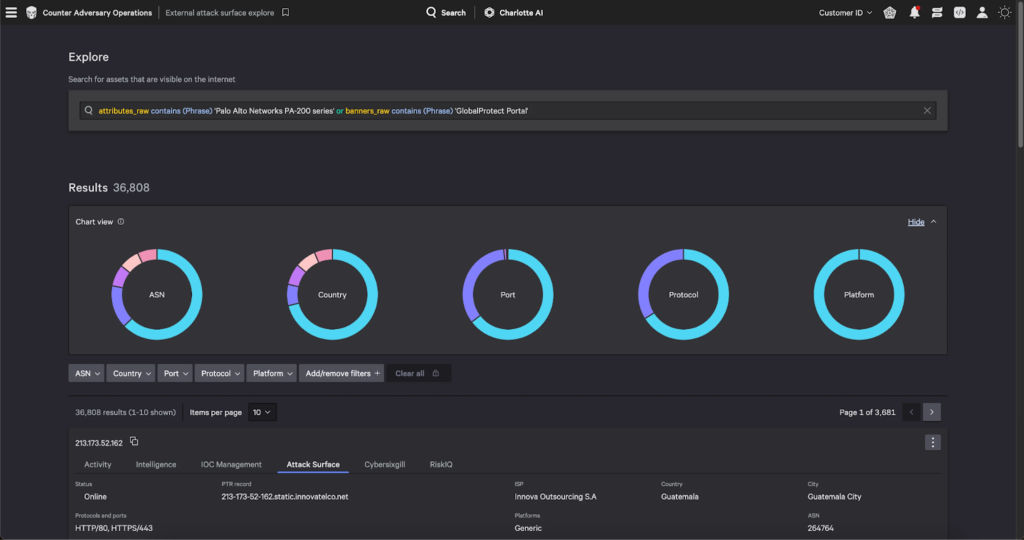

Best Practice #1: Know All of Your Assets

Cloud assets are not limited to compute instances (aka virtual machines) — they extend to all application workloads spanning compute, storage, networking and an extensive portfolio of managed services.

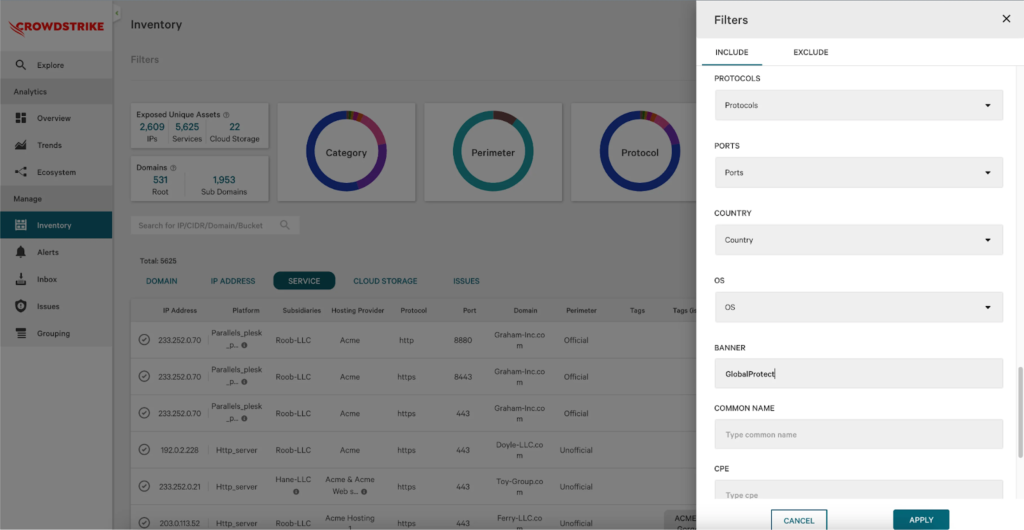

Understanding and maintaining an accurate inventory of your AWS assets is foundational to securing your cloud environment. Given the dynamic nature of cloud computing, it’s not uncommon for organizations to inadvertently lose track of assets running in their AWS accounts, which can lead to risk exposure and attacks on unprotected resources. In some cases, accounts created early in an organization’s cloud journey may not have the standard security controls that were implemented later on. In another common scenario, teams may forget about and unintentionally remove mitigations put in place to address application-specific exceptions, exposing those resources to potential attack.

To maintain adequate insight and awareness of all AWS assets in production, organizations should consider implementing the following:

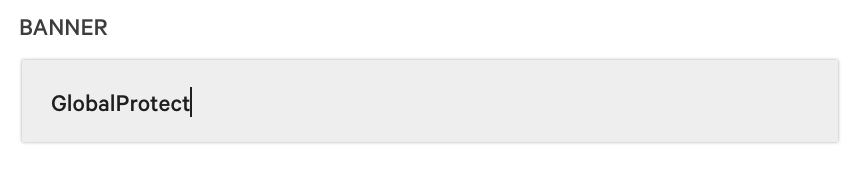

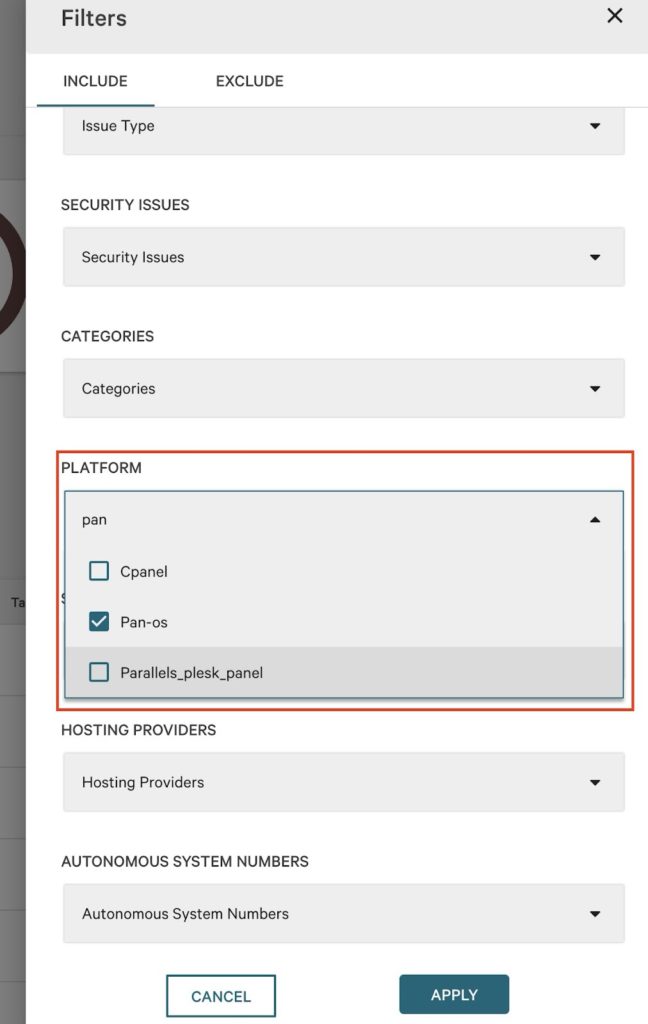

- Conduct asset inventories: Use tools and processes that provide continuous visibility into all cloud assets. This can help maintain an inventory of public and private cloud resources and ensure all assets are accounted for and monitored. AWS Resource Explorer and Cost Explorer can help discover new resources as they’re provisioned.

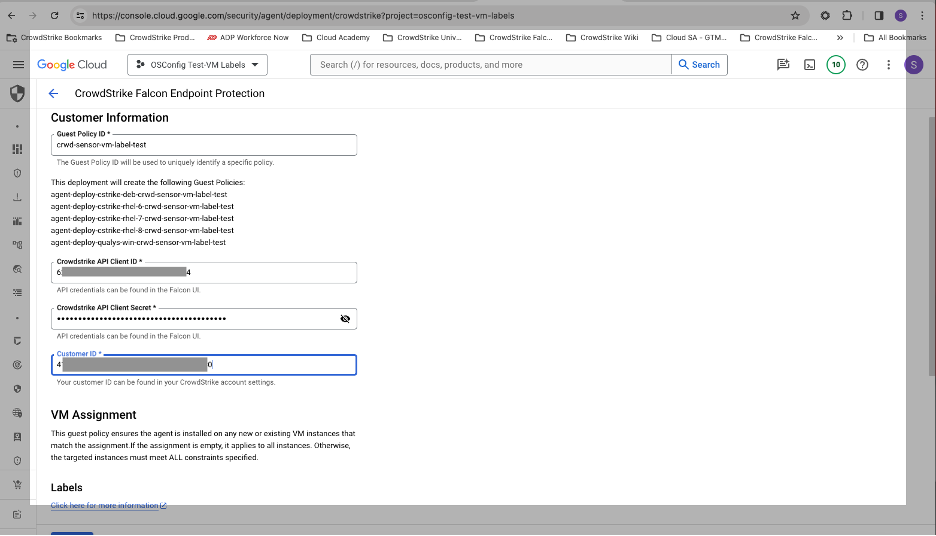

- Implement asset tagging and management policies: Establish and enforce policies for tagging cloud resources. This practice aids in organizing assets based on criticality, sensitivity and ownership, making it easier to manage and prioritize security efforts across the cloud environment. In combination with the AWS Identity and Access Management (IAM) service, tagging can also be used to dynamically grant access to resources via attribute-based access control (ABAC).

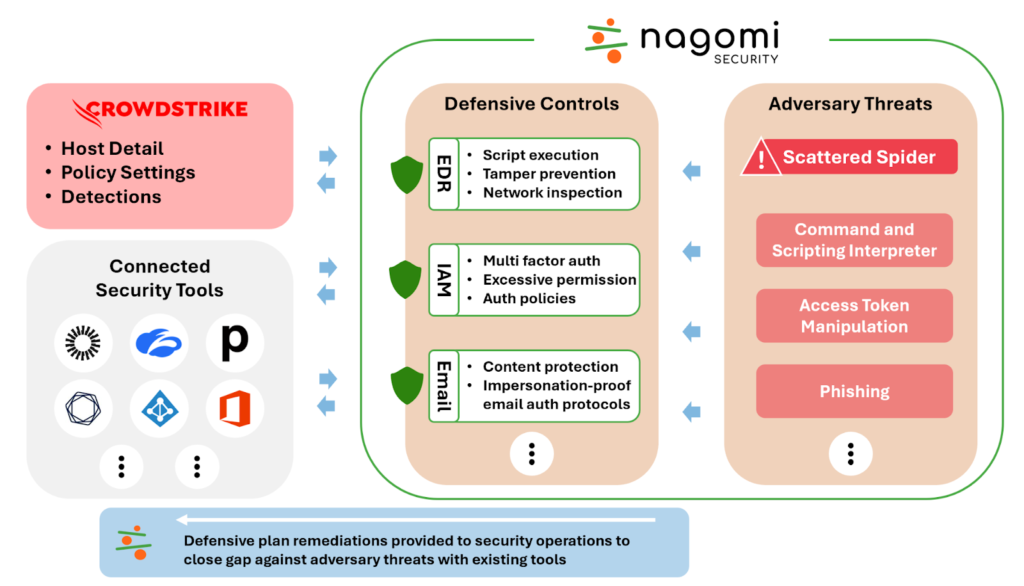

- Integrate security tools for holistic visibility: Combine the capabilities of cloud security posture management (CSPM) with other security tools like endpoint detection and response (EDR) solutions. Integration of these tools can provide a more comprehensive view of the security landscape, enabling quicker identification of misconfigurations, vulnerabilities and threats across all AWS assets. AWS services including Trusted Advisor, Security Hub, GuardDuty, Config and Inspector provide actionable insights to help security and operations teams improve their security posture.
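As a rough illustration of the tagging practice above, the following sketch checks an asset inventory against a tagging policy. It assumes the records are shaped like the `ResourceTagMappingList` entries returned by the Resource Groups Tagging API `GetResources` operation. The required tag keys, the `find_untagged` helper and the sample ARNs are hypothetical and would need to match your own policy.

```python
# Hypothetical tagging policy: every resource must carry these tag keys.
REQUIRED_TAGS = {"Owner", "Criticality", "DataSensitivity"}


def find_untagged(resources, required=REQUIRED_TAGS):
    """Map each resource ARN to the sorted list of required tag keys it lacks."""
    gaps = {}
    for resource in resources:
        present = {tag["Key"] for tag in resource.get("Tags", [])}
        missing = sorted(set(required) - present)
        if missing:
            gaps[resource["ResourceARN"]] = missing
    return gaps


# Sample records (made-up ARNs) in the assumed ResourceTagMappingList shape.
inventory = [
    {"ResourceARN": "arn:aws:s3:::example-logs",
     "Tags": [{"Key": "Owner", "Value": "secops"},
              {"Key": "Criticality", "Value": "high"},
              {"Key": "DataSensitivity", "Value": "internal"}]},
    {"ResourceARN": "arn:aws:ec2:us-east-1:111122223333:instance/i-0abc1234",
     "Tags": [{"Key": "Owner", "Value": "web-team"}]},
    {"ResourceARN": "arn:aws:rds:us-east-1:111122223333:db:legacy-db",
     "Tags": []},
]

for arn, missing in find_untagged(inventory).items():
    print(f"{arn} is missing tags: {', '.join(missing)}")
```

Running a check like this on a schedule, or whenever new resources are discovered, can surface assets that have no recorded owner or sensitivity level before they are forgotten.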

CrowdStrike Falcon® Cloud Security makes it easy to implement these practices by offering a consolidated platform that integrates with AWS features to maintain coverage across a customer’s entire multi-account environment. Falcon Cloud Security offers CSPM, which leverages Amazon EventBridge, IAM cross-account roles and AWS CloudTrail API audit telemetry to provide continuous asset discovery, scan for misconfigurations and suspicious behavior, improve least-privilege controls and deploy runtime protection on EC2 instances and EKS clusters as they’re provisioned. It guides customers on how to secure their cloud environments, which accelerates both the learning of cloud security skills and the time-to-value for cloud initiatives. Cloud operations teams can deploy AWS Security Hub with the CrowdStrike Falcon® Integration Gateway to view Falcon platform detections and trigger custom remediations inside AWS. Amazon GuardDuty leverages CrowdStrike Falcon® Adversary Intelligence indicators of compromise and can provide an additional layer of visibility and protection for cloud teams.

Best Practice #2: Enforce Multifactor Authentication (MFA) and Use Role-based Access Control in AWS

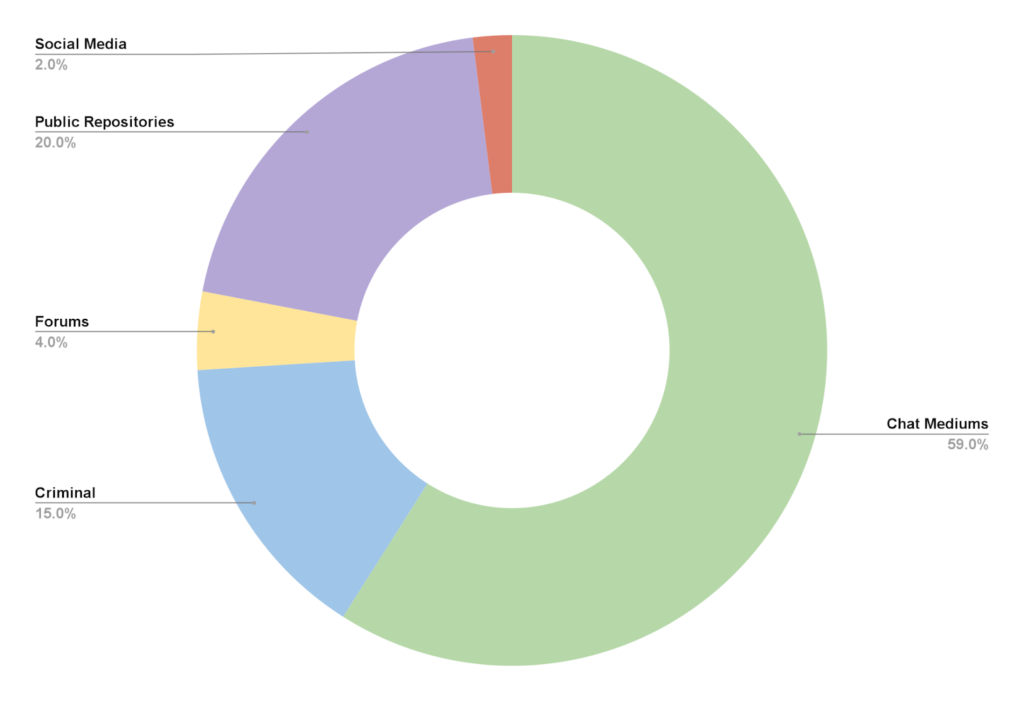

Stolen credentials pose a severe threat — whether they are user names and passwords or API key IDs and secrets — allowing adversaries to impersonate legitimate users and bypass identity-based access controls. This risk is exacerbated by scenarios where administrator credentials and hard-coded passwords are inadvertently stored in public-facing locations or within code repositories accessible online. Such exposures give attackers the opportunity to intercept live access keys, which they can use to authenticate to cloud services, posing as trusted users.

In cloud environments, as well as on-premises, organizations should adopt identity security best practices such as avoiding use of shared credentials, assigning least-privilege access policies and using a single source of truth through identity provider federation and single sign-on (SSO). AWS services such as IAM, Identity Center and Organizations can facilitate secure access to AWS services by supporting the creation of granular access policies, enabling temporary session tokens, and reporting on cross-account trusts and excessively permissive policies, thus minimizing the likelihood and impact of access key exposure. By implementing MFA in conjunction with SSO, role-based access and temporary sessions, organizations make it much harder for attackers to steal credentials and, more importantly, to effectively use them.
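One way to check how well these identity practices are followed is to review the IAM credential report, a CSV that IAM can produce through its `GenerateCredentialReport` and `GetCredentialReport` operations. The sketch below is a minimal example that assumes the report has already been downloaded as text. The sample data is made up and abbreviated to a few of the report's columns, and the helper names are hypothetical.

```python
import csv
import io

# Abbreviated, made-up sample; a real credential report has many more columns.
SAMPLE_REPORT = """user,password_enabled,mfa_active,access_key_1_active,access_key_2_active
alice,true,true,false,false
bob,true,false,true,false
ci-deploy,false,false,true,false
"""


def load_rows(report_csv):
    """Parse credential report text into a list of per-user dicts."""
    return list(csv.DictReader(io.StringIO(report_csv)))


def users_without_mfa(rows):
    """Users who can sign in with a password but have no MFA device."""
    return [r["user"] for r in rows
            if r["password_enabled"] == "true" and r["mfa_active"] != "true"]


def users_with_long_lived_keys(rows):
    """Users holding at least one active long-lived access key."""
    return [r["user"] for r in rows
            if "true" in (r.get("access_key_1_active"),
                          r.get("access_key_2_active"))]


rows = load_rows(SAMPLE_REPORT)
print("Console users without MFA:", users_without_mfa(rows))
print("Users with active access keys:", users_with_long_lived_keys(rows))
```

Users in the second list are candidates for migration to role-based access with temporary session tokens, which limits how long a leaked credential stays useful.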

Falcon Cloud Security includes cloud infrastructure entitlement management (CIEM), which evaluates whether IAM roles are overly permissive and provides the visibility to make changes with awareness of which resources will be impacted. Additionally, Falcon Cloud Security conducts pre-runtime scanning of container images and infrastructure-as-code (IaC) templates to uncover improperly elevated Kubernetes pod privileges and hard-coded credentials to prevent credential theft and lateral movement. Adding the CrowdStrike Falcon® Identity Protection module delivers strong protection for Active Directory environments, dynamically identifying administrator and service accounts and anomalous or malicious use of credentials, and allowing integration with workload detection and response actions.

Best Practice #3: Automatically Scan AWS Resources for Excessive Public Exposure

The inadvertent public exposure and misconfiguration of cloud resources such as EC2 instances, Relational Database Service (RDS) databases and containers on ECS and EKS through overly permissive network access policies pose a risk to the security of cloud workloads. Such lapses can open the door to unauthorized access to vulnerable services, giving attackers opportunities to steal data, launch further attacks and move laterally within the cloud environment.

To mitigate these risks and enhance cloud security posture, organizations should:

- Implement automated security audits: Utilize tools like AWS Trusted Advisor, AWS Config and AWS IAM Access Analyzer to continuously audit the configurations of AWS resources and identify and remediate excessive public exposure or misconfigurations.

- Secure AWS resources with proper security groups: Configure security groups for logical groups of AWS resources to restrict inbound and outbound traffic to only necessary and known IPs and ports. Whenever possible, use network access control lists (NACLs) to restrict inbound and outbound access across entire VPC subnets to prevent data exfiltration and block communication with potentially malicious external entities. Services like AWS Firewall Manager provide a single pane of glass for configuring network access for all resources in an AWS account using VPC Security Groups, Web Application Firewall (WAF) and Network Firewall.

- Collaborate across teams: Security teams should work closely with IT and DevOps to understand the necessary external services and configure permissions accordingly, balancing operational needs with security requirements.
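To make the security group review above concrete, here is a minimal sketch of an automated check for ingress rules that are open to the whole internet. It assumes the input is shaped like the `SecurityGroups` list returned by the EC2 `DescribeSecurityGroups` operation. The allowlist of intentionally public ports, the `find_public_ingress` helper and the sample group IDs are hypothetical.

```python
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}
# Hypothetical allowlist of ports that are meant to be publicly reachable.
ALLOWED_PUBLIC_PORTS = {443}


def find_public_ingress(security_groups):
    """Return (group_id, ports) pairs for rules open to any source address."""
    findings = []
    for group in security_groups:
        for rule in group.get("IpPermissions", []):
            cidrs = [r["CidrIp"] for r in rule.get("IpRanges", [])]
            cidrs += [r["CidrIpv6"] for r in rule.get("Ipv6Ranges", [])]
            if not OPEN_CIDRS.intersection(cidrs):
                continue  # rule is limited to specific source ranges
            if rule.get("IpProtocol") == "-1":
                ports = "all"  # "-1" means every protocol and port
            else:
                low, high = rule.get("FromPort"), rule.get("ToPort")
                if low == high and low in ALLOWED_PUBLIC_PORTS:
                    continue  # intentionally public, e.g. HTTPS
                ports = str(low) if low == high else f"{low}-{high}"
            findings.append((group["GroupId"], ports))
    return findings


# Made-up sample data in the assumed DescribeSecurityGroups shape.
groups = [
    {"GroupId": "sg-0aaa1111", "IpPermissions": [
        {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
         "IpRanges": [{"CidrIp": "0.0.0.0/0"}]}]},
    {"GroupId": "sg-0bbb2222", "IpPermissions": [
        {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
         "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
        {"IpProtocol": "tcp", "FromPort": 3389, "ToPort": 3389,
         "IpRanges": [{"CidrIp": "10.0.0.0/8"}]}]},
    {"GroupId": "sg-0ccc3333", "IpPermissions": [
        {"IpProtocol": "-1", "Ipv6Ranges": [{"CidrIpv6": "::/0"}]}]},
]

for group_id, ports in find_public_ingress(groups):
    print(f"{group_id}: ports {ports} open to the internet")
```

The allowlist is where the collaboration between security, IT and DevOps shows up in practice: it records which exposure is intended, so everything else can be flagged.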

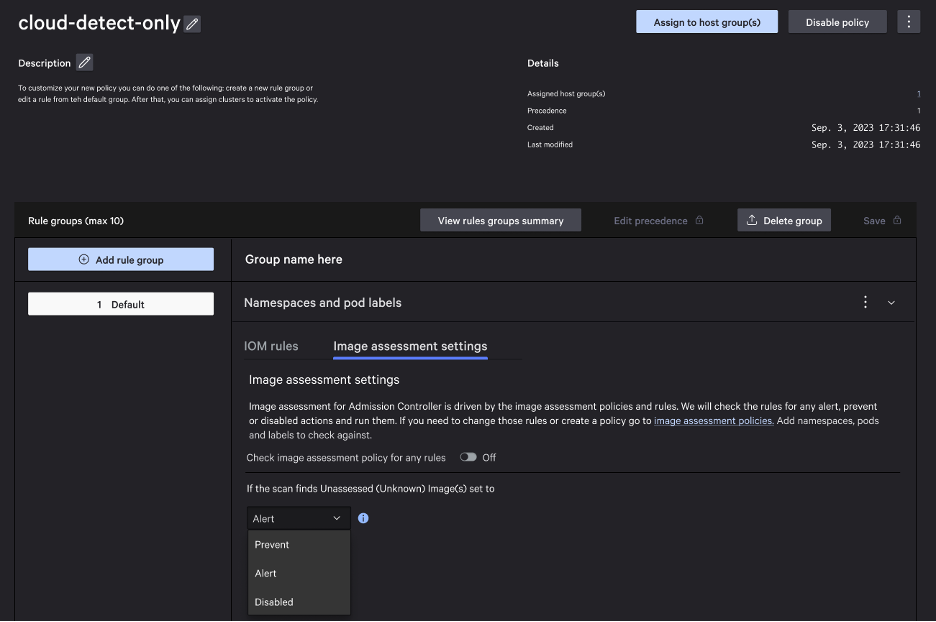

Falcon Cloud Security continuously monitors AWS service configurations for best practices, both in live environments and in pre-runtime IaC templates as part of a CI/CD or GitOps pipeline. Overly permissive network security policies are dynamically discovered and recorded as indicators of misconfiguration (IOMs), which are automatically correlated with all other security telemetry in the environment, along with insight into how the misconfiguration can be mitigated by the customer or maliciously used by the adversary.

Best Practice #4: Prioritize Alerts Based on Risk

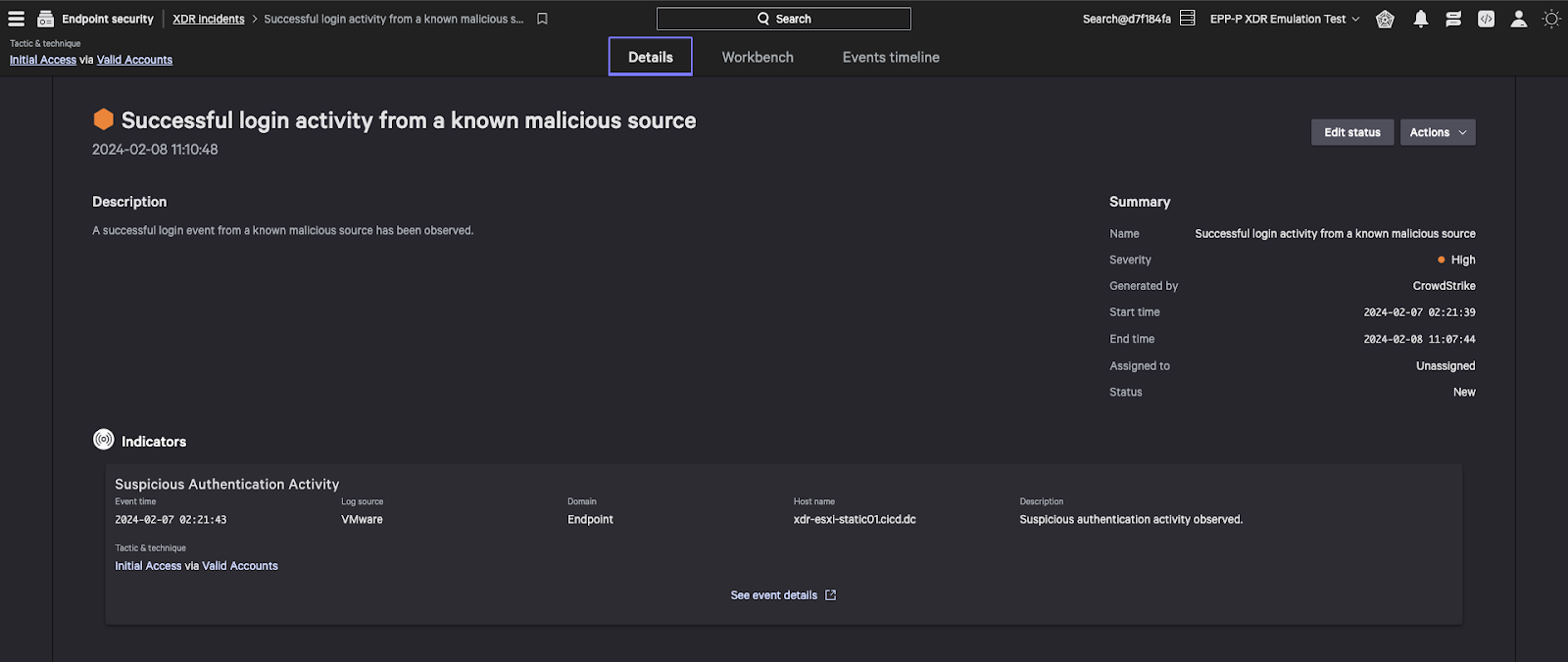

Adversaries are becoming more skilled in attacking cloud environments, as evidenced by a 75% increase in cloud intrusions year-over-year in 2023. They are also getting faster: The average breakout time for eCrime operators to move laterally from one breached host to another host was just 62 minutes in 2023. The rise of new technologies, such as generative AI, has the potential to lower the barrier to entry for less-skilled adversaries, making it easier to launch sophisticated attacks. Amid these evolving trends, effective alert management is paramount.

Cloud services are built to deliver a constant stream of API audit and service access logs, but sifting through all of this data can overwhelm security analysts and detract from their ability to focus on genuine threats. While some logs may indicate high-severity attacks that demand immediate response, most tend to be informational and often lack direct security implications. Generating alerts based on this data can be imprecise, potentially resulting in many false positives, each of which requires SecOps investigation. Alert investigations can consume precious time and scarce resources, leading to a situation where noisy security alerts prevent timely detection and effective response.

To navigate this complex landscape and enhance the effectiveness of cloud security operations, several best practices can be adopted to manage and prioritize alerts efficiently:

- Prioritize alerts strategically: Develop a systematic approach to capture and prioritize high-fidelity alerts. Implementing a triage process based on the severity of events helps focus resources on the most critical investigations.

- Create context around alerts: Enhance alert quality by enriching them with correlated data and context. This additional information increases confidence in the criticality of alerts, enabling more informed decision-making regarding their investigation.

- Integrate and correlate telemetry sources: Improve confidence in prioritizing or deprioritizing alerts by incorporating details from other relevant data sources or security tools. This combination allows for a more comprehensive understanding of the security landscape, aiding in the accurate identification of genuine threats.

- Outsource to a competent third party: For organizations overwhelmed by the volume of alerts, partnering with a managed detection and response (MDR) provider can be a viable solution. These partners can absorb the event burden, alleviating the bottleneck and allowing in-house teams to focus on strategic security initiatives.
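The triage and enrichment ideas above can be sketched as a simple scoring function. This is only an illustration: the `Alert` fields, the weights and the threshold are all hypothetical, and a real scoring model would be tuned to the environment and the tools that feed it.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    title: str
    severity: float                 # 0-10, as reported by the detecting tool
    asset_criticality: int          # 1 (low) to 3 (high), e.g. from tags
    corroborating_sources: int = 0  # other tools reporting related activity
    internet_exposed: bool = False  # context added during enrichment


def risk_score(alert):
    """Combine raw severity with context; the weights are illustrative."""
    score = alert.severity * alert.asset_criticality
    score += 5 * min(alert.corroborating_sources, 3)
    if alert.internet_exposed:
        score += 10
    return score


def triage(alerts, threshold=20):
    """Return alerts at or above the threshold, highest risk first."""
    ranked = sorted(alerts, key=risk_score, reverse=True)
    return [a for a in ranked if risk_score(a) >= threshold]


alerts = [
    Alert("Unusual API call from new region", 5.0, 1),
    Alert("Credential use from known-malicious IP", 8.0, 3, 2, True),
    Alert("Port scan against public instance", 2.0, 2, 0, True),
    Alert("Logging disabled on production trail", 7.0, 3, 1),
]

for alert in triage(alerts):
    print(f"{risk_score(alert):5.1f}  {alert.title}")
```

With these sample values, only the credential misuse and the disabled logging alerts cross the threshold, so analysts see two items rather than four.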

AWS services like Amazon GuardDuty, which is powered in part by CrowdStrike Falcon Adversary Intelligence indicators of compromise (IOCs), help surface and alert on suspicious and malicious activity within AWS accounts, prioritizing indicators of attack (IOAs) and IOCs based on risk severity.

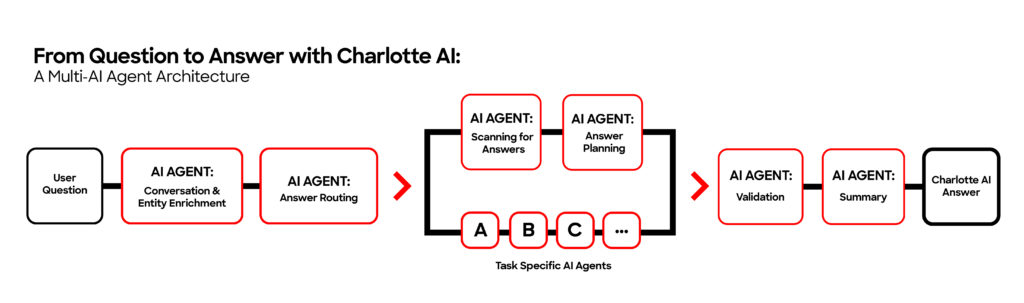

Falcon Cloud Security is a complete cloud security platform that unifies world-class threat intelligence and elite threat hunters. Falcon Cloud Security correlates telemetry and detections across IOMs, package vulnerabilities, suspicious behavior, adversary intelligence and third-party telemetry ingested through a library of data connectors to deliver a context-based risk assessment, which reduces false positives and automatically responds to stop breaches.

Best Practice #5: Enable Comprehensive Logging

Adversaries that gain access to a compromised account can operate virtually undetected, limited only by the permissions granted to the account they used to break in. This stealthiness is compounded by the potential for log tampering and manipulation, where malicious actors may alter or delete log files to erase evidence of their activities. Such actions make it challenging to trace the adversary’s movements, evaluate the extent of data tampering or theft, and understand the full scope of the security incident. The lack of a comprehensive audit trail due to disabled or misconfigured logging mechanisms hinders the ability to maintain visibility over cloud operations, making it more difficult to detect and respond to threats.

In response, organizations can:

- Enable comprehensive logging across the environment: Ensure AWS CloudTrail logs, S3 server access logs, Elastic Load Balancer (ELB) access logs, CloudFront logs and VPC flow logs are activated to maintain a detailed record of all activities and transactions.

- Ingest and alert on logs in your SIEM: Integrate and analyze logs within your security information and event management (SIEM) system to enable real-time alerts on suspicious activities. Retain logs even if immediate analysis capabilities are lacking, as they may provide valuable insights in future investigations.

- Ensure accuracy of logged data: For services behind proxies, like ELBs, ensure the logging captures original IP addresses from the X-Forwarded-For field to preserve crucial information for analysis.

- Detect and prevent log tampering: Monitor for API calls that attempt to disable logging and for unexpected changes in cloud services or account settings that could undermine logging integrity, in line with recommendations from the MITRE ATT&CK® framework. In addition, features such as S3 MFA Delete provide extra protection by requiring multifactor authentication to permanently delete object versions or change a bucket’s versioning state.
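As one possible way to monitor for log tampering, the sketch below scans CloudTrail event records for API calls that stop or weaken logging. It assumes each record is a dict with the standard CloudTrail fields `eventSource`, `eventName`, `eventTime`, `sourceIPAddress` and `userIdentity`. The watchlist is illustrative rather than exhaustive, and the `find_tampering` helper and sample records are hypothetical.

```python
# API calls that stop or weaken logging. Illustrative, not exhaustive.
TAMPERING_EVENTS = {
    "cloudtrail.amazonaws.com": {"StopLogging", "DeleteTrail",
                                 "UpdateTrail", "PutEventSelectors"},
    "ec2.amazonaws.com": {"DeleteFlowLogs"},
    "s3.amazonaws.com": {"PutBucketLogging"},
    "config.amazonaws.com": {"StopConfigurationRecorder",
                             "DeleteConfigurationRecorder"},
}


def find_tampering(events):
    """Return a summary of each event that matches the watchlist."""
    hits = []
    for event in events:
        watched = TAMPERING_EVENTS.get(event.get("eventSource"), set())
        if event.get("eventName") in watched:
            hits.append({
                "time": event.get("eventTime"),
                "action": event["eventName"],
                "actor": event.get("userIdentity", {}).get("arn", "unknown"),
                "source_ip": event.get("sourceIPAddress"),
            })
    return hits


# Made-up sample records using CloudTrail field names.
events = [
    {"eventSource": "cloudtrail.amazonaws.com", "eventName": "StopLogging",
     "eventTime": "2024-01-15T03:12:45Z", "sourceIPAddress": "203.0.113.10",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/bob"}},
    {"eventSource": "ec2.amazonaws.com", "eventName": "DescribeInstances",
     "eventTime": "2024-01-15T03:13:02Z", "sourceIPAddress": "203.0.113.10",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/bob"}},
    {"eventSource": "ec2.amazonaws.com", "eventName": "DeleteFlowLogs",
     "eventTime": "2024-01-15T03:14:30Z", "sourceIPAddress": "203.0.113.10",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/bob"}},
]

for hit in find_tampering(events):
    print(f"{hit['time']} {hit['action']} by {hit['actor']} from {hit['source_ip']}")
```

Some of these calls, such as `UpdateTrail`, also occur during legitimate administration, so matches are best treated as high-priority leads to investigate rather than confirmed incidents.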

CrowdStrike Falcon Cloud Security for AWS

Falcon Cloud Security integrates with over 50 AWS services to deliver effective protection at every stage of the cloud journey, combining multi-account deployment automation, sensor-based runtime protection, agentless API attack and misconfiguration detection, and pre-runtime scanning of containers, Lambda functions and IaC templates.

CrowdStrike leverages real-time IOAs, threat intelligence, evolving adversary tradecraft and enriched telemetry from across vectors such as endpoint, cloud, identity and more. This not only enhances threat detection, it also facilitates automated protection, remediation and elite threat hunting, aligned closely with understanding AWS assets, enforcing strict access control and authentication measures, and ensuring meticulous monitoring and management of cloud resources.

You can try Falcon Cloud Security through a Cloud Security Health Check, during which you’ll engage in a one-on-one session with a cloud security expert, evaluate your current cloud environment, and identify misconfigurations, vulnerabilities and potential cloud threats.

Protecting AWS Resources with Falcon Next-Gen SIEM

CrowdStrike Falcon® Next-Gen SIEM unifies data, AI, automation and intelligence in one AI-native platform to stop breaches. Falcon Next-Gen SIEM extends CrowdStrike’s industry-leading detection and response and expert services to all data, including AWS logs, for complete visibility and protection. Your team can detect and respond to cloud-based threats in record time with real-time alerts, live dashboards and blazing-fast search. Native workflow automation lets you streamline analysis of cloud incidents and say goodbye to tedious tasks.

For the first time ever, your analysts can investigate cloud-based threats from the same console they use to manage cloud workload security and CSPM. CrowdStrike consolidates multiple security tools, including next-gen SIEM and cloud security, on one platform to cut complexity and costs. Watch a 3-minute demo of Falcon Next-Gen SIEM to see it in action.

Additional Resources

: Cloud Workload Security, Q1 2024

The CrowdStrike Falcon platform has received the
